Balancing AI Innovation with Ethical Boundaries

In today's fast-paced world, artificial intelligence (AI) stands at the forefront of technological innovation, reshaping industries, enhancing efficiencies, and even changing the way we communicate. However, with great power comes great responsibility. As we dive deeper into the realm of AI, the question arises: how do we ensure that this remarkable technology is developed and deployed in a way that is not only innovative but also ethical? Balancing AI innovation with ethical boundaries is not just a necessity; it's a moral imperative that we must embrace to safeguard our future.

The rapid advancements in AI have led to unprecedented capabilities, from self-driving cars to intelligent virtual assistants. These innovations hold the promise of transforming lives for the better, but they also come with significant risks. Imagine a world where AI systems make decisions that affect our daily lives—decisions about healthcare, employment, and even justice. Without ethical guidelines, the potential for harm becomes alarmingly real. For instance, if an AI system is biased, it could lead to unfair treatment of individuals based on race, gender, or socioeconomic status. This is where the need for ethical boundaries becomes crystal clear.

Establishing these boundaries requires a collective effort from technologists, ethicists, policymakers, and society as a whole. It involves creating frameworks that not only promote innovation but also ensure that the benefits of AI are distributed equitably. Ethical considerations should be woven into the very fabric of AI development, guiding the processes from conception to deployment. This is akin to building a house: you wouldn’t start without a solid foundation. Similarly, we must lay the groundwork for ethical AI to prevent potential disasters down the line.

Moreover, as we navigate this complex landscape, we must remain vigilant about the implications of neglecting ethics in AI. The consequences can be dire, leading to a loss of public trust, increased inequality, and even societal unrest. It's crucial to engage in open dialogues about the ethical dilemmas posed by AI technologies. By fostering an environment where diverse perspectives are heard, we can cultivate a culture of responsibility that prioritizes human well-being over mere profit.

In summary, the journey toward balancing AI innovation with ethical boundaries is fraught with challenges but also filled with opportunities. By prioritizing ethical considerations, we can harness the power of AI to create a future that is not only technologically advanced but also just and equitable. As we move forward, let’s remember that the ultimate goal of AI should be to enhance human life, not diminish it. Together, we can ensure that innovation and ethics go hand in hand, paving the way for a brighter tomorrow.

The Importance of Ethical AI

In today's rapidly evolving technological landscape, the significance of ethical AI cannot be overstated. As artificial intelligence continues to permeate various sectors—ranging from healthcare to finance—it becomes imperative to ensure that these innovations not only serve their intended purposes but also align with societal values. The core of ethical AI lies in its ability to enhance human capabilities while safeguarding against potential harms. Imagine AI as a powerful tool, much like a double-edged sword; if wielded without caution, it can lead to unintended consequences that may affect millions.

One of the primary reasons to prioritize ethical considerations in AI development is the potential for harmful outcomes. When ethical frameworks are neglected, the risk of deploying biased algorithms increases, which can exacerbate existing inequalities. For instance, biased AI systems in hiring processes can lead to discrimination against certain demographic groups, perpetuating societal injustices. This highlights the necessity of embedding ethical principles at the heart of AI design. By doing so, we can create systems that are not only efficient but also equitable and just.

Moreover, the implications of ignoring ethics in AI extend beyond individual cases; they can shape public perception and trust in technology as a whole. If people perceive AI as a threat rather than a benefit, it could lead to widespread skepticism and resistance to adopting these technologies. This is why fostering an environment of trust is essential. A transparent approach to AI development, where stakeholders are informed about how decisions are made and how data is used, can significantly enhance public confidence.

To illustrate the importance of ethical AI, consider the following key points:

- Accountability: Establishing clear accountability in AI systems ensures that developers and organizations are responsible for the outcomes of their technologies.

- Transparency: Open communication about how AI systems function and make decisions promotes trust and understanding among users.

- Inclusivity: By involving diverse voices in the AI development process, we can create solutions that cater to a broader range of needs and perspectives.

In conclusion, the importance of ethical AI cannot be overlooked. As we continue to harness the power of artificial intelligence, we must remain vigilant and proactive in establishing ethical boundaries. This will not only protect individuals and communities but also pave the way for sustainable and beneficial AI innovations that truly enhance our society.

Regulatory Frameworks for AI

As we dive into the realm of artificial intelligence, we quickly realize that innovation is racing ahead at breakneck speed, while regulatory frameworks often lag behind. The relationship between AI advancements and the laws that govern them is like a dance—one partner leads with creativity and exploration, while the other tries to keep pace, ensuring safety and compliance. Without a robust regulatory framework, the potential for misuse and ethical breaches increases dramatically. So, what does this mean for the future of AI? Let's unpack the current landscape of AI regulations and explore their effectiveness.

Currently, various countries have implemented different regulatory approaches to manage the complexities of AI technologies. Some nations have established comprehensive guidelines, while others are still grappling with the foundational elements of AI governance. For instance, the European Union has taken significant strides with its AI Act, first proposed in 2021 and adopted in 2024, which creates a legal framework that categorizes AI systems by risk level. This proactive approach is designed to ensure that high-risk AI applications, like those used in healthcare and criminal justice, undergo stringent assessments before deployment.

On the other hand, the United States has adopted a more fragmented approach, with various states enacting their own laws and guidelines. This lack of uniformity can lead to confusion and inconsistency in how AI technologies are regulated across the nation. The challenge here is not just about creating laws but also about fostering collaboration between tech companies, policymakers, and the public. How do we ensure that these regulations are not just a tick-box exercise but genuinely serve to protect society?

To address these challenges, there is a growing consensus on the need for updated regulations that can keep pace with the rapid advancements in AI. This means not only revisiting existing laws but also anticipating future developments in technology. For example, as AI systems become more autonomous, regulations must evolve to address issues of accountability and liability. Who is responsible when an AI system makes a mistake? This question remains a hot topic among legal experts and technologists alike.

Moreover, the effectiveness of regulatory frameworks hinges on their adaptability. In a field as dynamic as AI, regulations must be flexible enough to accommodate new technologies and methodologies. A static approach could stifle innovation and push developers to operate in a grey area, where ethical considerations are overlooked. Therefore, engaging in continuous dialogue between stakeholders is crucial for shaping regulations that are both effective and forward-thinking.

In summary, the regulatory frameworks for AI are still in their infancy, and there is much work to be done. As we navigate this complex landscape, it is essential to strike a balance between fostering innovation and ensuring ethical boundaries. The stakes are high, and the implications of our regulatory decisions will resonate for years to come.

- What are the main challenges in regulating AI? The primary challenges include the rapid pace of technological advancement, the complexity of AI systems, and the need for international cooperation.

- How can we ensure that AI regulations are effective? Continuous engagement with stakeholders, regular updates to laws, and a focus on ethical considerations are essential for effective AI regulations.

- Are there global standards for AI regulation? Currently, there is no single global standard, but various countries are developing their frameworks, leading to a patchwork of regulations worldwide.

Global Perspectives on AI Regulation

When it comes to the regulation of artificial intelligence (AI), the world is anything but uniform. Different countries are navigating the complex waters of AI governance based on their unique cultural, economic, and political landscapes. For instance, in the United States, the approach to AI regulation is often characterized by a more laissez-faire attitude, encouraging innovation while relying on existing laws to address potential issues. This contrasts sharply with the European Union, which is known for its stringent regulatory frameworks aimed at protecting individual rights and data privacy. The EU’s General Data Protection Regulation (GDPR) has set a high standard for data protection that influences AI practices globally.

In China, the government takes a more centralized approach, actively promoting AI development as a national priority while implementing regulations that emphasize state control and security. This reflects a different set of values where innovation is pursued aggressively, yet under the watchful eye of regulatory bodies that prioritize national interests. Meanwhile, countries in Africa and Latin America are still in the early stages of developing AI regulations, often focusing on building foundational policies that can support technological growth without stifling innovation.

To illustrate these varying approaches, consider the following table that summarizes some key differences in AI regulation across selected regions:

| Region | Regulatory Approach | Key Focus |
|---|---|---|
| United States | Laissez-faire | Innovation and market-driven solutions |
| European Union | Strict regulations | Data privacy and individual rights |
| China | Centralized control | National security and state interests |
| Africa | Developing frameworks | Building foundational policies |
| Latin America | Emerging regulations | Support for technological growth |

As we examine these global perspectives, it becomes evident that the conversation around AI regulation is not just about creating laws but also about fostering an environment where ethical considerations are prioritized. For example, the EU’s proactive stance on ethical AI aims to create a framework that not only governs but also guides the development of AI technologies in a socially responsible manner. On the other hand, the US model raises questions about the balance between innovation and accountability, especially in areas like data privacy and algorithmic bias.

Ultimately, the global landscape of AI regulation is a tapestry woven from diverse threads of policy, culture, and technology. Each region’s unique approach reflects its values and priorities, shaping the future of AI in ways that can either enhance or hinder its potential. As we move forward, the challenge lies in finding common ground that respects these differences while promoting a cohesive framework for ethical AI development worldwide.

- What is the primary goal of AI regulation? The main goal is to ensure that AI technologies are developed and used responsibly, minimizing harm while maximizing benefits to society.

- How do different countries approach AI regulation? Countries vary widely in their approaches, with some prioritizing innovation and others focusing on stringent regulations to protect individual rights.

- Why is ethical AI important? Ethical AI is crucial to prevent biases, protect privacy, and ensure that AI technologies serve the public good.

- What challenges do regulators face in governing AI? Challenges include the rapid pace of technological advancement, the complexity of AI systems, and resistance from industries that may be affected by regulations.

Case Studies of AI Regulation

When it comes to understanding the complexities of AI regulation, examining real-world case studies can provide invaluable insights. One notable example is the European Union's General Data Protection Regulation (GDPR), which has set a precedent for data privacy and protection not just in Europe but globally. The GDPR emphasizes the importance of transparency, consent, and the right to be forgotten, which are crucial in the context of AI systems that process vast amounts of personal data. This regulation has prompted many organizations to reevaluate their data handling practices, ensuring they prioritize user rights while still innovating.

On the other side of the Atlantic, the United States has taken a more fragmented approach to AI regulation. For instance, California's Consumer Privacy Act (CCPA) mirrors some aspects of the GDPR but lacks the same level of comprehensiveness. This inconsistency can lead to confusion among businesses operating in multiple states, highlighting the need for a more unified regulatory framework. The challenges faced by companies trying to comply with varying state laws underscore the importance of establishing coherent guidelines that can adapt to the rapid pace of technological advancement.

Another compelling case study comes from China, where the government has implemented a series of regulations aimed at controlling AI development and deployment. The Chinese approach is primarily focused on state control and surveillance, raising ethical questions about privacy and individual rights. While these regulations can foster rapid technological advancements, they also pose risks regarding human rights and freedom of expression, illustrating the delicate balance that must be struck in AI regulation.

To further illustrate the successes and failures in AI regulation, let's consider a comparative analysis of these three regions:

| Region | Regulatory Framework | Focus Areas | Challenges |
|---|---|---|---|
| European Union | GDPR | Data protection, user rights | Compliance costs, implementation complexity |
| United States | CCPA | Data privacy | Inconsistency across states |
| China | State-controlled regulations | Surveillance, control | Human rights concerns |

These case studies reveal that while there is no one-size-fits-all solution to AI regulation, the lessons learned can guide future policies. The EU's GDPR demonstrates the potential for comprehensive regulation to protect individual rights while promoting innovation. Conversely, the fragmented approach in the U.S. illustrates the confusion that can arise from inconsistent laws, while China's model raises critical ethical questions that must be addressed. As we look to the future, it is essential to incorporate these insights into the development of regulatory frameworks that are not only effective but also ethical.

- What is the GDPR, and why is it significant? The General Data Protection Regulation is a comprehensive data protection law in the EU that emphasizes user rights and data privacy, influencing global standards.

- How does AI regulation differ between countries? Countries like the EU, U.S., and China have different approaches based on cultural, political, and economic factors, leading to varying levels of regulation and enforcement.

- What are the ethical concerns surrounding AI regulation? Ethical concerns include privacy rights, surveillance, and the potential for discrimination, necessitating a careful balance between innovation and individual freedoms.

Challenges in Implementing Regulations

Implementing regulations for artificial intelligence (AI) is akin to trying to catch smoke with your bare hands. The rapid pace of technological advancement often leaves regulatory bodies scrambling to keep up. One of the primary challenges is the **technological complexity** of AI systems. Unlike traditional software, whose behavior is fixed by explicitly written rules, AI systems learn their behavior from data, and models that are retrained or updated on new data can change in ways that are hard to predict. This makes it incredibly difficult for regulators to create static rules that can effectively govern such dynamic technologies.

Moreover, the **industry resistance** to regulation cannot be overlooked. Many companies view regulations as a hindrance to innovation and a potential threat to their competitive edge. This resistance often stems from a fear that regulations will stifle creativity or impose excessive compliance costs. As a result, there can be a significant pushback against any attempts to impose regulatory frameworks, leading to a tug-of-war between innovation and oversight.

Another layer of complexity arises from the **global nature of AI**. Technologies developed in one country can easily cross borders, making it challenging to enforce regulations that may vary significantly from one jurisdiction to another. For instance, while some countries prioritize strict data privacy laws, others may adopt a more laissez-faire approach to encourage technological advancement. This divergence can create a regulatory patchwork that complicates compliance for multinational corporations.

Furthermore, there is often a **lack of expertise** within regulatory bodies. Many policymakers may not fully understand the intricacies of AI technologies, which can lead to poorly designed regulations that fail to address the actual risks associated with AI. To bridge this gap, it is crucial to foster collaboration between technologists and regulators. This collaboration can help ensure that regulations are informed by a thorough understanding of the technology, allowing for more effective oversight.

Lastly, the **ethical implications** of AI add another layer of challenge to regulation. Regulators must balance the need for innovation with the responsibility to protect citizens from potential harms. This includes addressing concerns about privacy, security, and the potential for bias in AI systems. Striking this balance requires careful consideration and a willingness to adapt as new ethical dilemmas arise.

In summary, the challenges in implementing regulations for AI are multifaceted and complex. From technological intricacies to industry pushback and global disparities, regulators face an uphill battle. However, by fostering collaboration, enhancing expertise, and remaining adaptable to ethical considerations, we can work towards creating a regulatory environment that supports responsible AI innovation.

- What are the main challenges in regulating AI? The primary challenges include technological complexity, industry resistance, global disparities, lack of expertise among regulators, and ethical implications.

- Why is industry resistance a problem for AI regulation? Companies may fear that regulations will stifle innovation and impose excessive compliance costs, leading to pushback against regulatory measures.

- How can regulators keep up with the rapid advancements in AI technology? By fostering collaboration with technologists and enhancing regulatory expertise, regulators can create informed and effective oversight.

- What role do ethical considerations play in AI regulation? Ethical considerations are crucial for balancing innovation with the protection of citizens from potential harms such as privacy violations and biased outcomes.

Ethical Frameworks Guiding AI Development

In the rapidly evolving landscape of artificial intelligence, establishing ethical frameworks is not just a luxury—it's a necessity. These frameworks serve as the backbone for responsible AI development, ensuring that technology aligns with human values and societal norms. Imagine building a house without a solid foundation; it might look good initially, but over time, it can crumble. Similarly, without ethical guidelines, AI technologies can lead to unforeseen consequences that may harm individuals and communities.

At the core of ethical AI development are several key principles that guide researchers and developers. These principles include transparency, accountability, fairness, and privacy. Each of these plays a critical role in shaping how AI systems are designed and deployed:

- Transparency: This principle emphasizes the need for clear communication about how AI systems operate. Users should understand how decisions are made, which is crucial for building trust.

- Accountability: Developers and organizations must take responsibility for the outcomes of their AI systems. This includes addressing any negative impacts that arise from their use.

- Fairness: AI systems should be designed to avoid bias and discrimination, ensuring equitable treatment for all individuals, regardless of their background.

- Privacy: Protecting user data is paramount. Ethical frameworks must prioritize the safeguarding of personal information to maintain individual rights.

These principles are not just theoretical concepts; they have real-world applications. For instance, many organizations are adopting the IEEE Ethically Aligned Design framework, which provides guidelines for embedding ethical considerations into AI design processes. This framework encourages developers to consider the broader societal implications of their technologies, urging them to ask questions like: "How will this AI system affect marginalized communities?" and "What are the long-term impacts on employment and privacy?"

Moreover, ethical frameworks can vary across different industries. For example, in healthcare, the focus might be on patient safety and informed consent, while in finance, the emphasis could be on preventing discrimination in lending practices. Understanding these nuances is essential for creating AI systems that are not only innovative but also socially responsible.

As we look toward the future, the challenge lies in adapting these ethical frameworks to keep pace with the rapid advancements in AI technology. Continuous dialogue among stakeholders—including developers, ethicists, policymakers, and the public—is crucial. This collaboration can help refine ethical standards and ensure that AI serves humanity's best interests. After all, the ultimate goal of AI should be to enhance human capabilities and improve quality of life, not to undermine them.

In conclusion, ethical frameworks are indispensable in guiding the development of AI technologies. They provide the necessary guidelines to navigate the complex moral landscape that accompanies innovation. By prioritizing transparency, accountability, fairness, and privacy, we can harness the full potential of AI while safeguarding our values and rights.

- What is the purpose of ethical frameworks in AI development? Ethical frameworks guide developers to create AI systems that are responsible, fair, and aligned with societal values.

- How can we ensure AI systems are fair? By implementing rigorous testing for bias, involving diverse teams in development, and adhering to ethical principles.

- What role does transparency play in AI? Transparency helps build trust by allowing users to understand how AI systems make decisions and what data they use.

AI Bias and Fairness

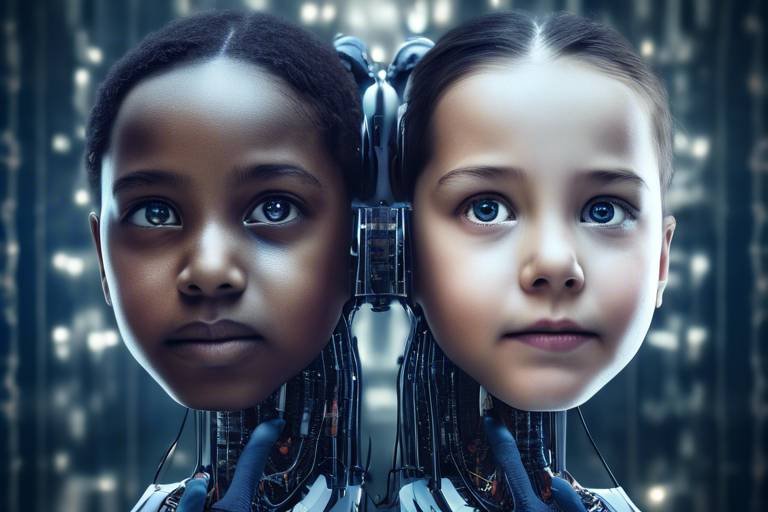

In today's rapidly advancing technological landscape, the concept of AI bias has emerged as a critical concern. Bias in artificial intelligence refers to the systematic favoritism or discrimination that can occur when algorithms are trained on data that reflects societal inequalities. This is not just a technical issue; it’s a societal one that can have profound impacts on people's lives. Imagine a world where a job application is screened by an AI that favors certain demographics over others—this could lead to unfair hiring practices and perpetuate existing inequalities. Therefore, addressing AI bias is not merely an option; it is a necessity for ensuring fairness and equity in AI applications.

So, where does this bias come from? The sources of AI bias can be traced back to several factors, including:

- Data Quality: If the data used to train an AI system is flawed or unrepresentative, the outcomes will likely reflect those biases.

- Algorithm Design: The choices made by developers during the algorithm design process can introduce bias, whether intentional or not.

- Human Prejudices: AI systems often learn from historical data, which may contain human biases that are then replicated in AI outputs.
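A simple first check on data quality is to compare how often each group appears in the training data with its share of the population the system is meant to serve. The Python sketch below assumes that each training example carries a group label and that reference shares (for example, from census figures) are available; the function name `representation_gaps` and the sample data are hypothetical. A large gap is a prompt for investigation, not proof of bias on its own.

```python
from collections import Counter


def representation_gaps(group_labels, reference_shares):
    """Return, for each group, its share of the training data minus its reference share.

    group_labels: one (hypothetical) group label per training example.
    reference_shares: assumed expected share of each group in the target population.
    Negative values mean the group is under-represented in the training data.
    """
    counts = Counter(group_labels)  # Counter returns 0 for groups never seen
    total = len(group_labels)
    return {
        group: counts[group] / total - expected
        for group, expected in reference_shares.items()
    }


if __name__ == "__main__":
    # Hypothetical data: 80% of examples come from group A, although A and B are
    # assumed to be equally common in the population the system will serve.
    training_groups = ["A"] * 80 + ["B"] * 20
    gaps = representation_gaps(training_groups, {"A": 0.5, "B": 0.5})
    for group, gap in gaps.items():
        print(f"{group}: {gap:+.2f}")  # A: +0.30, then B: -0.30
```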

To combat these biases, it is essential to implement strategies that promote fairness in AI systems. One of the most effective ways to detect bias is rigorous testing and evaluation of algorithms. By analyzing the performance of AI systems across diverse demographic groups, developers can identify discrepancies and work to correct them. Additionally, techniques such as adversarial debiasing, which trains a model alongside an adversary that tries to predict a protected attribute (such as race or gender) from the model's outputs, can reduce bias during training so that it is less likely to surface in later decisions.
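As a rough illustration of testing performance across demographic groups, the sketch below computes false positive and false negative rates separately for each group from a model's binary predictions. It assumes labeled evaluation data with a group attribute; the function name `error_rates_by_group` and the sample data are hypothetical. Large gaps between groups, such as one group being wrongly flagged far more often than another, are the kind of discrepancy this testing is meant to surface.

```python
from collections import defaultdict


def error_rates_by_group(y_true, y_pred, groups):
    """Return false positive and false negative rates per group.

    y_true and y_pred hold binary labels (1 = positive outcome); groups holds
    the (hypothetical) demographic label of each example.
    A rate is None when a group has no examples of the relevant true class.
    """
    counts = defaultdict(lambda: {"fp": 0, "fn": 0, "neg": 0, "pos": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        c = counts[group]
        if truth == 1:
            c["pos"] += 1
            c["fn"] += int(pred == 0)  # missed a true positive
        else:
            c["neg"] += 1
            c["fp"] += int(pred == 1)  # wrongly predicted positive
    return {
        group: {
            "fpr": c["fp"] / c["neg"] if c["neg"] else None,
            "fnr": c["fn"] / c["pos"] if c["pos"] else None,
        }
        for group, c in counts.items()
    }


if __name__ == "__main__":
    # Hypothetical evaluation data: the model is perfect for group A,
    # but wrongly flags every true negative in group B.
    y_true = [1, 1, 0, 0, 1, 1, 0, 0]
    y_pred = [1, 1, 0, 0, 1, 0, 1, 1]
    groups = ["A"] * 4 + ["B"] * 4
    for group, rates in error_rates_by_group(y_true, y_pred, groups).items():
        print(group, rates)
    # A {'fpr': 0.0, 'fnr': 0.0}
    # B {'fpr': 1.0, 'fnr': 0.5}
```

Comparing error rates rather than overall accuracy matters because a model can look accurate on average while concentrating its mistakes on one group.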

Real-world implications of AI bias can be severe, particularly for marginalized communities. For instance, consider facial recognition technology, which has been shown to misidentify individuals from certain racial backgrounds at a much higher rate than others. This can lead to wrongful accusations, surveillance overreach, and a general sense of distrust in technology. Moreover, biased algorithms in lending can result in unfair loan denials for specific demographic groups, exacerbating financial inequalities. These examples highlight the urgent need for responsible AI development that prioritizes fairness and equity.

As we look towards the future, it’s imperative that we build a culture of accountability in AI development. This includes not only technical solutions but also a commitment to ethical practices that consider the broader societal impacts of AI systems. By fostering collaboration among technologists, ethicists, and policymakers, we can work towards creating AI that not only innovates but also uplifts every segment of society.

- What is AI bias? AI bias refers to the tendency of algorithms to produce prejudiced results due to flawed data or design choices.

- How can AI bias affect society? AI bias can lead to unfair treatment in areas like hiring, law enforcement, and lending, disproportionately affecting marginalized communities.

- What can be done to mitigate AI bias? Strategies include improving data quality, rigorous testing, and employing debiasing techniques in algorithm design.

- Why is fairness in AI important? Ensuring fairness in AI is crucial for building trust, promoting equality, and preventing the perpetuation of societal inequalities.

Detecting and Mitigating AI Bias

In the rapidly evolving landscape of artificial intelligence, detecting and mitigating AI bias is not just a technical challenge; it's a moral imperative. Bias in AI systems can lead to unfair treatment and discrimination, disproportionately affecting marginalized communities. So, how do we tackle this complex issue? First, we must understand that bias can creep into AI systems at various stages, from data collection to algorithm design. The sources of bias are often rooted in historical inequalities and societal prejudices, which can be inadvertently perpetuated by algorithms trained on flawed data.

To effectively detect bias, organizations can employ several strategies. One effective method is to conduct regular audits of AI systems, analyzing their decision-making processes and outcomes. By comparing the performance of AI across different demographic groups, we can identify discrepancies that indicate bias. For instance, if a hiring algorithm consistently favors one demographic over another, that is a strong signal that bias may be at play and warrants closer investigation. Additionally, utilizing diverse datasets during the training phase can help mitigate the risk of embedding biases into the AI's learning process.
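One common way to quantify whether a hiring algorithm favors one group is to compare selection rates. The sketch below divides each group's selection rate by the highest group's rate and flags groups whose ratio falls below 0.8, the "four-fifths" rule of thumb from the US Uniform Guidelines on Employee Selection Procedures. The function names and sample data are hypothetical, and the threshold is a screening heuristic rather than a legal or statistical verdict.

```python
def selection_rates(decisions, groups):
    """Share of positive decisions (e.g. 'advance to interview') for each group."""
    totals, selected = {}, {}
    for decision, group in zip(decisions, groups):
        totals[group] = totals.get(group, 0) + 1
        selected[group] = selected.get(group, 0) + (1 if decision else 0)
    return {group: selected[group] / totals[group] for group in totals}


def adverse_impact_flags(decisions, groups, threshold=0.8):
    """Return each group's impact ratio and the groups falling below `threshold`.

    The impact ratio is a group's selection rate divided by the highest
    selection rate among all groups (the four-fifths rule uses 0.8).
    """
    rates = selection_rates(decisions, groups)
    highest = max(rates.values())
    if highest == 0:
        # Nobody was selected, so the ratio is undefined for every group.
        return {group: None for group in rates}, []
    ratios = {group: rate / highest for group, rate in rates.items()}
    flagged = sorted(group for group, ratio in ratios.items() if ratio < threshold)
    return ratios, flagged


if __name__ == "__main__":
    # Hypothetical audit data: 10 applicants per group, 1 = selected.
    decisions = [1] * 5 + [0] * 5 + [1] * 2 + [0] * 8
    groups = ["A"] * 10 + ["B"] * 10
    ratios, flagged = adverse_impact_flags(decisions, groups)
    print(ratios)   # {'A': 1.0, 'B': 0.4}
    print(flagged)  # ['B']
```

Running this check on every model update, rather than once, is one way to turn the "regular audits" described above into routine practice.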

Moreover, it is crucial to involve a diverse group of stakeholders in the development and evaluation of AI systems. This includes not only engineers and data scientists but also ethicists, sociologists, and representatives from affected communities. By fostering a collaborative environment, we can ensure that multiple perspectives are considered, ultimately leading to fairer outcomes. Transparency in AI processes is also vital; organizations should be open about how their algorithms function and the data they utilize. This openness can build trust and allow for external scrutiny, which is essential for accountability.

Once bias is detected, it’s imperative to implement corrective measures. This can involve re-training algorithms with more representative data or adjusting the algorithms themselves to prioritize fairness. For example, organizations can adopt techniques such as fairness-aware algorithms, which are designed specifically to minimize bias in outcomes. Additionally, continuous monitoring and feedback loops can help organizations adapt their AI systems over time, ensuring they remain fair and equitable as societal norms evolve.
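Fairness-aware techniques come in many forms. As one example, the sketch below implements reweighing, a pre-processing technique from the fairness literature that assigns each training example a weight so that, in the weighted data, group membership and outcome are statistically independent. The function names and sample data are hypothetical, and reweighing is only one option alongside in-training and post-processing methods.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Weight each example so that group membership and label become independent.

    weight(g, y) = P(g) * P(y) / P(g, y), estimated from counts, which equals
    (count of group g * count of label y) / (n * count of pairs (g, y)).
    Combinations that are rarer than independence would predict get weights
    above 1; more common ones get weights below 1.
    """
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        group_counts[g] * label_counts[y] / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]


def weighted_positive_rate(weights, groups, labels, group):
    """Weighted share of positive labels within one group."""
    total = sum(w for w, g in zip(weights, groups) if g == group)
    positive = sum(w for w, g, y in zip(weights, groups, labels) if g == group and y == 1)
    return positive / total


if __name__ == "__main__":
    # Hypothetical historical data: group A was hired 3 of 4 times, group B 1 of 4.
    groups = ["A"] * 4 + ["B"] * 4
    labels = [1, 1, 1, 0, 1, 0, 0, 0]
    weights = reweighing_weights(groups, labels)
    for g in ("A", "B"):
        # After reweighing, both groups have the same weighted positive rate.
        print(g, round(weighted_positive_rate(weights, groups, labels, g), 3))
```

Many scikit-learn estimators accept such weights through the `sample_weight` argument of `fit`. Reweighing only adjusts the training data; it does not guarantee fair predictions, so the model's outputs still need to be audited afterwards.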

In summary, while the challenge of detecting and mitigating AI bias is significant, it is not insurmountable. By adopting a proactive approach that includes regular audits, diverse stakeholder involvement, and transparent practices, organizations can work towards creating AI systems that are not only innovative but also ethical and fair. The responsibility lies with all of us to ensure that AI serves as a tool for progress, rather than a perpetuator of inequality.

- What is AI bias? AI bias refers to systematic and unfair discrimination in AI systems, often resulting from flawed data or algorithms.

- How can organizations detect AI bias? Organizations can detect AI bias through regular audits, performance comparisons across demographic groups, and stakeholder feedback.

- What steps can be taken to mitigate AI bias? Steps include using diverse datasets, implementing fairness-aware algorithms, and fostering transparency in AI processes.

- Why is it important to involve diverse stakeholders in AI development? Involving diverse stakeholders ensures that multiple perspectives are considered, which can lead to fairer and more equitable AI outcomes.

Real-World Implications of AI Bias

When we talk about AI bias, it’s not just a technical issue; it’s a human issue that can have profound real-world implications. Imagine a world where decisions affecting your life—like job applications, loan approvals, or even criminal sentencing—are influenced by algorithms that are inherently biased. This is not a dystopian future; it's happening right now. Research has shown that AI systems can reflect and even amplify existing biases present in the data they are trained on. For instance, if a hiring algorithm is trained on data from a company that has historically favored one demographic over others, it may unfairly disadvantage qualified candidates from underrepresented groups.

The consequences of AI bias can be severe and far-reaching. In the realm of criminal justice, biased algorithms can lead to disproportionate targeting of marginalized communities. Studies have indicated that predictive policing tools, which analyze crime data to forecast where crimes are likely to occur, can lead to over-policing in certain neighborhoods, often those predominantly inhabited by people of color. This creates a vicious cycle where communities are unfairly scrutinized and criminalized, further entrenching societal inequalities.

Moreover, in the financial sector, biased AI can perpetuate economic disparities. For instance, algorithms used in loan approval processes might deny credit to individuals based on biased historical data, which could be influenced by factors like race or socioeconomic status. This not only hampers individual opportunities but also stifles economic growth in entire communities. The ramifications of such biases can ripple across generations, affecting access to education, housing, and employment.

To illustrate the impact of AI bias, consider the following table that summarizes some key real-world implications:

| Sector | Implication | Example |
|---|---|---|
| Criminal Justice | Over-policing and wrongful convictions | Predictive policing tools targeting minority neighborhoods |
| Healthcare | Disparities in treatment recommendations | Algorithms favoring certain demographics for treatments |
| Employment | Discrimination in hiring practices | Hiring algorithms favoring candidates from specific backgrounds |
| Finance | Unequal access to credit | Loan approval algorithms disadvantaging minority applicants |

As we navigate this complex landscape, it’s crucial to recognize that the stakes are high. The failure to address AI bias not only undermines the credibility of AI technologies but also poses a significant risk to social justice and equity. We must ask ourselves: how can we create a future where AI systems are designed to be fair and inclusive? The answer lies in a collective effort to scrutinize the data we use, the algorithms we develop, and the societal structures we uphold.

- What is AI bias? AI bias refers to the presence of prejudice in AI algorithms that can lead to unfair treatment of individuals based on race, gender, or other characteristics.

- How does AI bias affect society? AI bias can perpetuate existing inequalities in various sectors, including criminal justice, healthcare, and finance, leading to discrimination and social injustice.

- What can be done to mitigate AI bias? Strategies to mitigate AI bias include using diverse training data, conducting regular audits of AI systems, and involving stakeholders from various backgrounds in the development process.

Frequently Asked Questions

- What is the significance of ethical considerations in AI development?

Ethical considerations in AI development are crucial because they help to ensure that technologies are designed and implemented in ways that benefit society while minimizing potential harm. Neglecting ethics can lead to harmful consequences, including privacy violations, discrimination, and the erosion of trust in technology.

- How do regulatory frameworks impact AI innovation?

Regulatory frameworks are essential for guiding the responsible development of AI technologies. They set the rules and standards that companies must follow, ensuring that innovation does not come at the expense of safety, fairness, and accountability. Without effective regulations, there is a risk of unchecked AI advancements that could have negative societal impacts.

- Are there global differences in AI regulation?

Yes, different countries have unique approaches to AI regulation, shaped by their cultural, economic, and political contexts. For instance, some nations may prioritize innovation and economic growth, while others focus on protecting individual rights and ensuring ethical standards. This diversity can lead to varying levels of oversight and enforcement in AI governance.

- What are some challenges in implementing AI regulations?

Implementing AI regulations can be challenging due to several factors, including the rapid pace of technological advancement, the complexity of AI systems, and resistance from industry stakeholders. These challenges can hinder the establishment of effective regulatory measures that keep up with the evolving landscape of AI technologies.

- How does AI bias affect society?

AI bias can have severe implications for society, particularly for marginalized communities. When AI systems exhibit bias, they can perpetuate and even exacerbate existing inequalities, leading to unfair treatment in areas like hiring, lending, and law enforcement. Addressing AI bias is critical to promoting fairness and equity in the use of AI technologies.

- What steps can be taken to mitigate AI bias?

To mitigate AI bias, organizations can implement several strategies, such as conducting regular audits of algorithms, using diverse training data, and applying fairness metrics (for example, comparing approval rates or error rates across demographic groups) to evaluate outcomes. By identifying and addressing bias during the development process, companies can create more equitable AI systems that serve all users fairly.
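One example of a fairness metric for evaluating outcomes is the gap in true positive rates between groups: among people who genuinely qualify, what share does the model approve in each group? Equal rates correspond to what is often called "equal opportunity." The Python sketch below is a minimal illustration with hypothetical helper names (`true_positive_rates`, `equal_opportunity_difference`) and invented data; it is not taken from any particular fairness library, and which metric is appropriate depends on the context.

```python
def true_positive_rates(groups, y_true, y_pred):
    """Per-group share of actual positives (y_true == 1) the model also predicts positive.

    Groups with no actual positives are left out, since their rate is undefined.
    """
    if not (len(groups) == len(y_true) == len(y_pred)):
        raise ValueError("inputs must have the same length")
    actual = {}
    caught = {}
    for group, truth, pred in zip(groups, y_true, y_pred):
        if truth == 1:
            actual[group] = actual.get(group, 0) + 1
            caught[group] = caught.get(group, 0) + (1 if pred == 1 else 0)
    return {group: caught[group] / actual[group] for group in actual}


def equal_opportunity_difference(tprs):
    """Largest gap in true positive rate between any two groups (0.0 means equal)."""
    return max(tprs.values()) - min(tprs.values())


# Invented example: 1 = qualified (y_true) / approved (y_pred).
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
y_true = [1, 1, 1, 0, 1, 1, 1, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]

tprs = true_positive_rates(groups, y_true, y_pred)
print({g: round(r, 2) for g, r in tprs.items()})     # {'A': 1.0, 'B': 0.33}
print(round(equal_opportunity_difference(tprs), 2))  # 0.67
```

Here every qualified applicant in group A is approved, but only one in three qualified applicants in group B is, a gap an audit would want to explain before the system is deployed.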