Ethics and AI: The Risks and Rewards

As we stand on the brink of a technological revolution, the conversation surrounding artificial intelligence (AI) is more critical than ever. The rapid evolution of AI technologies brings with it a plethora of opportunities and challenges. While the potential for AI to enhance our lives is immense, the ethical implications of its development and implementation cannot be overlooked. This article delves into these implications, examining both the potential benefits and the risks associated with AI across various sectors. Are we prepared to navigate this complex landscape where innovation meets morality?

Understanding the significance of ethical considerations in AI development is crucial for ensuring that technology serves humanity positively while avoiding harmful consequences. Imagine a world where AI systems operate without ethical guidelines; the potential for misuse and harm could be catastrophic. Ethical AI is not just a buzzword; it's a necessary framework that guides developers, companies, and policymakers in creating AI systems that respect human rights and promote fairness. By prioritizing ethics, we can harness the power of AI to foster innovation while safeguarding our values and societal norms.

While AI holds great promise, it also poses various risks that must be addressed. These include bias, privacy violations, and security threats. Identifying these risks is essential for developing responsible AI systems that do not compromise our ethical standards. For instance, if AI systems are trained on biased data, the decisions they make could perpetuate existing inequalities. Furthermore, as AI becomes more integrated into our daily lives, the potential for privacy breaches increases, raising questions about how our data is collected and used. Are we ready to face these challenges head-on?

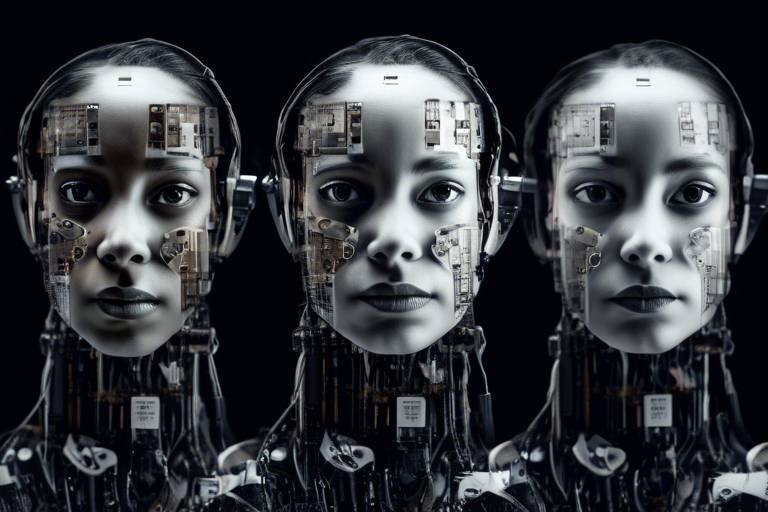

Bias in AI algorithms can lead to unfair treatment and discrimination. Addressing these biases is vital for creating equitable AI solutions. Consider this: if an AI system is trained predominantly on data from one demographic group, it may not perform well for individuals outside that group. This can result in skewed outcomes that favor certain populations over others. The implications are profound, affecting everything from hiring practices to law enforcement decisions. Therefore, recognizing and mitigating bias is not just a technical challenge; it’s a moral obligation.

Bias can originate from various sources, including training data, algorithm design, and human influence. Understanding these sources is key to mitigating bias in AI systems. For example, if the data used to train an AI is unrepresentative or flawed, the resulting model will likely reflect those imperfections. Moreover, the way algorithms are designed can inadvertently introduce biases if not carefully monitored. Lastly, human biases can seep into AI through the decisions made by developers and stakeholders. Recognizing these sources is the first step toward creating fairer AI systems.

The consequences of biased AI can be severe, affecting individuals' lives and perpetuating societal inequalities. For instance, biased algorithms in hiring can lead to qualified candidates being overlooked based solely on their demographic characteristics. Such outcomes not only harm individuals but also hinder societal progress. Understanding these impacts is essential for ethical AI development, as it compels us to ask: what kind of future do we want to create with AI?

AI systems often rely on vast amounts of data, raising significant privacy concerns. Balancing data utility with individual privacy rights is a critical challenge. As AI becomes more sophisticated, it can analyze personal data in ways that were previously unimaginable, leading to potential invasions of privacy. For instance, facial recognition technologies have sparked debates about surveillance and consent. Are we willing to sacrifice our privacy for the sake of progress? This question looms large in discussions about the ethical use of AI.

Despite the risks, AI offers numerous benefits that can transform our world for the better. These include improved efficiency, enhanced decision-making, and innovative solutions across various industries. Imagine a healthcare system where AI assists doctors in diagnosing diseases with unprecedented accuracy or a business landscape where AI optimizes operations, leading to cost savings and increased productivity. The potential is staggering, and it’s essential to highlight these benefits as we navigate the complexities of ethical AI.

AI technologies can revolutionize healthcare by improving diagnostics, personalizing treatment plans, and streamlining administrative processes, ultimately enhancing patient outcomes. For instance, AI algorithms can analyze medical images quickly and, on some tasks, with accuracy comparable to that of human radiologists, which can support earlier detection of conditions like cancer. Additionally, AI can help tailor treatments to individual patients by analyzing genetic information and lifestyle factors. The result? A more effective and personalized approach to healthcare that could save countless lives.

In the business sector, AI can optimize operations, enhance customer experiences, and drive innovation, leading to increased competitiveness and profitability. From automating routine tasks to providing insights through data analysis, AI empowers businesses to make informed decisions quickly. Imagine a retail company that uses AI to predict consumer trends and optimize inventory levels, reducing waste and improving customer satisfaction. The integration of AI in business is not just a trend; it’s a necessary evolution in a rapidly changing marketplace.

- What is ethical AI? Ethical AI refers to the development and implementation of artificial intelligence systems that prioritize fairness, accountability, and transparency.

- What are the risks associated with AI? The primary risks include bias, privacy violations, and security threats that can arise from the misuse of AI technologies.

- How can we mitigate bias in AI? By ensuring diverse training data, regularly auditing algorithms, and promoting awareness of human biases, we can work towards more equitable AI systems.

- What are the benefits of AI in healthcare? AI can enhance diagnostics, personalize treatment plans, and streamline administrative processes, leading to improved patient outcomes.

- How is AI transforming the business sector? AI optimizes operations, enhances customer experiences, and drives innovation, making businesses more competitive and profitable.

The Importance of Ethical AI

In today's rapidly evolving technological landscape, the significance of ethical considerations in artificial intelligence (AI) development is more crucial than ever. As we integrate AI into various aspects of our lives—from healthcare to finance and beyond—it's imperative that we ensure this powerful technology serves humanity positively. The potential of AI to revolutionize industries is undeniable, but without a solid ethical framework, we risk unleashing unforeseen consequences that could harm individuals and society as a whole.

Imagine a world where AI systems make decisions without human oversight, leading to outcomes that could be unfair or discriminatory. This scenario highlights the urgent need for ethical AI, which focuses on developing technology that aligns with human values and societal norms. By prioritizing ethics in AI, we can create systems that not only enhance efficiency but also promote fairness, transparency, and accountability.

One of the primary reasons for emphasizing ethical AI is to prevent the exacerbation of existing inequalities. For instance, if AI algorithms are trained on biased data, they may perpetuate stereotypes and reinforce societal biases. This can have serious implications, particularly in sensitive areas like hiring practices, law enforcement, and lending. To combat this, developers must actively seek out and mitigate biases throughout the AI lifecycle, from data collection to algorithm design.

Furthermore, ethical AI encourages collaboration and inclusivity in the development process. By involving diverse stakeholders—including ethicists, community representatives, and individuals from various backgrounds—we can ensure that AI technologies reflect a broader range of perspectives and values. This collaborative approach not only enriches the development process but also fosters trust among users, which is essential for the widespread adoption of AI.

Lastly, embracing ethical AI can lead to greater innovation and creativity. When developers prioritize ethical considerations, they are more likely to think critically about the implications of their work, leading to novel solutions that address real-world challenges. In this way, ethical AI is not just about avoiding harm; it's also about unlocking the full potential of technology to improve lives and create a better future for all.

In summary, the importance of ethical AI cannot be overstated. By focusing on fairness, inclusivity, and innovation, we can harness the power of artificial intelligence to create a brighter, more equitable future. As we continue to explore the vast possibilities of AI, let us remain vigilant in our commitment to ethical principles, ensuring that technology serves as a force for good in our society.

- What is ethical AI? Ethical AI refers to the development and implementation of artificial intelligence systems that prioritize fairness, accountability, and transparency, ensuring that technology benefits humanity as a whole.

- Why is it important to address bias in AI? Addressing bias in AI is crucial because biased algorithms can lead to unfair treatment of individuals and perpetuate societal inequalities, negatively impacting vulnerable communities.

- How can we ensure AI respects privacy? To ensure AI respects privacy, developers must implement strong data protection measures, obtain informed consent, and be transparent about how data is used.

- What role do diverse teams play in ethical AI development? Diverse teams bring a variety of perspectives and experiences to the table, helping to identify potential biases and ethical concerns that may be overlooked by homogeneous groups.

Potential Risks of AI

Artificial Intelligence (AI) is a double-edged sword, offering groundbreaking advancements while simultaneously posing significant risks. It's like having a powerful tool that, if misused, can lead to unintended consequences. As we dive deeper into the world of AI, it becomes increasingly crucial to understand these risks to navigate the technology responsibly. At the heart of the matter are three primary concerns: bias, privacy violations, and security threats. Each of these risks can have profound implications for individuals and society as a whole.

First, let's talk about bias in AI algorithms. Imagine a world where decisions about job applications, loan approvals, or even criminal justice outcomes are influenced by biased AI systems. That’s a reality we could face if we don’t address the biases that can seep into AI technologies. Bias can originate from various sources, such as skewed training data, flawed algorithm design, or even the unconscious biases of the developers themselves. For instance, if an AI system is trained on historical data that reflects societal inequalities, it may inadvertently perpetuate those inequalities. This can lead to unfair treatment of certain groups, which is not just unethical but can also have serious legal ramifications.

Next up is the issue of privacy concerns. AI systems thrive on data, and they generally perform better the more of it they have. However, this reliance on vast amounts of personal data raises significant questions about privacy. Are we sacrificing our privacy for the sake of convenience? Balancing the utility of data with the right to privacy is a tightrope walk that many organizations are struggling to manage. For example, AI technologies used in surveillance can enhance security but at the cost of individual privacy. It’s a classic case of “big brother” versus personal freedom, and finding that balance is critical.

Finally, we cannot ignore the security threats posed by AI. As AI systems become more sophisticated, they can also be exploited for malicious purposes. Cybercriminals can use AI to automate attacks, making them more efficient and harder to detect. This raises the stakes in cybersecurity, as organizations must not only defend against traditional threats but also prepare for AI-driven attacks. The potential for AI to be weaponized is a chilling thought, and it underscores the importance of developing robust security measures to protect against these emerging threats.

In summary, while AI holds remarkable potential, it is accompanied by significant risks that we must address proactively. Understanding the implications of bias, privacy violations, and security threats is essential for developing responsible AI systems. As we continue to innovate and integrate AI into our daily lives, we must remain vigilant and ensure that ethical considerations are at the forefront of our technological advancements.

- What are the main risks associated with AI? The primary risks include bias in algorithms, privacy concerns, and security threats.

- How can bias in AI be mitigated? Bias can be reduced by using diverse training data, regularly auditing algorithms, and involving diverse teams in the development process.

- What measures can be taken to protect privacy in AI systems? Organizations can implement data anonymization techniques, obtain informed consent, and ensure transparency in data usage.

- Are there any regulations governing AI? Yes, many countries are developing regulations to govern AI usage, focusing on ethical standards and accountability.

Bias in AI Algorithms

Bias in AI algorithms is a critical issue that can lead to unfair treatment and discrimination across various sectors. Imagine a world where a machine learning model, designed to assist in hiring, inadvertently favors one demographic over another simply because of the data it was trained on. This isn't just a hypothetical scenario; it's a reality that highlights the importance of addressing bias in AI systems. When algorithms are trained on historical data that reflects societal inequalities, they can perpetuate those very biases, leading to outcomes that are not only unjust but also damaging to individuals and communities.

To understand the roots of bias in AI, we need to explore its sources. The primary origins of bias can be categorized into three main areas:

- Training Data: If the data used to train an AI model is skewed or unrepresentative, the AI will likely produce biased outcomes. For example, if an AI system is trained predominantly on data from one demographic group, it may not perform well for others.

- Algorithm Design: The way an algorithm is structured can introduce bias. Certain design choices may prioritize specific features that inadvertently lead to discriminatory results.

- Human Influence: Bias can also stem from the developers themselves. If the creators of an AI system hold biased views, intentionally or unintentionally, these biases can be embedded in the algorithms.

Recognizing these sources is essential for mitigating bias in AI systems. However, understanding the implications of bias is equally important. The consequences of biased AI can be severe, affecting individuals' lives and perpetuating societal inequalities. For instance, biased algorithms in the criminal justice system can lead to unjust sentencing, while those used in lending can unfairly deny loans to certain groups. The ripple effects can be profound, leading to a cycle of disadvantage that is hard to break.

As we move forward in the development of AI technologies, it is imperative that we prioritize fairness and equity in our algorithms. This means not only identifying and correcting biases but also creating systems that actively promote inclusivity and diversity. By doing so, we can harness the power of AI to benefit all of humanity rather than reinforce existing disparities.
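
One way to make "identifying biases" concrete is to compare how often a system makes a favorable decision for each group. The sketch below is illustrative only: the group labels and decisions are made-up data, and `selection_rates` and `disparate_impact_ratio` are hypothetical helper names, not part of any particular library. A ratio well below 1.0 suggests one group is selected much less often than another and deserves a closer look; it does not prove discrimination on its own.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the fraction of positive decisions per group.

    `records` is an iterable of (group, selected) pairs, where `selected`
    is True when the system recommended the candidate.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, selected in records:
        totals[group] += 1
        if selected:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest selection rate to the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so there is no gap to measure
    return min(rates.values()) / highest


# Hypothetical screening decisions: (group label, was the candidate shortlisted?)
decisions = (
    [("group_a", True)] * 6 + [("group_a", False)] * 4
    + [("group_b", True)] * 3 + [("group_b", False)] * 7
)

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates)
print(f"disparate impact ratio: {ratio:.2f}")
```

A check like this is only a starting point. Which metric is appropriate depends on the application, and a low ratio should trigger a review of the data and the model rather than an automatic conclusion.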

Sources of Bias

When we talk about bias in AI, it's essential to understand that it doesn't just appear out of thin air. Instead, it stems from various sources that can significantly impact how AI systems operate. One major source of bias is the training data used to develop these algorithms. If the data sets are not diverse or representative of the entire population, the AI can learn and reinforce existing stereotypes or prejudices. For instance, if an AI is trained predominantly on data from a specific demographic, it may not perform well for individuals outside that group, leading to skewed results.

Another critical factor is the algorithm design itself. The way algorithms are structured can inadvertently introduce bias. Developers might make assumptions about data relationships that don't hold true across different contexts, which can lead to discriminatory outcomes. For example, if an algorithm is designed with certain parameters that favor one group over another, it can perpetuate inequality even if the underlying data is unbiased.

Moreover, human influence plays a significant role in bias creation. AI systems are built by humans, and as such, they can carry the biases and prejudices of their creators. This human element can manifest in various ways, such as through the selection of training data, the framing of problems, or even the interpretation of results. It's akin to a painter who, despite their best intentions, might unintentionally infuse their work with personal biases, coloring the final piece in ways that reflect their own perspective rather than an objective reality.

To truly grasp the sources of bias in AI, we must consider them as a combination of these factors:

- Training Data: Incomplete or unrepresentative data sets.

- Algorithm Design: Structural choices that favor certain outcomes.

- Human Influence: Developer biases that seep into the AI's functionality.

Recognizing these sources is crucial for anyone involved in AI development. By identifying where biases can creep in, we can take proactive steps to mitigate them and strive for more equitable AI solutions. It's not just about creating technology that works; it's about ensuring that it works for everyone, regardless of their background or identity.
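
For the training data source in particular, a simple first check is to compare how groups are represented in the dataset against the population the system is meant to serve. The following is a minimal sketch under stated assumptions: the group labels, the reference shares, and the `representation_gaps` helper are all hypothetical, and the 5 percentage point tolerance is an arbitrary illustrative threshold.

```python
from collections import Counter


def representation_gaps(samples, reference_shares, tolerance=0.05):
    """Compare group shares in a dataset against reference population shares.

    Returns {group: (observed_share, expected_share)} for every group whose
    observed share differs from the reference by more than `tolerance`.
    """
    counts = Counter(samples)
    total = sum(counts.values())
    gaps = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total if total else 0.0
        if abs(observed - expected) > tolerance:
            gaps[group] = (observed, expected)
    return gaps


# Hypothetical group labels attached to training examples.
training_groups = ["a"] * 80 + ["b"] * 15 + ["c"] * 5
# Hypothetical reference shares, e.g. for the population the system will serve.
reference = {"a": 0.5, "b": 0.3, "c": 0.2}

gaps = representation_gaps(training_groups, reference)
for group, (observed, expected) in gaps.items():
    print(f"{group}: {observed:.0%} of training data vs {expected:.0%} expected")
```

Balanced representation does not guarantee a fair model, since algorithm design and human choices can still introduce bias, but a large gap is an early warning that the model may perform poorly for under-represented groups.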

- What is bias in AI? Bias in AI refers to systematic favoritism or discrimination that can occur in AI algorithms, leading to unfair outcomes.

- How can bias in AI be mitigated? Bias can be mitigated by using diverse training data, designing algorithms carefully, and continuously monitoring AI systems for unintended consequences.

- Why is it important to address bias in AI? Addressing bias is crucial to ensure fairness, equity, and trust in AI technologies, which ultimately affects society as a whole.

Consequences of Bias

The consequences of bias in artificial intelligence are not just theoretical; they have real-world implications that can deeply affect individuals and society as a whole. When AI systems are trained on biased data or designed with inherent prejudices, the outcomes can be detrimental. For instance, biased algorithms in hiring processes may lead to qualified candidates being overlooked simply because of their gender, ethnicity, or age. This not only harms the individuals affected but also deprives organizations of diverse talent that can drive innovation.

Moreover, biased AI can exacerbate existing societal inequalities. Consider the criminal justice system, where predictive policing algorithms can disproportionately target minority communities. This creates a vicious cycle, reinforcing stereotypes and leading to a lack of trust in law enforcement. The ramifications extend beyond just individual lives; they can ripple through communities, affecting social cohesion and perpetuating cycles of disadvantage.

In healthcare, biased AI can lead to misdiagnoses or inadequate treatment plans for marginalized groups, further entrenching health disparities. For example, if an AI system is primarily trained on data from a specific demographic, it may not accurately predict health risks for individuals outside that group. This can result in life-altering consequences, including delayed treatments or improper medical advice.

To illustrate the various consequences of bias in AI, consider the following table:

| Sector | Potential Bias Consequences |
|---|---|
| Employment | Discrimination in hiring processes, loss of diverse talent |
| Criminal Justice | Over-policing of minority communities, loss of public trust |
| Healthcare | Misdiagnoses, inadequate treatment for underrepresented populations |

As we can see, the consequences of biased AI are multifaceted and can lead to significant harm across various sectors. It is crucial for developers and stakeholders to recognize these potential pitfalls and actively work towards creating more equitable AI systems. By doing so, we not only protect individuals but also foster a more inclusive and fair society.

- What is bias in AI? Bias in AI refers to systematic and unfair discrimination that can arise from the data used to train AI systems or from the algorithms themselves.

- How can bias in AI be mitigated? Bias can be mitigated through diverse training data, regular audits of AI systems, and inclusive design practices that consider the needs of various demographics.

- What are the real-world impacts of biased AI? Real-world impacts include discrimination in hiring, unequal treatment in healthcare, and increased surveillance in minority communities, among others.

- Why is ethical AI important? Ethical AI is crucial to ensure that technology serves humanity positively, minimizing harm and promoting fairness and equality.

Privacy Concerns

In our digital age, where information flows like water, the privacy concerns surrounding artificial intelligence (AI) have become a hot topic. Imagine a world where every click, every interaction, and every preference is meticulously tracked and analyzed. Sounds a bit like a sci-fi movie, right? But this is the reality we face as AI systems increasingly rely on vast amounts of data to function effectively. The challenge lies in balancing the utility of this data with the fundamental rights of individuals to maintain their privacy.

AI technologies often gather personal information from various sources, including social media, online transactions, and even smart devices. This information is then used to train algorithms that can predict behaviors and preferences. While this can lead to improved services and personalized experiences, it raises significant concerns about how this data is collected, stored, and used. Are we sacrificing our privacy for convenience? This question is at the forefront of the ongoing debate about ethical AI.

Moreover, the lack of transparency in how AI systems operate adds another layer of complexity to privacy concerns. Many users are unaware of the extent to which their data is being utilized. For instance, how many times have you clicked 'I agree' without reading the fine print? This lack of awareness can lead to a false sense of security, making individuals vulnerable to data breaches and misuse. To illustrate the potential risks, consider the following table that outlines some key privacy concerns associated with AI:

| Privacy Concern | Description | Potential Impact |
|---|---|---|
| Data Collection | AI systems often collect extensive personal data. | Increased risk of data breaches and identity theft. |
| Lack of Transparency | Users may not know how their data is used. | Loss of trust in technology and companies. |
| Data Misuse | Data can be used for purposes other than intended. | Potential discrimination and targeted advertising. |

As we navigate this complex landscape, it's crucial for developers and organizations to adopt a responsible approach to data handling. This includes implementing robust data protection measures, ensuring transparency in data usage, and allowing users to have control over their personal information. After all, privacy is not just a luxury; it's a fundamental human right.
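
As one illustration of what a data protection measure can look like in practice, the sketch below keeps only the fields an analysis needs and replaces the direct identifier with a keyed hash. It is a minimal example under stated assumptions: the record layout, the field names, and the `pseudonymize` and `minimize_record` helpers are hypothetical. Note also that this is pseudonymization rather than full anonymization, because anyone holding the key can recompute the mapping.

```python
import hashlib
import hmac
import secrets


def pseudonymize(identifier: str, key: bytes) -> str:
    """Replace a direct identifier with a keyed hash (HMAC-SHA256)."""
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize_record(record: dict, key: bytes, allowed_fields: set) -> dict:
    """Keep only the fields the analysis needs and pseudonymize the user id."""
    cleaned = {name: value for name, value in record.items() if name in allowed_fields}
    cleaned["user_ref"] = pseudonymize(record["user_id"], key)
    return cleaned


# Generated here for the demo; a real key would be stored securely,
# separately from the data it protects.
key = secrets.token_bytes(32)

# Hypothetical raw record; the field names are illustrative only.
raw = {
    "user_id": "alice@example.com",
    "age_band": "30-39",
    "purchase_total": 120.5,
    "home_address": "1 Example Street",
}

cleaned = minimize_record(raw, key, allowed_fields={"age_band", "purchase_total"})
print(cleaned)
```

The same user always maps to the same `user_ref` under a given key, so records can still be linked for analysis without exposing the email address, and fields the analysis does not need (here, the address) never leave the source system.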

In conclusion, while AI has the potential to enhance our lives in many ways, we must remain vigilant about privacy concerns. By fostering an environment of trust and accountability, we can harness the benefits of AI without compromising our personal freedoms. So, the next time you engage with an AI system, take a moment to consider the implications of your data. Are you comfortable with how it’s being used?

- What are the main privacy concerns related to AI? The main concerns include data collection, lack of transparency, and potential data misuse.

- How can individuals protect their privacy when using AI? Individuals can protect their privacy by being aware of data policies, using privacy settings, and limiting the information they share.

- Are there regulations in place to protect privacy in AI? Yes, various regulations, such as GDPR in Europe, aim to protect personal data and privacy rights.

Benefits of AI

Despite the concerns surrounding artificial intelligence, the benefits of AI are vast and transformative. As we delve into the various sectors where AI is making waves, it becomes clear that these technologies are not just tools; they are catalysts for change. Imagine a world where mundane tasks are automated, allowing humans to focus on creativity and innovation. This is precisely what AI offers: an opportunity to enhance our capabilities and improve overall efficiency.

One of the most significant advantages of AI lies in its ability to improve efficiency. In industries ranging from manufacturing to customer service, AI systems can process data far faster than a person could, which speeds up routine decisions. This efficiency translates to cost savings and the ability to scale operations without a corresponding increase in labor. For instance, in the manufacturing sector, AI-driven robots can work around the clock, reducing downtime on production lines and increasing output.

Moreover, AI enhances decision-making processes. By analyzing vast amounts of data, AI can uncover patterns and insights that would be impossible for humans to detect. This capability is particularly beneficial in sectors like finance, where algorithms can predict market trends, assess risks, and even automate trading. In healthcare, AI systems can analyze patient data to recommend personalized treatment plans, leading to better health outcomes. The ability to make informed decisions based on real-time data is a game-changer across various fields.

Another exciting aspect of AI is its role in fostering innovation. With AI handling routine tasks, professionals can dedicate their time to creative problem-solving and innovative thinking. For example, in the tech industry, AI can assist developers by automating code generation or debugging processes, allowing them to focus on building new features and improving user experiences. This shift not only increases productivity but also encourages a culture of innovation within organizations.

In summary, the benefits of AI are profound and multifaceted. From improving efficiency and enhancing decision-making to driving innovation, AI is reshaping how we work and live. However, it is essential to approach these advancements with a sense of responsibility and awareness of the ethical implications that come with them. As we continue to explore the potential of AI, we must ensure that it serves humanity positively and equitably.

- What are the primary benefits of AI? AI improves efficiency, enhances decision-making, and fosters innovation across various sectors.

- How does AI improve healthcare? AI technologies can enhance diagnostics, personalize treatment plans, and streamline administrative processes.

- Can AI help in business operations? Yes, AI optimizes operations, enhances customer experiences, and drives innovation, leading to increased competitiveness and profitability.

AI in Healthcare

Artificial Intelligence is not just a buzzword in the tech world; it’s a transformative force that is reshaping the landscape of healthcare. Imagine walking into a clinic where your medical history is analyzed in seconds, and treatment plans are tailored specifically for you, all thanks to AI. This is not science fiction; it’s happening now! AI technologies have the potential to revolutionize healthcare in several ways, enhancing patient care and streamlining processes that were once cumbersome.

One of the most exciting applications of AI in healthcare is in the realm of diagnostics. Traditional diagnostic methods can be time-consuming and sometimes inaccurate. However, AI algorithms can analyze medical images, such as X-rays and MRIs, with remarkable precision. For instance, some studies have reported that AI can match or outperform human radiologists in detecting certain conditions, such as pneumonia or tumors. This can lead to quicker diagnoses and, ultimately, better patient outcomes.

Moreover, AI can personalize treatment plans by analyzing vast amounts of data, including genetic information and lifestyle factors. This means that instead of a one-size-fits-all approach, patients receive care tailored to their specific needs. For example, AI can help oncologists determine the most effective chemotherapy regimen for a patient based on their unique genetic makeup. This level of personalization is a game-changer in how we approach treatment.

In addition to diagnostics and personalized treatment, AI is making waves in administrative processes within healthcare facilities. By automating tasks such as scheduling appointments, managing patient records, and billing, AI reduces the administrative burden on healthcare professionals. This allows them to focus more on what they do best: caring for patients. The efficiency gained through AI can lead to shorter wait times and improved patient satisfaction.

However, as we embrace these advancements, it’s essential to consider the ethical implications. The integration of AI in healthcare comes with challenges, such as ensuring data privacy and addressing potential biases in AI algorithms. Striking a balance between leveraging AI's capabilities and protecting patient rights is crucial for the future of healthcare.

In summary, the potential of AI in healthcare is immense, offering improved diagnostics, personalized treatment plans, and streamlined administrative processes. As we continue to explore these technologies, we must also remain vigilant about the ethical considerations that accompany them. The future of healthcare is bright, and AI is at the forefront of this exciting evolution.

- What are the main benefits of AI in healthcare? AI improves diagnostics, personalizes treatment plans, and streamlines administrative tasks.

- How does AI enhance diagnostic accuracy? AI algorithms can analyze medical images and data quickly and, for some tasks, as accurately as or more accurately than human practitioners.

- Are there any risks associated with AI in healthcare? Yes, risks include potential biases in algorithms and concerns about data privacy.

- Can AI replace healthcare professionals? While AI can assist and enhance many functions, it cannot replace the human touch and judgment that healthcare professionals provide.

AI in Business

In today's fast-paced world, the integration of Artificial Intelligence (AI) in business is not just a trend; it's a revolution. Companies that embrace AI technologies are not merely keeping up with the competition; they are setting the pace in their respective industries. From streamlining operations to enhancing customer experiences, AI is reshaping the landscape of business in ways we could only dream of a few years ago. Imagine a world where mundane tasks are automated, allowing employees to focus on creative and strategic initiatives. This is the power of AI!

One of the most significant advantages of AI in business is its ability to analyze vast amounts of data. Businesses generate enormous amounts of data every day, and AI can sift through this information, identify patterns, and provide actionable insights in a fraction of the time it would take a human. For instance, AI-driven analytics tools can forecast sales trends, optimize inventory levels, and even predict customer behavior. This not only leads to better decision-making but also enhances operational efficiency, ultimately driving profitability.
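As a rough illustration of what forecasting a sales trend can mean at its simplest, the sketch below predicts the next period as the average of the most recent ones. It is a deliberately minimal baseline, not how any particular analytics product works. The function name `moving_average_forecast`, the `window` parameter, and the sales figures are all assumptions made up for this example.

```python
from statistics import mean


def moving_average_forecast(sales, window=3):
    """Forecast the next period as the mean of the last `window` observations."""
    if window <= 0:
        raise ValueError("window must be positive")
    if len(sales) < window:
        raise ValueError("need at least `window` observations")
    return mean(sales[-window:])


# Hypothetical monthly unit sales, invented purely for illustration.
monthly_sales = [120, 135, 128, 142, 150, 147]

forecast = moving_average_forecast(monthly_sales, window=3)
print(f"Forecast for next month: {forecast:.1f} units")
```

Real forecasting tools typically go further, accounting for seasonality, promotions, and other signals, but a simple baseline like this is a useful reference point for judging whether a more complex model is actually adding value.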

Moreover, AI can significantly enhance customer experiences. With the advent of chatbots and virtual assistants, businesses can provide 24/7 customer support, answering queries and resolving issues in real-time. This level of responsiveness is crucial in today's market, where customers expect immediate answers. Additionally, AI can personalize marketing efforts by analyzing customer preferences and behaviors, allowing businesses to deliver targeted content and recommendations. This personalized approach creates a deeper connection with customers, fostering loyalty and increasing sales.

However, the implementation of AI in business isn't without its challenges. Companies must navigate issues related to data privacy and security, ensuring that customer information is protected while still leveraging it for insights. Furthermore, there is a growing concern about the potential for job displacement as AI takes over tasks traditionally performed by humans. It's essential for businesses to address these concerns proactively by investing in employee training and development, ensuring that their workforce is equipped to thrive alongside AI technologies.

To illustrate the impact of AI in business, consider the following table showcasing key areas where AI is making a difference:

| Area of Impact | Description | Examples |
|---|---|---|
| Customer Service | AI enhances customer interactions through chatbots and virtual assistants. | Chatbots, AI-driven help desks |
| Data Analytics | AI analyzes large datasets to uncover trends and insights. | Predictive analytics, sales forecasting |
| Marketing | AI personalizes marketing efforts based on customer behavior. | Targeted ads, recommendation engines |
| Operational Efficiency | AI automates routine tasks, freeing up human resources for strategic work. | Inventory management, supply chain optimization |

In conclusion, the integration of AI in business is a double-edged sword. While it offers remarkable benefits such as improved efficiency, enhanced customer experiences, and data-driven decision-making, it also presents challenges that must be addressed. Businesses that effectively leverage AI technologies while considering ethical implications and potential risks are well positioned to lead the way in the future. As we continue to explore the capabilities of AI, one thing is clear: the future of business is intertwined with the evolution of artificial intelligence.

- What is AI in business? AI in business refers to the use of artificial intelligence technologies to improve operations, enhance customer experiences, and make data-driven decisions.

- How does AI improve customer service? AI improves customer service through tools like chatbots that provide instant responses and personalized assistance, enhancing customer satisfaction.

- What are the risks associated with AI in business? Risks include data privacy concerns, potential job displacement, and the possibility of biased decision-making if AI systems are not properly managed.

- Can AI help in decision-making? Yes, AI can analyze data and provide insights that assist businesses in making informed decisions quickly and effectively.

Frequently Asked Questions

- What are the main ethical concerns regarding AI?

The primary ethical concerns surrounding AI include bias in algorithms, privacy violations, and security threats. These issues can lead to unfair treatment of individuals, misuse of personal data, and potential harm to society. Addressing these concerns is crucial for the responsible development of AI technologies.

- How does bias affect AI systems?

Bias in AI systems can arise from various sources such as skewed training data, flawed algorithm design, or human influence. This bias may result in discriminatory outcomes, affecting marginalized groups disproportionately. For instance, if an AI system is trained on data that lacks diversity, it may not perform well for all users, leading to unfair advantages or disadvantages.
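One simple way to spot the kind of uneven performance described above is to measure accuracy separately for each group rather than only overall. The sketch below is a minimal illustration under stated assumptions: the group names, labels, and predictions are hypothetical, and `accuracy_by_group` is a helper invented for this example, not part of any library.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Return {group: accuracy} for (group, true_label, predicted_label) records."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, truth, predicted in records:
        total[group] += 1
        if truth == predicted:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


# Hypothetical evaluation records, invented for illustration:
# (group, true label, model prediction)
records = [
    ("group_a", 1, 1), ("group_a", 0, 0), ("group_a", 1, 1), ("group_a", 0, 0),
    ("group_b", 1, 0), ("group_b", 0, 0), ("group_b", 1, 0), ("group_b", 0, 1),
]

scores = accuracy_by_group(records)
gap = max(scores.values()) - min(scores.values())

for group, accuracy in sorted(scores.items()):
    print(f"{group}: accuracy {accuracy:.2f}")
print(f"Largest accuracy gap between groups: {gap:.2f}")
```

A large gap does not by itself prove unfair treatment, and accuracy is only one of several possible fairness measures, but a per-group breakdown like this can reveal problems that a single overall score would hide.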

- What are the consequences of biased AI?

The consequences of biased AI can be profound, leading to systemic inequality and impacting individuals' lives. For example, biased algorithms in hiring processes can perpetuate discrimination, while biased facial recognition systems can result in wrongful accusations. Understanding these impacts is essential to ensure that AI serves everyone fairly.

- How can we protect privacy in AI systems?

To protect privacy in AI systems, it's essential to implement robust data governance practices. This includes anonymizing data, obtaining informed consent from users, and ensuring transparency in how data is used. By balancing the utility of data with individual privacy rights, we can create AI systems that respect user privacy while delivering valuable insights.
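As a small, hedged illustration of one step in that direction, the sketch below replaces a direct identifier with a keyed hash and coarsens an exact age into a band before the record is used for analysis. Strictly speaking this is pseudonymization rather than full anonymization, since anyone holding the key could recompute the tokens. The field names, the sample record, and the `pseudonymize` helper are assumptions made up for this example.

```python
import hashlib
import hmac


def pseudonymize(identifier: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed SHA-256 hash (HMAC), hex-encoded."""
    return hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


# Hypothetical key: in practice it would be generated randomly and stored securely.
SECRET_KEY = b"replace-with-a-securely-stored-key"

# Hypothetical customer record, invented for illustration.
record = {"email": "jane@example.com", "age": 34, "purchase_total": 59.90}

safe_record = {
    # The raw email is dropped; only a token derived from it is kept.
    "user_token": pseudonymize(record["email"], SECRET_KEY),
    # An exact age is reduced to a decade band, e.g. 34 becomes "30s".
    "age_band": f"{record['age'] // 10 * 10}s",
    "purchase_total": record["purchase_total"],
}

print(safe_record)
```

Techniques like this reduce exposure but do not remove the need for informed consent and transparency, because combinations of the remaining fields can sometimes still identify a person.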

- What are the benefits of AI in healthcare?

AI has the potential to revolutionize healthcare by enhancing diagnostics, personalizing treatment plans, and streamlining administrative processes. For example, AI algorithms can analyze medical images with high accuracy, leading to earlier detection of diseases. This ultimately improves patient outcomes and helps healthcare providers deliver more efficient care.

- How does AI improve business operations?

AI enhances business operations by optimizing processes, improving customer experiences, and driving innovation. By automating repetitive tasks, businesses can save time and resources, allowing employees to focus on more strategic initiatives. Additionally, AI-driven analytics can provide valuable insights into customer behavior, helping businesses tailor their offerings and improve satisfaction.