The Ethics Behind AI: Risks and Rewards

Artificial Intelligence (AI) is not just a buzzword; it's a transformative force that is reshaping our world in unprecedented ways. As we stand on the brink of this technological revolution, the ethical implications of AI become increasingly significant. The question we must grapple with is not merely whether AI can improve our lives, but at what cost. This article explores the ethical implications of artificial intelligence, weighing its potential benefits against the risks it poses to society, privacy, and individual rights.

To navigate the complex landscape of AI, it’s essential to first understand the foundational concepts of AI ethics. Ethics in AI encompasses a range of moral considerations that guide the development and deployment of these technologies. Think of it as the compass that helps innovators steer clear of potential pitfalls. As we dive deeper into this topic, we find ourselves wrestling with questions like: What responsibilities do developers have? How do we ensure fairness in AI decision-making? And how can we protect individual rights while embracing innovation? These questions are crucial for responsible innovation, and they require thoughtful consideration.

Despite the ethical dilemmas, the potential benefits of AI are staggering. Imagine a world where healthcare is revolutionized, education is personalized, and transportation is efficient. AI can drive significant advancements across various sectors, enhancing efficiency and improving quality of life for many people. For instance, in healthcare, AI systems can analyze vast amounts of data to identify patterns that humans might miss, leading to better patient outcomes. In education, AI can tailor learning experiences to individual needs, ensuring that every student gets the attention they deserve.

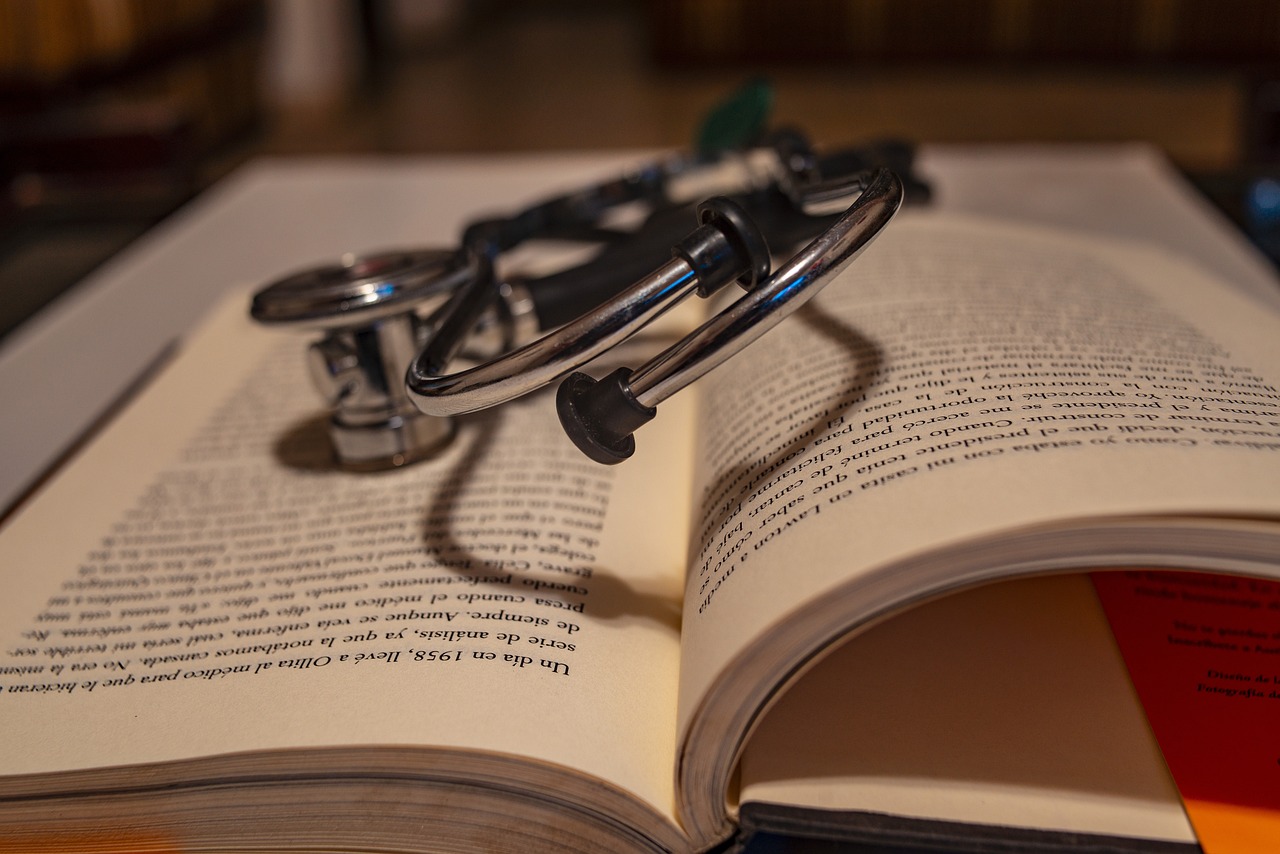

The integration of AI in healthcare is particularly promising. With AI, we can expect improved diagnostics, personalized treatment plans, and more efficient patient care. Imagine walking into a clinic where an AI system quickly analyzes your medical history and symptoms to suggest the best treatment options. This technology has the potential to revolutionize the way we approach health and wellness, making healthcare more accessible and effective for everyone.

However, with great power comes great responsibility. The use of AI in healthcare raises pressing data privacy concerns. Sensitive patient information is at risk of misuse or breaches, necessitating strict protocols to protect it. It’s like giving someone the keys to your house; you want to ensure they’re responsible enough to keep it safe. Without stringent measures in place, the very technology meant to help us could end up compromising our privacy.

Moreover, the ethical implications of using AI in treatment decisions cannot be overlooked. Questions about accountability and bias arise, especially when algorithms are involved in critical health decisions. What happens if an AI system makes a mistake? Who is responsible? The potential for dehumanization in patient care is another concern, as relying too heavily on machines could strip away the personal touch that is so vital in healthcare.

In education, AI promises personalized learning experiences that cater to each student's needs. Imagine a classroom where every student learns at their own pace, receiving tailored resources and support. AI can provide educators with valuable insights into student performance, helping them identify areas where students struggle and allowing for timely intervention. This technology has the power to create a more inclusive and effective educational environment.

While AI offers numerous advantages, it also poses significant risks that we must confront head-on. Issues like job displacement, algorithmic bias, and the potential for misuse in surveillance and warfare are just the tip of the iceberg. As we embrace AI, we must also be vigilant about its darker implications.

One of the most pressing concerns is algorithmic bias, which can lead to unfair treatment of individuals based on race, gender, or socioeconomic status. It's like programming a robot with a skewed perspective; the outcomes can be detrimental. This highlights the need for diverse data sets and ethical oversight in AI development to ensure fairness and equity.

Another significant risk is job displacement. The automation of jobs through AI technology raises concerns about unemployment and the economic impact on workers. It’s essential to have discussions about workforce retraining and adaptation to prepare for a future where AI plays a larger role in the workplace. Just as the Industrial Revolution transformed labor, AI is poised to do the same, and we must be ready.

Given these risks, establishing comprehensive regulatory frameworks is essential to ensure that AI technologies are developed and deployed responsibly. Balancing innovation with ethical considerations and public safety is crucial. Without proper regulations, we risk creating a chaotic environment where the benefits of AI are overshadowed by its dangers.

The development of global standards for AI ethics can facilitate international cooperation and ensure that AI technologies align with human rights and ethical principles. It’s a collaborative effort that requires input from various stakeholders, including governments, tech companies, and civil society.

Countries are beginning to implement national regulations to govern AI usage, focusing on accountability, transparency, and safeguarding citizens' rights in an increasingly automated world. These regulations are vital to ensure that as we move forward with AI, we do so with a strong ethical foundation.

- What is AI ethics? AI ethics refers to the moral considerations that guide the development and use of artificial intelligence technologies.

- What are the benefits of AI? AI can improve efficiency and quality of life in sectors like healthcare, education, and transportation.

- What are the risks associated with AI? Risks include job displacement, algorithmic bias, and privacy concerns.

- How can we regulate AI? Establishing comprehensive regulatory frameworks and global standards can help ensure responsible AI development.

Understanding AI Ethics

When we dive into the world of artificial intelligence (AI), it’s essential to grasp the concept of AI ethics. This field examines the moral implications of creating and utilizing AI technologies. Think of it as the compass guiding us through the complex landscape of innovation, ensuring that our advancements align with our societal values. Just as a ship needs a navigator to avoid treacherous waters, we need ethical guidelines to steer AI development in a direction that benefits humanity.

At its core, AI ethics grapples with questions that are as profound as they are challenging. For example, how do we ensure that AI systems treat all individuals fairly? What happens when algorithms make decisions that significantly impact lives? These inquiries are not just academic; they resonate with real-world implications. As AI systems become more integrated into our daily lives, understanding these ethical considerations becomes crucial for responsible innovation.

One of the foundational concepts in AI ethics is the idea of accountability. When an AI makes a mistake, such as misdiagnosing a patient or misidentifying a suspect, who is responsible? Is it the developer who created the algorithm, the company that deployed it, or the AI itself? Establishing clear lines of accountability is vital to ensure that individuals and organizations take responsibility for the outcomes of their AI systems.

Another critical aspect is transparency. In a world where algorithms dictate decisions, it’s imperative that we understand how these systems work. Imagine trying to solve a puzzle without knowing what the picture looks like; that’s how users feel when they engage with opaque AI systems. By promoting transparency, we can foster trust between AI technologies and the people they serve. This involves not only clear communication about how AI systems function but also making the data they rely on accessible for scrutiny.
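To make transparency a little more concrete, here is a minimal Python sketch of one simple approach: a scoring model whose output breaks down into per-input contributions, so the people affected can see what drove a decision. It assumes a plain linear score; the feature names, weights, and the loan-screening framing are hypothetical, chosen only for illustration. More complex models need dedicated explanation techniques, and those explanations are approximations rather than exact breakdowns like this one.

```python
# Hypothetical weights for an illustrative loan-screening score (not from any real system).
WEIGHTS = {"income_k": 0.04, "debt_ratio": -2.5, "years_employed": 0.15}
BIAS = -1.0


def score_with_explanation(applicant: dict) -> tuple[float, list[tuple[str, float]]]:
    """Return a linear score plus each feature's contribution, largest effect first."""
    contributions = [(name, weight * applicant[name]) for name, weight in WEIGHTS.items()]
    score = BIAS + sum(value for _, value in contributions)
    # Sort by absolute size so the explanation leads with what mattered most.
    contributions.sort(key=lambda item: abs(item[1]), reverse=True)
    return score, contributions


applicant = {"income_k": 50, "debt_ratio": 0.4, "years_employed": 4}
score, reasons = score_with_explanation(applicant)
print(f"score = {score:.2f}")
for name, value in reasons:
    print(f"  {name}: {value:+.2f}")
```

Because every contribution is visible, the applicant can see that the debt ratio pulled the score down while income pushed it up, which is the kind of clear communication transparency calls for.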

Moreover, the concept of bias in AI cannot be overlooked. Algorithms are only as good as the data they’re trained on. If that data reflects societal biases—whether related to race, gender, or socioeconomic status—the AI will perpetuate those biases. This is a significant ethical concern that demands diverse data sets and rigorous testing to ensure fairness. For instance, if an AI system is trained predominantly on data from one demographic, it may fail to accurately serve or represent others.

In summary, understanding AI ethics is not just about adhering to a set of rules; it’s about fostering a culture of responsibility, accountability, and fairness in the development and deployment of AI technologies. As we continue to innovate, we must remain vigilant and proactive in addressing these ethical challenges. By doing so, we can harness the full potential of AI while safeguarding our shared values and rights.

Potential Benefits of AI

Artificial intelligence is a transformative force with the potential to reshape our world in profound ways. Imagine a future where machines can analyze vast amounts of data in seconds, uncovering insights that humans might overlook. This is not science fiction; this is the promise of AI. From enhancing healthcare to revolutionizing education and optimizing transportation, the benefits of AI are as exciting as they are impactful.

In healthcare, AI is making waves with its ability to improve diagnostics and create personalized treatment plans. For instance, AI algorithms can analyze certain types of medical images with high accuracy, in some studies matching or even outperforming human radiologists. This can mean faster diagnoses and better outcomes for patients. Furthermore, AI can sift through electronic health records to identify patterns, enabling healthcare providers to tailor treatments to individual patient needs. Imagine a world where your treatment is uniquely designed for you, based on a comprehensive analysis of your health data!

But the benefits of AI don't stop at healthcare. In education, AI is paving the way for personalized learning experiences. Students can learn at their own pace, with AI systems adapting to their progress and to the concepts they find difficult. This individualized approach can help students grasp complex concepts more effectively and boost their confidence. Educators benefit too, as these systems provide valuable insights into student performance, allowing teachers to focus their efforts where they are needed most.

Moreover, AI is revolutionizing transportation. With the advent of autonomous vehicles, we are on the brink of a new era in how we travel. These vehicles are designed to reduce accidents caused by human error, optimize traffic flow, and decrease emissions by driving more efficiently. Imagine hopping into a self-driving car that knows the best route to avoid traffic jams while you relax or catch up on work! The potential for AI to enhance our daily commutes is nothing short of remarkable.

However, it’s essential to acknowledge that the integration of AI into these sectors comes with its own set of challenges. For instance, while AI can enhance efficiency, it also raises questions about data privacy and the ethical implications of its use. As we embrace the benefits, we must also ensure that we are implementing strict protocols to protect sensitive information. This includes safeguarding personal data in healthcare and ensuring that educational AI tools respect student privacy.

In summary, the potential benefits of AI are vast and varied. From improving healthcare outcomes and personalizing education to revolutionizing transportation systems, the advantages are undeniable. Yet, as we journey into this new frontier, it's crucial to strike a balance between innovation and ethical responsibility. Only then can we truly harness the power of AI for the greater good.

AI in Healthcare

Artificial intelligence is proving to be a transformative force in healthcare. Imagine a world where doctors have supercharged assistants that can analyze mountains of data in seconds, helping them make better decisions for their patients. That’s precisely what AI brings to the table! With its ability to process vast amounts of information quickly and accurately, AI can lead to improved diagnostics, personalized treatment plans, and more efficient patient care.

One of the most significant benefits of AI in healthcare is its role in diagnostics. AI algorithms can analyze medical images, such as X-rays or MRIs, and for some well-defined tasks they match or exceed the precision of human specialists. This can speed up the diagnostic process and reduce the chances of human error. For example, a recent study showed that AI systems could identify certain types of cancers earlier than traditional methods, potentially saving lives and reducing treatment costs.

Furthermore, AI can help create personalized treatment plans by analyzing a patient’s genetic information, lifestyle choices, and medical history. This means treatments can be tailored specifically to the individual, rather than adopting a one-size-fits-all approach. Imagine having a treatment plan that considers your unique genetic makeup! This level of customization can lead to better outcomes and a higher quality of life for patients.

However, with great power comes great responsibility. As we integrate AI into healthcare, we must also address the pressing issue of data privacy. The sensitive nature of health information necessitates strict protocols to protect patient data from misuse or breaches. For instance, healthcare providers must ensure that AI systems comply with laws like HIPAA in the United States, which mandates the protection of patient information. Without proper safeguards, the very technology designed to improve healthcare could inadvertently compromise patient privacy.

Moreover, the ethical implications of using AI in treatment decisions raise important questions. Who is accountable when an AI makes a mistake in diagnosis or treatment? Is it the healthcare provider, the software developer, or the institution? These questions highlight the need for clear guidelines and ethical oversight in the deployment of AI technologies in healthcare settings.

In summary, while AI holds immense potential to revolutionize healthcare by improving diagnostics and personalizing treatment, it also brings challenges that must be addressed. The balance between innovation and ethical responsibility is crucial in ensuring that AI serves the best interests of patients while safeguarding their rights and privacy.

- What are the main benefits of AI in healthcare? AI improves diagnostics, personalizes treatment plans, and increases efficiency in patient care.

- How does AI enhance diagnostic accuracy? AI algorithms can analyze medical images and data quickly and, for some tasks, as accurately as or more accurately than human practitioners, which can reduce errors.

- What are the privacy concerns associated with AI in healthcare? AI systems handle sensitive patient data, which requires strict protocols to protect it from breaches.

- Who is responsible if AI makes a mistake in treatment? This raises ethical questions about accountability, which need to be addressed through clear guidelines.

Data Privacy Concerns

As we plunge deeper into the realm of artificial intelligence, one of the most pressing issues we face is data privacy. With AI systems increasingly handling sensitive information, the stakes have never been higher. Imagine a world where your medical history, financial records, and personal communications are all accessible to algorithms designed to learn and adapt. It's a double-edged sword; while AI can enhance our lives, it also raises significant concerns about how our data is collected, stored, and utilized.

When AI is integrated into healthcare, for instance, it relies heavily on vast amounts of data to function effectively. This data often includes personal identifiers that, if mishandled, could lead to severe privacy breaches. The question arises: how do we ensure that our private information remains protected in an era where data is the new gold? The answer lies in establishing robust protocols that govern data usage. These protocols should encompass:

- Consent: Patients must be informed about how their data will be used and must give explicit permission.

- Data Anonymization: Techniques to remove personally identifiable information from datasets should be standard practice.

- Access Control: Only authorized personnel should have access to sensitive data, minimizing the risk of unauthorized use.
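The three protocols above can be sketched together in a few lines of Python. This is a minimal illustration under stated assumptions, not a compliance recipe: the field names, the hypothetical `ROLE_PERMISSIONS` table, and the secret key are all invented for the example. Note that replacing an ID with a keyed hash is pseudonymization rather than true anonymization; the remaining fields, such as age band and diagnosis, can still help re-identify someone, so real deployments need further safeguards.

```python
import hashlib
import hmac

# Hypothetical key for illustration; a real system would load it from a secrets manager.
PSEUDONYM_KEY = b"example-secret-key"

# Fields treated as direct identifiers in this sketch (an assumption, not a legal list).
DIRECT_IDENTIFIERS = {"name", "email", "phone", "address"}

# Hypothetical access-control table: the purposes each role may access data for.
ROLE_PERMISSIONS = {
    "clinician": {"treatment", "analysis"},
    "researcher": {"analysis"},
}


def pseudonymize(record: dict) -> dict:
    """Drop direct identifiers, replace the ID with a keyed hash, and coarsen age."""
    out = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    digest = hmac.new(PSEUDONYM_KEY, str(record["patient_id"]).encode(), hashlib.sha256)
    out["patient_id"] = digest.hexdigest()[:16]  # same patient -> same token
    if "age" in out:
        low = (out["age"] // 10) * 10
        out["age"] = f"{low}-{low + 9}"  # e.g. 47 -> "40-49"
    return out


def fetch_for_analysis(records: list[dict], role: str) -> list[dict]:
    """Apply access control, then consent, then pseudonymization."""
    if "analysis" not in ROLE_PERMISSIONS.get(role, set()):
        raise PermissionError(f"role {role!r} may not use patient data for analysis")
    # A missing consent flag counts as "no consent".
    consented = [r for r in records if r.get("consented") is True]
    return [pseudonymize(r) for r in consented]


# Hypothetical records.
records = [
    {"patient_id": 101, "name": "A. Patient", "email": "a@example.org",
     "age": 47, "diagnosis": "asthma", "consented": True},
    {"patient_id": 102, "name": "B. Patient", "age": 62,
     "diagnosis": "diabetes", "consented": False},
]
print(fetch_for_analysis(records, "researcher"))  # one row, no name or email
```

The order of the checks is a deliberate design choice in this sketch: an unauthorized role is rejected before any record is touched, and unconsented records never reach the pseudonymization step at all.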

Moreover, the ethical implications of AI in treatment decisions cannot be overlooked. If an AI system recommends a treatment based on data that includes biases or inaccuracies, it could lead to inappropriate care. This situation raises the question of accountability: who is responsible when an AI's recommendation results in harm? Is it the developers, the healthcare providers, or the AI itself? These dilemmas highlight the need for transparent algorithms and ethical oversight to ensure that AI operates within a framework that prioritizes patient safety and privacy.

As we navigate these challenges, it's crucial to foster a culture of accountability and transparency in AI development. By doing so, we can build trust with the public and pave the way for innovations that respect individual rights while maximizing the benefits AI has to offer. The conversation around data privacy is not just a technical issue; it’s a societal one that requires input from various stakeholders, including technologists, ethicists, and everyday citizens.

- What are the main data privacy concerns related to AI? The main concerns include unauthorized access to personal data, misuse of sensitive information, and lack of transparency in data handling practices.

- How can individuals protect their data in an AI-driven world? Individuals can protect their data by being cautious about sharing personal information, using privacy settings on platforms, and advocating for stronger data protection regulations.

- What role do regulations play in ensuring data privacy? Regulations are essential for establishing guidelines on data collection, usage, and protection, ensuring that companies adhere to ethical standards and prioritize consumer privacy.

Ethical AI in Treatment Decisions

When we think about artificial intelligence in healthcare, the conversation often steers towards the impressive capabilities it brings to diagnostics and treatment. However, we must also confront the ethical dilemmas that arise when AI systems are involved in making treatment decisions. Imagine a world where a machine, not a human, determines the best course of action for your health. Sounds futuristic, right? But this is the reality we are heading towards, and it raises some critical questions about accountability, bias, and even the potential for dehumanization in patient care.

One of the most pressing concerns is accountability. If an AI system makes a mistake—say, it recommends a treatment that leads to adverse effects—who is responsible? The healthcare provider who relied on the AI's recommendation? The developers of the AI? Or perhaps the institution that implemented the system? Establishing clear lines of accountability is essential, as it ensures that patients can seek recourse when things go wrong. This complexity is reminiscent of a tangled web; each thread represents a different stakeholder, and pulling on one can affect the entire structure.
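One practical building block for accountability is a decision log that records which system made a recommendation and which person acted on it. The Python sketch below is hypothetical: the `DecisionRecord` fields, the model name, and the reviewer ID are assumptions for illustration, not a standard schema. It stores a SHA-256 digest of the inputs so a later review can confirm which inputs were used without copying patient data into the log. The inputs themselves would still need to be kept securely elsewhere, and a digest of low-variety data can sometimes be guessed, so this is not a privacy guarantee on its own.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class DecisionRecord:
    """One auditable entry tying an AI recommendation to the people who acted on it."""
    model_name: str      # which system produced the recommendation
    model_version: str   # exact release, so a fault can be traced to a version
    input_digest: str    # SHA-256 of the canonicalized inputs, not the raw data
    recommendation: str
    reviewed_by: str     # clinician who accepted or overrode the recommendation
    accepted: bool
    timestamp: str       # UTC, ISO 8601


def log_decision(model_name: str, model_version: str, inputs: dict,
                 recommendation: str, reviewer: str, accepted: bool) -> DecisionRecord:
    # sort_keys makes the digest independent of dictionary key order.
    canonical = json.dumps(inputs, sort_keys=True).encode("utf-8")
    return DecisionRecord(
        model_name=model_name,
        model_version=model_version,
        input_digest=hashlib.sha256(canonical).hexdigest(),
        recommendation=recommendation,
        reviewed_by=reviewer,
        accepted=accepted,
        timestamp=datetime.now(timezone.utc).isoformat(),
    )


def to_log_line(record: DecisionRecord) -> str:
    """Serialize one record as a JSON line for an append-only log."""
    return json.dumps(asdict(record), sort_keys=True)


# Hypothetical example: a clinician reviews and accepts a triage suggestion.
entry = log_decision("triage-model", "2.3.1", {"age": 58, "symptom": "chest pain"},
                     "refer to cardiology", reviewer="dr_lee", accepted=True)
print(to_log_line(entry))
```

With records like these, the question "who is responsible?" at least has a factual starting point: which model version, which inputs, and which clinician signed off.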

Moreover, we must consider the issue of bias in AI algorithms. Algorithms are only as good as the data fed into them. If the data is skewed or unrepresentative, the AI may perpetuate existing inequalities in healthcare. For instance, if an AI system is trained primarily on data from one demographic group, it may not perform well for others, leading to subpar treatment recommendations. This phenomenon is not just a technical glitch; it has real-world implications for patient care, potentially exacerbating health disparities. To combat this, it is crucial to ensure that diverse and representative data sets are used in training AI systems.
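A simple first check for this kind of bias is to measure a model's performance separately for each group instead of only overall. The Python sketch below computes recall (the share of real cases the model catches) per group. The choice of recall, the group labels, and the data are hypothetical; a real evaluation would look at several metrics and much larger samples.

```python
from collections import defaultdict


def recall_by_group(y_true, y_pred, groups):
    """Share of actual positive cases the model flagged, computed per group."""
    positives = defaultdict(int)
    caught = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 1:
            positives[group] += 1
            if pred == 1:
                caught[group] += 1
    return {group: caught[group] / positives[group] for group in positives}


# Hypothetical results: every patient here truly has the condition.
y_true = [1] * 12
y_pred = [1, 1, 1, 1, 1, 1, 1, 0,   # group A: 7 of 8 caught
          1, 1, 0, 0]               # group B: 2 of 4 caught
groups = ["A"] * 8 + ["B"] * 4

overall = sum(y_pred) / len(y_pred)  # valid as recall only because every case is positive
per_group = recall_by_group(y_true, y_pred, groups)
print(f"overall recall: {overall:.2f}")
print(per_group)
```

An overall recall of 0.75 looks acceptable, yet the model misses half of group B's cases; that gap is exactly what an aggregate number hides.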

Another significant concern is the risk of dehumanization. When AI systems take over critical decision-making processes, there is a danger that patients may feel like mere data points rather than human beings with unique needs and emotions. The relationship between a patient and a healthcare provider is built on trust and empathy, qualities that machines inherently lack. As we integrate AI into treatment decisions, we must strive to maintain the human touch in healthcare, ensuring that technology complements rather than replaces the essential qualities of care.

In summary, while AI holds tremendous potential to enhance treatment decisions, we must tread carefully. The ethical implications are profound and multifaceted. We need to ask ourselves: How do we balance the benefits of AI with the necessity of ethical considerations? The answer lies in fostering a collaborative approach that includes healthcare professionals, ethicists, and technologists working together to create guidelines that prioritize patient welfare and uphold ethical standards. Only then can we harness the power of AI while safeguarding the dignity and rights of every patient.

- What are the main ethical concerns regarding AI in healthcare? The primary concerns include accountability, bias in algorithms, and the potential for dehumanization in patient care.

- How can we ensure AI systems are fair and unbiased? By using diverse and representative data sets for training AI systems and implementing rigorous oversight during development.

- What role do healthcare providers play in AI decision-making? Healthcare providers need to work collaboratively with AI systems, ensuring they maintain a human touch in patient care while leveraging AI's capabilities.

AI in Education

Imagine a classroom where every student learns at their own pace, where teachers have the tools to understand each child's unique needs, and where education is tailored to foster individual strengths. This is not just a dream; it's becoming a reality with the integration of artificial intelligence (AI) in education. AI technologies are transforming traditional learning environments into dynamic spaces that can adapt to the diverse requirements of students.

One of the most exciting aspects of AI in education is its ability to provide personalized learning experiences. With AI algorithms analyzing data from student interactions, educational platforms can create customized learning paths. This means that students who struggle with certain concepts can receive additional resources and support, while those who excel can be challenged with more advanced material. It's like having a personal tutor available 24/7, guiding each student through their educational journey.
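Here is a minimal Python sketch of how such a customized path might be chosen. It assumes a very simple rule: keep a per-topic mastery estimate as an exponential moving average of recent answers, then route the student to review, practice, or advanced material using hypothetical thresholds. Real platforms typically use richer student models (Bayesian Knowledge Tracing and item response theory are two well-known families), so treat this as an illustration of the idea, not of any particular product.

```python
def update_mastery(mastery: float, correct: bool, rate: float = 0.3) -> float:
    """Blend the latest answer into the running estimate; the result stays in [0, 1]."""
    return (1 - rate) * mastery + rate * (1.0 if correct else 0.0)


def next_material(mastery: float, low: float = 0.4, high: float = 0.8) -> str:
    """Pick the next kind of material from hypothetical mastery thresholds."""
    if mastery < low:
        return "review"
    if mastery > high:
        return "advanced"
    return "practice"


def plan(mastery_by_topic: dict[str, float]) -> dict[str, str]:
    """Choose next material independently for each topic."""
    return {topic: next_material(m) for topic, m in mastery_by_topic.items()}


# Hypothetical student: starts every topic at 0.5 and answers a few questions.
answers = {"fractions": [False, False], "geometry": [True, True, True, True]}
mastery = {}
for topic, results in answers.items():
    m = 0.5
    for correct in results:
        m = update_mastery(m, correct)
    mastery[topic] = m

print(plan(mastery))
```

The moving average weights recent answers more heavily, so a student who was struggling but has started answering correctly moves out of review fairly quickly.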

Furthermore, AI can assist educators by offering valuable insights into student performance. By analyzing data trends, teachers can identify which areas students are excelling in and which ones may require more attention. This data-driven approach allows for timely interventions, ensuring that no student falls through the cracks. Imagine a teacher being able to pinpoint exactly when a student is struggling with a topic and intervening before it becomes a larger issue.
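The kind of early-warning signal described above can be sketched in a few lines of Python. The rule and its thresholds are hypothetical: a student is flagged if the average of their last three scores is below 60, or has dropped by 10 or more points compared with their earlier work. A flag is meant as a prompt for the teacher to take a closer look, not as a verdict on the student.

```python
from statistics import mean


def flag_struggling(scores_by_student: dict[str, list[float]], window: int = 3,
                    floor: float = 60.0, drop: float = 10.0) -> list[str]:
    """Return students whose recent scores are low or have fallen sharply."""
    flagged = []
    for student, scores in scores_by_student.items():
        if len(scores) < window:
            continue  # not enough evidence yet
        recent = mean(scores[-window:])
        earlier = scores[:-window]
        fell_sharply = bool(earlier) and mean(earlier) - recent >= drop
        if recent < floor or fell_sharply:
            flagged.append(student)
    return sorted(flagged)


# Hypothetical quiz scores (out of 100), oldest first.
scores = {
    "ana": [85, 88, 90, 87, 89],   # steady
    "ben": [82, 80, 70, 66, 65],   # falling: earlier average 81, recent 67
    "cal": [55, 58, 57],           # consistently below the floor
    "dee": [70, 72],               # too few scores to judge
}
print(flag_struggling(scores))
```

Comparing each student with their own earlier work catches Ben, whose scores are still passing but falling, which a single class-wide cutoff would miss.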

However, while the benefits of AI in education are substantial, we must also consider the ethical implications of its use. The reliance on algorithms for personalized learning raises questions about data privacy and security. Schools must implement strict protocols to protect sensitive student information from potential breaches. Additionally, there is a risk of over-reliance on technology, which could lead to a decrease in human interaction and the development of social skills among students.

To address these concerns, it is essential for educational institutions to establish a framework that prioritizes ethical AI usage. This includes ensuring that AI systems are transparent and that students and parents are informed about how their data is being used. By fostering an environment of trust and accountability, we can harness the power of AI to enhance education without compromising the values that are fundamental to teaching and learning.

In conclusion, the integration of AI in education holds tremendous potential to revolutionize the way we teach and learn. By providing personalized experiences and valuable insights, AI can empower both students and educators. However, it is crucial to navigate the associated ethical challenges carefully. As we embrace this technological advancement, let’s ensure that we do so in a way that prioritizes the well-being and development of our students.

- How does AI personalize learning? AI analyzes student data to create tailored learning experiences, adapting content to meet individual needs.

- What are the privacy concerns related to AI in education? The use of AI requires strict data protection measures to safeguard sensitive student information from breaches.

- Can AI replace teachers? While AI can assist in providing personalized learning, it cannot replace the essential human interaction and mentorship that teachers provide.

- How can schools ensure ethical AI use? Schools should establish clear guidelines for data use, transparency, and accountability in AI applications.

Risks Associated with AI

While the allure of artificial intelligence is undeniable, we cannot ignore the shadows it casts. The rapid advancement of AI technologies brings with it a host of **significant risks** that can affect individuals and society at large. One of the most pressing concerns is **job displacement**. As machines become more capable of performing tasks traditionally done by humans, many workers find themselves facing the threat of unemployment. Just imagine waking up one day to find that your job has been automated—it's a reality that many industries are grappling with, from manufacturing to service sectors.

Another critical risk is **algorithmic bias**. AI systems are only as good as the data they are trained on. If that data is flawed or unrepresentative, the algorithms can perpetuate and even amplify existing biases. For instance, facial recognition technologies have been shown to have higher error rates for some racial and gender groups than for others. This not only raises ethical concerns but can also lead to **unfair treatment** of individuals based on race, gender, or socioeconomic status. The implications are profound, as biased algorithms can influence hiring practices, law enforcement, and even loan approvals.

Furthermore, the potential for **misuse of AI** in surveillance and warfare is alarming. In an age where privacy is already a significant concern, the deployment of AI technologies in surveillance can lead to a society where individuals are constantly monitored. This could result in a chilling effect on free expression and dissent. Similarly, the use of AI in military applications raises ethical questions about accountability and the potential for autonomous weapons systems to make life-and-death decisions without human intervention.

To illustrate these risks more clearly, consider the following table that summarizes the primary risks associated with AI:

| Risk | Description |
|---|---|
| Job Displacement | Automation of jobs leading to unemployment and economic instability for workers. |
| Algorithmic Bias | Unfair treatment of individuals due to flawed data sets, leading to discriminatory outcomes. |
| Surveillance | Increased monitoring of individuals, threatening privacy and freedom of expression. |
| Autonomous Weapons | Ethical concerns surrounding AI in military applications and decision-making processes. |

As we navigate the complexities of AI, it is crucial to have open discussions about these risks. We must ask ourselves: how do we balance the benefits of AI with the potential dangers? What safeguards can we put in place to ensure that technology serves humanity rather than undermines it? By engaging in these conversations, we can work towards a future where AI is developed responsibly and ethically, minimizing risks while maximizing rewards.

Q: What is algorithmic bias?

A: Algorithmic bias occurs when an AI system produces results that are systematically prejudiced due to flawed data or assumptions made during the algorithm's development.

Q: How can AI lead to job displacement?

A: AI can automate tasks that were previously performed by humans, which can result in job losses in certain sectors, particularly in roles that involve repetitive or predictable tasks.

Q: What are the ethical concerns surrounding AI in warfare?

A: The use of AI in military applications raises questions about accountability, the potential for autonomous weapons to make life-or-death decisions, and the moral implications of removing human judgment from such critical processes.

Algorithmic Bias

Algorithmic bias is a critical issue that has gained significant attention in recent years, especially as artificial intelligence (AI) systems become more integrated into our daily lives. At its core, algorithmic bias refers to the systematic and unfair discrimination that can occur when AI systems make decisions based on flawed or biased data. Imagine a world where a computer program decides who gets a job, who receives a loan, or even who gets healthcare based on historical data that may reflect societal prejudices. This scenario is not just a dystopian fantasy; it is a reality that we must confront as we increasingly rely on AI.

One of the main reasons algorithmic bias exists is the data used to train AI models. If the data reflects existing inequalities, whether based on race, gender, or socioeconomic status, the AI will likely perpetuate these biases. For example, if a hiring algorithm is trained on historical hiring data that disproportionately favors one demographic, it may continue to favor that demographic in future hiring decisions, further entrenching systemic inequalities. This can lead to a cycle of discrimination that is difficult to break.
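A toy Python example shows how this can happen even when a protected attribute is left out of the model entirely. The "model" below is deliberately naive: it learns the historical hire rate for each value of a single feature. The data, the `region` feature, and the assumption that region correlates with a demographic group are all hypothetical.

```python
from collections import Counter


def fit_rate_model(history: list[dict], feature: str) -> dict[str, float]:
    """Learn the historical hire rate for each value of one feature."""
    seen, hired = Counter(), Counter()
    for row in history:
        seen[row[feature]] += 1
        hired[row[feature]] += row["hired"]
    return {value: hired[value] / seen[value] for value in seen}


# Hypothetical past decisions. Assume most "north" applicants came from one
# demographic group and most "south" applicants from another, and that the
# south group was historically hired less often for reasons unrelated to merit.
history = (
    [{"region": "north", "hired": 1}] * 6 + [{"region": "north", "hired": 0}] * 4
    + [{"region": "south", "hired": 1}] * 2 + [{"region": "south", "hired": 0}] * 8
)

model = fit_rate_model(history, "region")

# Two new, equally qualified candidates who differ only in region.
for candidate in ({"region": "north"}, {"region": "south"}):
    print(candidate["region"], model[candidate["region"]])
```

The model never sees the demographic attribute, yet it scores the south candidate three times lower, carrying the old disparity forward through a proxy.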

To visualize the impact of algorithmic bias, consider the following table that highlights different sectors where bias can manifest and its potential consequences:

| Sector | Potential Bias | Consequences |
|---|---|---|
| Hiring | Gender and racial bias in candidate selection | Reinforcement of workforce homogeneity |
| Healthcare | Disparities in treatment recommendations | Worsening health outcomes for marginalized groups |
| Law Enforcement | Discriminatory profiling based on historical crime data | Increased surveillance and criminalization of specific communities |

To combat algorithmic bias, it is essential to adopt a multi-faceted approach. This includes:

- Diverse Data Sets: Ensuring that the data used to train AI systems is representative of the entire population can help mitigate bias.

- Transparency: Developers should be transparent about how algorithms are created and the data they use, allowing for scrutiny and accountability.

- Regular Audits: Conducting regular audits of AI systems can help identify and address biases that may arise over time.
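A regular audit can start with something as simple as comparing selection rates across groups. The Python sketch below computes each group's selection rate and the ratio of the lowest rate to the highest, then flags the result if that ratio falls under 0.8, a threshold borrowed from the "four-fifths rule" used as a screening heuristic in US employment-selection guidelines. The data are hypothetical, and a low ratio is a signal to investigate, not proof of discrimination on its own.

```python
def selection_rates(decisions: list[tuple[str, bool]]) -> dict[str, float]:
    """Selection rate per group from (group, was_selected) pairs."""
    totals: dict[str, int] = {}
    chosen: dict[str, int] = {}
    for group, selected in decisions:
        totals[group] = totals.get(group, 0) + 1
        chosen[group] = chosen.get(group, 0) + int(selected)
    return {group: chosen[group] / totals[group] for group in totals}


def audit(decisions: list[tuple[str, bool]], threshold: float = 0.8) -> dict:
    """Compare the lowest and highest group selection rates."""
    rates = selection_rates(decisions)
    highest = max(rates.values())
    # If nobody was selected at all, there is no disparity in rates to report.
    ratio = 1.0 if highest == 0 else min(rates.values()) / highest
    return {"rates": rates, "impact_ratio": ratio, "flagged": ratio < threshold}


# Hypothetical screening outcomes: 50 applicants per group.
decisions = [("A", i < 20) for i in range(50)] + [("B", i < 12) for i in range(50)]
report = audit(decisions)
print(report)
```

Here group B is selected at 24% against group A's 40%, an impact ratio of 0.6, so the audit flags it for a closer look. Running the same check on every new batch of decisions is what turns a one-off test into a regular audit.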

Ultimately, addressing algorithmic bias is not just about improving AI technology; it’s about safeguarding our values as a society. As we navigate the complexities of AI, we must prioritize fairness and equity to ensure that technology serves everyone, not just a select few. The stakes are high, and we cannot afford to ignore the implications of biased algorithms on our lives and communities.

- What is algorithmic bias? Algorithmic bias refers to the unfair discrimination that occurs when AI systems make decisions based on flawed or biased data.

- How does algorithmic bias occur? It often arises from training data that reflects existing societal inequalities, leading AI to replicate these biases in its decision-making processes.

- What are the consequences of algorithmic bias? Consequences can include unfair hiring practices, disparities in healthcare, and discriminatory law enforcement practices, among others.

- How can we mitigate algorithmic bias? Mitigation strategies include using diverse data sets, ensuring transparency in AI development, and conducting regular audits of AI systems.

Job Displacement

The rise of artificial intelligence (AI) is a double-edged sword, offering remarkable advancements while simultaneously posing serious challenges, particularly in the realm of employment. As AI technologies become more sophisticated, the risk of job displacement grows, leaving many workers feeling anxious about their future. Imagine a world where machines can perform tasks more efficiently than humans—this is not just a sci-fi fantasy; it’s becoming our reality. The automation of jobs across various sectors means that certain roles may become obsolete, leading to significant upheaval in the job market.

Consider the manufacturing industry, where robots have already taken over many repetitive tasks on assembly lines. This shift has increased productivity and reduced costs for companies, but it has also contributed to a decline in traditional manufacturing jobs. A 2019 report by Oxford Economics estimated that up to 20 million manufacturing jobs worldwide could be lost to robots by 2030. This statistic is alarming, but it is only one part of the story.

Job displacement isn't limited to blue-collar jobs. White-collar professions, such as accounting, legal services, and even some aspects of healthcare, are also at risk. AI systems can analyze vast amounts of data and, for some narrow tasks, reach decisions faster and more accurately than humans, which raises concerns about the future of jobs that rely heavily on data analysis and routine decision-making. The picture is not entirely bleak, however: in its Future of Jobs Report 2020, the World Economic Forum estimated that by 2025, 85 million jobs may be displaced while 97 million new roles could emerge, highlighting the need for workforce retraining and adaptability.

To navigate this shifting landscape, it’s crucial for workers to be proactive. Here are some strategies to consider:

- Upskilling: Learning new skills that are in demand can help workers stay relevant. Online courses and workshops can provide opportunities to learn about AI, data analysis, and other emerging fields.

- Career Transitioning: Exploring careers that are less likely to be automated can provide a safety net. Fields such as healthcare, education, and creative industries often require a human touch that machines cannot replicate.

- Networking: Building connections within industries can open doors to new opportunities and provide insights into job trends.

It’s worth noting that while job displacement is a significant concern, it also presents an opportunity for society to rethink the nature of work. As we embrace AI, the focus could shift from merely performing tasks to enhancing creativity, critical thinking, and emotional intelligence—skills that machines struggle to replicate. This evolution may lead to more fulfilling and meaningful work for individuals.

In conclusion, while AI holds the potential for job displacement, it also offers a chance for innovation and growth. By embracing change and investing in personal development, workers can not only survive but thrive in this new era. The key is to stay informed and adaptable, ensuring that the workforce evolves alongside technological advancements.

- What is job displacement?

Job displacement occurs when workers lose their jobs due to changes in technology, market conditions, or other factors, often leading to a need for retraining or transitioning to new roles.

- Which jobs are most at risk of being displaced by AI?

Jobs that involve repetitive tasks, data analysis, and routine decision-making are most at risk. Manufacturing, accounting, and certain healthcare roles may be significantly affected.

- What can workers do to prepare for potential job displacement?

Workers can upskill, explore new career paths, and network within their industries to stay informed about job trends and opportunities.

Regulatory Frameworks for AI

As artificial intelligence (AI) continues to evolve and integrate into various aspects of our daily lives, the importance of establishing robust regulatory frameworks cannot be overstated. These frameworks are essential for ensuring that AI technologies are developed and deployed in a manner that prioritizes ethical considerations, public safety, and individual rights. Without such regulations, we risk allowing unchecked AI growth, which could lead to harmful consequences for society.

One of the primary goals of regulatory frameworks is to strike a balance between fostering innovation and protecting the public. This means creating policies that encourage technological advancement while simultaneously addressing potential risks associated with AI. For instance, regulations can help mitigate issues like algorithmic bias, privacy violations, and the ethical implications of autonomous decision-making systems. By establishing clear guidelines, stakeholders can work together to ensure that AI serves the greater good.

In recent years, various countries and organizations have begun to recognize the need for comprehensive AI regulations. Some have initiated discussions on global standards that would facilitate international cooperation. This is crucial because AI technologies often transcend borders, making it imperative for nations to align their regulatory approaches. Such collaboration can help ensure that AI development adheres to fundamental human rights and ethical principles, regardless of where the technology is created or implemented.

Moreover, many nations are taking proactive steps to implement national regulations tailored to their specific contexts. These regulations often focus on key areas such as:

- Accountability: Who is responsible when an AI system causes harm or makes a mistake?

- Transparency: How can we ensure that AI systems are understandable and their decisions can be explained?

- Data Protection: What measures are in place to safeguard citizens’ personal information?

Jurisdictions such as the United States, the European Union, and China are leading the charge in developing their own AI regulatory frameworks. For example, the EU's Artificial Intelligence Act, proposed in 2021 and adopted in 2024, categorizes AI applications by risk level and imposes stricter requirements on high-risk applications. This proactive approach aims to create a safer environment for AI deployment while fostering public trust in these technologies.

In addition to national regulations, it is essential to involve various stakeholders in the regulatory process, including tech companies, ethicists, and civil society. This collaborative approach ensures that a wide range of perspectives is considered, ultimately leading to more comprehensive and effective regulations. By engaging in open dialogue, we can address concerns about AI's impact on society and develop solutions that benefit everyone.

In conclusion, as AI technologies continue to advance at a rapid pace, establishing regulatory frameworks is not just a necessity; it is a responsibility. By prioritizing ethical considerations and public safety, we can harness the power of AI while minimizing its risks. The future of AI is bright, but it requires careful navigation through the complexities of ethics and regulation.

- What are regulatory frameworks for AI?

Regulatory frameworks for AI are sets of guidelines and rules designed to govern the development, deployment, and use of artificial intelligence technologies, ensuring ethical practices and public safety.

- Why are regulatory frameworks important?

They are important because they help mitigate risks associated with AI, such as bias, privacy violations, and accountability issues, while fostering innovation and public trust.

- How do global standards for AI work?

Global standards for AI aim to create uniform guidelines that can be adopted by different countries, promoting international cooperation and aligning AI technologies with human rights and ethical principles.

Global Standards

As we navigate the complex landscape of artificial intelligence, the establishment of global standards becomes increasingly vital. These standards serve as a framework to ensure that AI technologies are developed and implemented in a way that respects human rights and ethical principles. Imagine a world where AI operates like a well-tuned orchestra, with every instrument playing in harmony, rather than a chaotic cacophony. This analogy highlights the importance of having a coordinated approach to AI ethics across various countries and cultures.

Global standards can facilitate international cooperation and provide a common language for stakeholders involved in AI development. Such standards can encompass a variety of aspects, including:

- Transparency: Ensuring that AI systems operate in a way that is understandable and accessible to users.

- Accountability: Establishing clear lines of responsibility for AI-driven decisions and actions.

- Fairness: Promoting the use of unbiased algorithms to prevent discrimination based on race, gender, or socioeconomic status.

- Privacy: Protecting personal data and ensuring that individuals have control over their information.

By aligning on these principles, countries can work together to mitigate the risks associated with AI while maximizing its benefits. To illustrate the potential impact of global standards, consider the following table that outlines key areas where such standards could be applied:

| Area | Potential Impact | Examples of Standards |
|---|---|---|
| Healthcare | Improved patient outcomes and data security | Data encryption protocols, patient consent guidelines |
| Education | Enhanced learning experiences and equitable access | Personalization standards, accessibility guidelines |
| Employment | Fair labor practices and job security | Retraining programs, anti-discrimination policies |

However, the journey toward establishing these global standards is fraught with challenges. Different countries have varying cultural norms, legal frameworks, and economic priorities, which can complicate the process. It's essential for policymakers, industry leaders, and ethicists to engage in ongoing dialogue to create standards that are not only comprehensive but also flexible enough to adapt to emerging technologies.

In conclusion, the creation of global standards for AI ethics is not just a lofty ideal; it is a necessary step toward a future where AI can coexist with humanity in a responsible and ethical manner. By fostering international collaboration and commitment to these standards, we can ensure that AI serves as a tool for good, enhancing our lives while safeguarding our rights.

- What are global standards in AI? Global standards in AI refer to a set of guidelines and principles that ensure the ethical development and deployment of artificial intelligence technologies across different countries.

- Why are global standards important? They are crucial for promoting transparency, accountability, fairness, and privacy in AI applications, helping to mitigate risks and enhance the benefits of AI.

- Who develops these global standards? Global standards are typically developed through collaboration among governments, industry leaders, and ethical organizations, often involving public consultations and expert input.

National Regulations

As the world grapples with the rapid advancement of artificial intelligence, national regulations are becoming increasingly crucial. Countries are recognizing the need to establish frameworks that govern the use of AI technologies, ensuring they align with ethical standards and protect citizens' rights. These regulations aim to create a balance between fostering innovation and safeguarding public interests, which is no small feat in our fast-paced technological landscape.

One of the primary focuses of national regulations is accountability. Governments are working to ensure that AI developers and companies are held responsible for the outcomes of their technologies. This includes establishing clear guidelines on liability in cases where AI systems cause harm or make biased decisions. It's essential that those who create and deploy AI systems are aware of the implications their technologies might have on society.

Another critical aspect of national regulations is transparency. AI systems often operate as black boxes, making it difficult for users to understand how decisions are made. Regulations are being designed to mandate transparency in AI algorithms, requiring companies to disclose how their systems function and the data they utilize. This transparency is vital for building trust with the public, as individuals need to feel confident that AI technologies are used ethically and responsibly.

Moreover, citizen protection is a significant concern. With the rise of AI in various sectors, there is a growing need to ensure that individuals' rights are not infringed upon. This includes protecting personal data from misuse and ensuring that AI does not perpetuate discrimination or inequality. National regulations are beginning to address these issues by implementing strict data protection laws and guidelines for ethical AI usage.

To illustrate the landscape of national regulations, consider the following table that highlights some countries' approaches to AI governance:

| Country | Regulation Focus | Key Features |
|---|---|---|
| United States | Accountability & Innovation | Emphasis on innovation with voluntary guidelines for AI ethics. |
| European Union | Data Protection & Transparency | Strict regulations on data privacy and mandatory transparency in AI algorithms. |
| China | Surveillance & Control | Focus on using AI for state surveillance and social control mechanisms. |
| Canada | Human Rights & Ethics | Development of ethical guidelines to protect citizens' rights in AI applications. |

As we can see, the approaches to AI regulation vary significantly from one country to another. Some nations prioritize innovation and economic growth, while others focus on protecting individual rights and ensuring ethical use of technology. This disparity highlights the need for international cooperation and dialogue to develop a cohesive framework that addresses the global implications of AI.

In conclusion, national regulations are a vital step toward ensuring that AI technologies are developed and implemented responsibly. As these regulations evolve, they must adapt to the changing landscape of technology while prioritizing the well-being of society. The challenge lies in finding the right balance between fostering innovation and protecting individual rights, but with concerted efforts from governments, developers, and the public, it is a challenge that can be met.

- What are the main goals of national AI regulations? The primary goals include ensuring accountability, transparency, and protecting citizens' rights.

- How do different countries approach AI regulation? Countries vary in their focus, with some prioritizing innovation and others emphasizing ethical considerations and human rights.

- Why is transparency important in AI? Transparency builds trust with the public and allows users to understand how AI systems make decisions.

Frequently Asked Questions

- What are the main ethical concerns surrounding AI?

The primary ethical concerns include issues of data privacy, algorithmic bias, and the potential for job displacement. As AI systems make decisions that can affect people's lives, ensuring fairness and accountability is crucial.

- How can AI benefit the healthcare sector?

AI can revolutionize healthcare by providing improved diagnostics, creating personalized treatment plans, and enhancing the overall efficiency of patient care. This can lead to better health outcomes and a more streamlined healthcare system.

- What is algorithmic bias and why is it a problem?

Algorithmic bias occurs when AI systems produce unfair outcomes based on race, gender, or socioeconomic status. This can lead to discrimination and perpetuate existing inequalities, highlighting the need for diverse data sets and ethical oversight.

- How does AI impact job displacement?

The automation of tasks through AI can lead to job displacement, raising concerns about unemployment and economic stability. It's essential to discuss strategies for workforce retraining and adaptation to new job markets.

- What regulations are being put in place for AI?

Countries are beginning to implement national regulations focused on accountability, transparency, and protecting citizens' rights. Additionally, there is a push for global standards to ensure ethical AI development across borders.

- Can AI be trusted with sensitive data?

Trusting AI with sensitive data hinges on strict data privacy protocols and robust security measures. It's vital to ensure that AI systems are designed to protect personal information from misuse or breaches.

- What role does AI play in education?

AI enhances education by offering personalized learning experiences, allowing students to learn at their own pace. It also provides educators with valuable insights into student performance, helping tailor instruction to individual needs.