The Confluence of AI & Ethics

In today's fast-paced digital world, the intersection of artificial intelligence (AI) and ethics has become a hot topic, sparking conversations that are both necessary and urgent. As AI technologies rapidly evolve, they bring with them a host of ethical dilemmas that society must confront. Imagine a world where machines not only assist us but also make decisions that could impact our lives in profound ways. With such power comes a great responsibility, and it's crucial to examine the moral frameworks that should guide the development and deployment of these technologies.

AI is not just about algorithms and data; it’s about the implications of those algorithms on human lives. The decisions made by AI systems can affect everything from hiring practices to law enforcement, healthcare, and beyond. As we embrace the potential of AI to transform industries and enhance our daily lives, we must also grapple with the ethical considerations that accompany these advancements. What happens when an AI system makes a mistake? Who is to blame when bias creeps into an algorithm, leading to unfair treatment of individuals? These questions are not merely academic; they touch on the very fabric of our society.

As we delve deeper into this confluence of AI and ethics, we must recognize that ethical AI is not just a technical challenge but a societal one. It requires collaboration among technologists, ethicists, policymakers, and the public. The development of AI technologies should be accompanied by rigorous ethical standards that ensure fairness, accountability, and transparency. This is not just about preventing harm; it’s about actively promoting good and ensuring that the benefits of AI are distributed equitably across all segments of society.

Furthermore, as we stand on the brink of an AI-driven future, we must remain vigilant. The rapid pace of innovation can outstrip our ability to understand its implications and to regulate the technology accordingly. Thus, ongoing dialogue and engagement with diverse stakeholders are essential to navigate this complex landscape. The future of AI ethics is not predetermined; it is shaped by our collective choices and actions today.

- What is AI ethics? AI ethics refers to the moral principles guiding the development and use of artificial intelligence, ensuring that these technologies are used responsibly and equitably.

- Why is bias in AI a concern? Bias in AI can lead to unfair outcomes, perpetuating discrimination and inequality, which is why addressing it is crucial for creating equitable systems.

- How can we ensure accountability in AI? Establishing clear legal frameworks and corporate responsibility is essential for ensuring that the people and organizations behind AI systems are held accountable for the outcomes those systems produce.

- What role does privacy play in AI ethics? Privacy is a significant concern as AI technologies often involve surveillance and data collection, making robust privacy protections essential.

Understanding AI Ethics

AI ethics refers to the set of moral principles and guidelines that govern the development and application of artificial intelligence technologies. As these technologies become increasingly integrated into our daily lives, understanding the ethical implications is essential. Just like the compass that guides a ship through stormy seas, AI ethics helps navigate the murky waters of innovation and responsibility. The rapid evolution of AI brings forth questions that challenge our traditional notions of morality and accountability. What happens when machines make decisions that impact human lives? Who is responsible when an algorithm fails?

At its core, AI ethics seeks to ensure that artificial intelligence serves humanity positively, promoting fairness, transparency, and accountability. The foundational concepts of AI ethics revolve around several key principles:

- Fairness: Ensuring that AI systems do not perpetuate existing biases or create new forms of discrimination.

- Transparency: Making AI processes understandable and accessible to users, allowing them to see how decisions are made.

- Accountability: Establishing clear lines of responsibility for the outcomes produced by AI systems.

- Privacy: Protecting individuals' personal data and ensuring that AI does not infringe on their rights.

The importance of these principles cannot be overstated. They serve as the bedrock of responsible AI development, guiding engineers, policymakers, and stakeholders in creating systems that align with societal values. As we stand on the brink of an AI-driven future, the need for ethical considerations becomes more pressing. Imagine a world where AI systems make decisions about healthcare, criminal justice, or employment without oversight. The potential for harm is significant, and thus, ethical frameworks must be established and adhered to.

Moreover, as AI technologies continue to evolve, the conversation around ethics must also adapt. New challenges will emerge, requiring continuous dialogue and engagement among technologists, ethicists, and the public. This is not just a technical issue; it's a societal one. Engaging diverse voices in discussions about AI ethics ensures that the resulting technologies reflect the values and needs of all members of society. In essence, AI ethics is not just about preventing harm; it's about fostering trust and ensuring that AI serves as a tool for good.

Key Ethical Concerns

As we dive deeper into the world of artificial intelligence, it's crucial to spotlight the key ethical concerns that accompany this rapidly evolving technology. While AI has the potential to revolutionize industries and improve our daily lives, it also presents a myriad of challenges that we must confront head-on. These concerns primarily revolve around bias, privacy, accountability, and transparency. Understanding these issues is not just important; it's essential for ensuring that AI is developed and deployed responsibly.

First and foremost, let's talk about bias. Bias in AI systems can lead to unfair outcomes that disproportionately affect certain groups, perpetuating existing inequalities. Imagine a hiring algorithm that favors candidates from a specific demographic simply because it was trained on biased data. This not only undermines the principles of fairness but also raises serious ethical questions about who gets access to opportunities. Bias can manifest in various forms, from racial and gender biases to socioeconomic disparities. Addressing these biases is paramount, as failing to do so can result in AI technologies that reinforce discrimination rather than eliminate it.

Next up is the issue of privacy. With AI's increasing capabilities in data collection and analysis, the potential for invasive surveillance is a growing concern. Think about how your smartphone tracks your movements or how social media algorithms analyze your behavior. This raises significant ethical questions: How much of our personal information are we willing to sacrifice for convenience? Are we aware of who is watching us and how our data is being used? The implications of AI-driven surveillance can be profound, necessitating robust privacy protections to safeguard individual rights.

Then we have accountability. When AI systems make decisions that lead to harm, who is held responsible? Is it the developers, the companies, or the AI itself? Establishing clear lines of accountability is vital for ethical governance. Without it, we risk creating a legal and moral vacuum where no one feels responsible for the consequences of AI actions. This is where legal frameworks come into play, as they can help define responsibility and ensure that victims have avenues for redress.

Lastly, we must consider the importance of transparency. For AI to be trusted, its workings must be transparent. This means that stakeholders should understand how AI systems make decisions and what data they rely on. The lack of transparency can lead to mistrust and skepticism, which can hinder the adoption of beneficial AI technologies. Imagine trying to navigate a maze without knowing the layout; it would be frustrating and disorienting, right? That's how many people feel about opaque AI systems. Therefore, fostering transparency is essential for building trust among users and stakeholders alike.

In summary, the key ethical concerns surrounding AI—bias, privacy, accountability, and transparency—are interwoven and must be addressed collectively. As we continue to integrate AI into our lives, it's our responsibility to ensure that these ethical considerations are at the forefront of development and deployment strategies. After all, the goal should not only be to advance technology but to do so in a way that respects human dignity and promotes social good.

- What is AI ethics? AI ethics refers to the moral principles that guide the development and use of artificial intelligence, ensuring that technologies are created and utilized in a way that is fair, transparent, and accountable.

- Why is bias in AI a concern? Bias in AI can lead to unfair treatment of individuals or groups, perpetuating discrimination and inequality, which is why it's essential to identify and mitigate these biases in AI systems.

- How can we ensure accountability in AI? Establishing clear legal frameworks and corporate responsibility can help define accountability in AI systems, ensuring that those affected by AI decisions have avenues for recourse.

- What role does transparency play in AI? Transparency is crucial for building trust in AI systems, as it allows users to understand how decisions are made and what data is used, ultimately fostering a more ethical AI landscape.

Bias in AI Systems

Bias in artificial intelligence (AI) systems is a pressing issue that can lead to unfair outcomes and discrimination. Imagine a world where decisions that significantly impact our lives, such as hiring, lending, and law enforcement, are made by algorithms that harbor biases. It’s a bit like letting a biased referee officiate a game; the fairness of the outcome is compromised. AI systems learn from data, and if that data reflects societal biases, the AI will tend to replicate those biases in its decision-making unless they are detected and corrected. This raises a crucial question: how can we ensure that AI serves as a tool for equality rather than a perpetuator of existing inequalities?

One of the most alarming aspects of bias in AI is its ability to affect marginalized communities disproportionately. For instance, facial recognition technologies have been shown to misidentify individuals from certain racial backgrounds at a higher rate than others. This not only leads to wrongful accusations but also fosters a sense of mistrust in technology, which should ideally enhance our lives. Addressing bias in AI systems is not just a technical challenge; it’s a moral imperative. We must ask ourselves: what kind of future do we want to build with AI?

To tackle bias, we first need to understand its sources. Bias can creep into AI systems through various avenues:

- Data Selection: If the data used to train AI models is unrepresentative or flawed, the AI will learn and propagate those biases.

- Algorithm Design: The way algorithms are structured can inadvertently favor certain groups over others.

- Human Influence: Human biases can seep into AI systems through subjective decisions made during the development process.

Recognizing these sources is the first step towards mitigation. But how do we actually address these biases? There are several effective strategies that can help:

- Diverse Data Sets: Incorporating a wide range of data that reflects different demographics can help create a more balanced AI.

- Inclusive Design Practices: Engaging with diverse teams during the design and testing phases can lead to more equitable outcomes.

- Regular Audits: Conducting periodic assessments of AI systems can help identify and rectify biases that may have developed over time.
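As a rough illustration of what a regular audit might compute, the sketch below compares how often each group receives a favorable decision. The group labels, the sample data, and the function names `selection_rates` and `disparate_impact_ratio` are all hypothetical, and what counts as an acceptable ratio is a policy choice that this sketch does not settle.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the share of favorable decisions per group.

    `decisions` is an iterable of (group, selected) pairs, where
    `selected` is True when the system made a favorable decision.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, selected in decisions:
        totals[group] += 1
        if selected:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest to the highest selection rate (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a favorable decision
    return min(rates.values()) / highest


# Hypothetical audit sample: (group label, was the candidate shortlisted?)
sample = (
    [("group_a", True)] * 6 + [("group_a", False)] * 4
    + [("group_b", True)] * 3 + [("group_b", False)] * 7
)
rates = selection_rates(sample)
ratio = disparate_impact_ratio(rates)
print(rates, ratio)
```

A ratio well below 1.0 does not prove discrimination on its own, but it is a signal that the system deserves a closer look.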

In conclusion, addressing bias in AI systems is not just about improving technology; it's about ensuring that technology serves humanity in a fair and just manner. As we continue to integrate AI into various aspects of our lives, we must remain vigilant and proactive in addressing these ethical challenges. The future of AI should be one where fairness and equality are at the forefront, allowing us to harness the full potential of artificial intelligence without compromising our moral values.

Sources of Bias

Understanding the sources of bias in artificial intelligence is crucial for developing fair and equitable systems. Bias can creep into AI in various ways, often originating from the data used to train these systems. Imagine trying to bake a cake with stale ingredients; the end product will inevitably suffer. Similarly, if the data fed into AI algorithms is flawed or unrepresentative, the outcomes can be skewed. Here are some of the primary sources of bias:

- Data Selection: The choice of data used to train AI models is one of the most significant sources of bias. If the data is not diverse or inclusive, the AI will learn from a narrow perspective, which can lead to unfair outcomes. For example, facial recognition systems trained predominantly on images of lighter-skinned individuals may struggle to accurately identify individuals with darker skin tones.

- Algorithm Design: The algorithms themselves can introduce bias. If the mathematical models used to process data are flawed or biased in their assumptions, the AI's decisions will reflect those biases. This is akin to having a biased referee in a sports game; the outcome will unfairly favor one team over another.

- Human Influence: Humans play a critical role in AI development, from curating training data to designing algorithms. If the developers hold biases—conscious or unconscious—these can be inadvertently coded into the AI systems. This human element is often overlooked but is vital in understanding how bias manifests in AI.

To illustrate, consider a recent study that examined hiring algorithms. It found that if the training data consisted mostly of resumes from male candidates, the AI would likely favor male candidates in its recommendations. This not only perpetuates existing inequalities but also stifles diversity in workplaces. Thus, identifying these sources of bias is the first step toward mitigation.

Addressing bias requires a multifaceted approach. By recognizing where bias originates, developers can implement strategies to counteract these influences. For instance, employing a diverse team to create AI systems can help in recognizing and correcting inherent biases. Furthermore, utilizing robust data collection methods that ensure representation from various demographics can significantly improve the fairness of AI outcomes.
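One simple way to check representation, sketched here under stated assumptions, is to compare each group's share of the training data with its share of a reference population. The group labels, the 80/20 split, and the reference shares below are invented for illustration, and `representation_gaps` is a hypothetical helper rather than part of any library.

```python
from collections import Counter


def representation_gaps(sample_groups, reference_shares):
    """Compare group shares in a data set against reference shares.

    Returns {group: sample_share - reference_share}. A negative value
    means the group is underrepresented relative to the reference.
    """
    counts = Counter(sample_groups)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("sample_groups must not be empty")
    return {
        group: counts.get(group, 0) / total - share
        for group, share in reference_shares.items()
    }


# Hypothetical training set of 100 resumes and a hypothetical applicant pool.
training_groups = ["men"] * 80 + ["women"] * 20
reference = {"men": 0.5, "women": 0.5}
gaps = representation_gaps(training_groups, reference)
print(gaps)
```

Choosing the reference population is itself a judgment call, so the result is best read as a prompt for discussion rather than a verdict.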

In conclusion, the sources of bias in AI are deeply rooted in data selection, algorithm design, and human influence. By understanding these sources, we can take meaningful steps towards creating more equitable AI technologies. Remember, just like in any recipe, the quality of the ingredients will ultimately determine the success of the final dish.

- What is AI bias? AI bias refers to the systematic and unfair discrimination that can occur when AI algorithms produce results that are prejudiced due to flawed data or design.

- How can we mitigate AI bias? Mitigation strategies include using diverse and representative data sets, engaging diverse teams in AI development, and continuously testing and auditing AI systems for bias.

- Why is understanding sources of bias important? Understanding the sources of bias helps developers create fairer AI systems and ensures that the technology benefits all users, reducing the risk of discrimination.

Mitigation Strategies

When it comes to addressing bias in AI systems, the journey towards equity and fairness is a continuous process rather than a destination, and it involves multiple strategies and proactive measures. To effectively mitigate bias, developers and organizations must adopt a multifaceted approach that encompasses diverse data sets, inclusive design practices, and ongoing evaluation. One of the most impactful strategies is the use of diverse data sets. By ensuring that the data used to train AI models represents a wide range of demographics, experiences, and perspectives, we can significantly reduce the risk of biased outcomes. Imagine trying to bake a cake with half the ingredients missing: the result will fall flat. Similarly, an AI model trained on limited data will produce skewed results.

In addition to diverse data, inclusive design practices are crucial. This means involving a variety of stakeholders in the design process, including those from underrepresented communities. By incorporating their insights and feedback, developers can create AI systems that are more attuned to the needs and concerns of all users. Think of it like assembling a puzzle; each piece represents a unique viewpoint, and only when they are all included can the full picture emerge.

Another effective strategy is the implementation of ongoing evaluation and monitoring of AI systems post-deployment. This involves regularly auditing algorithms to identify and address any emerging biases. Organizations should establish a system of checks and balances, much like a safety net, to catch potential issues before they escalate. By employing techniques such as algorithmic auditing, organizations can critically assess how their AI systems are performing and whether they are inadvertently perpetuating bias.
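The post-deployment monitoring described above could look roughly like the following sketch, which compares false positive rates across groups in a decision log. The log format, the group names, the `max_gap` threshold of 0.1, and both function names are assumptions made for illustration; a real audit would likely track several metrics.

```python
def false_positive_rates(records):
    """Compute the false positive rate per group.

    `records` is an iterable of (group, predicted, actual) tuples with
    boolean values. Groups with no actual negatives are omitted because
    the rate is undefined for them.
    """
    false_positives = {}
    negatives = {}
    for group, predicted, actual in records:
        if not actual:
            negatives[group] = negatives.get(group, 0) + 1
            if predicted:
                false_positives[group] = false_positives.get(group, 0) + 1
    return {g: false_positives.get(g, 0) / n for g, n in negatives.items()}


def gap_exceeds(rates, max_gap):
    """Return True when the spread between groups is larger than `max_gap`."""
    if not rates:
        return False
    return max(rates.values()) - min(rates.values()) > max_gap


# Hypothetical post-deployment log: (group, flagged by the model?, truly positive?)
log = (
    [("group_a", True, False)] * 1 + [("group_a", False, False)] * 9
    + [("group_b", True, False)] * 4 + [("group_b", False, False)] * 6
    + [("group_b", True, True)] * 5
)
fp_rates = false_positive_rates(log)
needs_review = gap_exceeds(fp_rates, max_gap=0.1)
print(fp_rates, needs_review)
```

Running a check like this on a schedule is one way to turn "regular auditing" into a concrete habit.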

Furthermore, fostering a culture of transparency within organizations is essential. When developers openly share their methodologies, data sources, and decision-making processes, it encourages accountability and trust. This transparency can be enhanced through the use of detailed documentation and public reporting, which not only informs stakeholders but also invites constructive feedback from the community.

Lastly, education and training play a pivotal role in bias mitigation strategies. By equipping AI practitioners with knowledge about the ethical implications of their work and the potential for bias, organizations can cultivate a workforce that is more conscientious about the impact of their decisions. Workshops, seminars, and ongoing professional development can serve as valuable tools in this educational endeavor.

In summary, addressing bias in AI requires a comprehensive approach that includes diverse data sets, inclusive design practices, ongoing evaluation, transparency, and education. By implementing these strategies, we can move towards a future where AI technologies serve all members of society equitably, ensuring that they enhance rather than hinder our collective progress.

- What is AI bias?

AI bias refers to systematic and unfair discrimination in AI systems, often resulting from biased data or flawed algorithms.

- How can bias in AI be identified?

Bias can be identified through audits, testing, and monitoring the outcomes of AI systems against diverse benchmarks.

- Why is diversity in data important?

Diverse data helps ensure that AI models perform fairly across different demographics and do not reinforce existing inequalities.

- What role does transparency play in AI ethics?

Transparency fosters trust and accountability, allowing stakeholders to understand how AI systems operate and make decisions.

Privacy and Surveillance

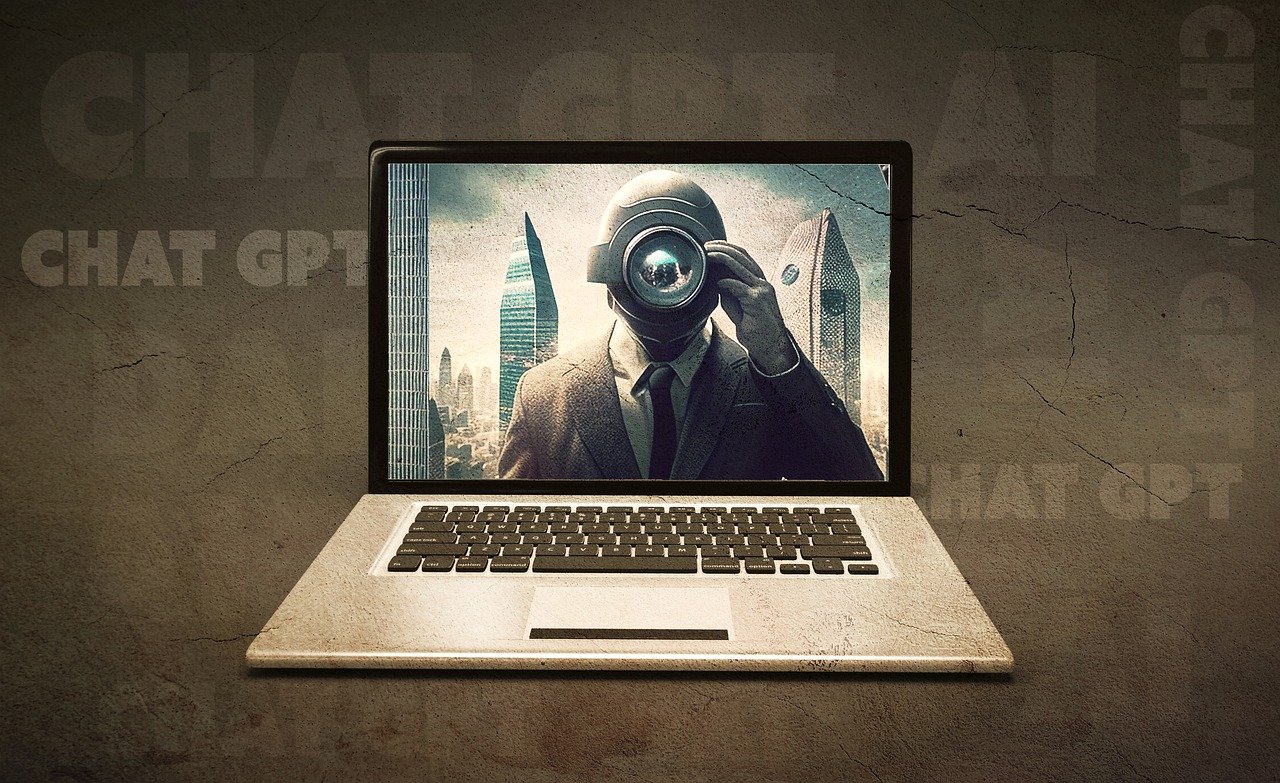

The rapid advancement of artificial intelligence has led to an increased capability for surveillance, raising significant ethical questions about privacy. In a world where AI can analyze vast amounts of data in real-time, the line between security and personal privacy becomes increasingly blurred. It’s like living in a glass house; while you may enjoy the transparency, you also expose every aspect of your life to the outside world. This reality prompts us to ask: at what cost do we prioritize safety over privacy?

AI-driven surveillance technologies, such as facial recognition and predictive policing, have the potential to enhance public safety but also pose serious risks to individual freedoms. For instance, the use of facial recognition software by law enforcement agencies can lead to wrongful arrests and a disproportionate impact on marginalized communities. Imagine being wrongly identified as a suspect simply because the algorithm misinterpreted your features. This scenario underscores the urgent need for robust privacy protections.

Moreover, the collection of personal data by AI systems often occurs without meaningful consent. Many users agree to data collection through vague privacy agreements without understanding what they are accepting; such agreements can be as confusing as a legal document written in ancient Greek. This lack of transparency not only undermines individual privacy rights but also erodes public trust in technology. As we navigate this complex landscape, it’s crucial to establish clear guidelines that prioritize user consent and data protection.

To better understand the implications of AI on privacy, consider the following key points:

- Data Collection: AI systems often require extensive data to function effectively, which raises concerns about how this data is collected and used.

- Surveillance State: The potential for governments to misuse AI for mass surveillance can lead to authoritarian practices that infringe on civil liberties.

- Informed Consent: Users must be aware of how their data is being used and should have the right to opt out of data collection.
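To make the consent point concrete, here is a minimal sketch of a data pipeline step that only passes along records whose owners agreed to the use in question and have not opted out. The `UserRecord` fields and the `usable_for_training` function are hypothetical; real consent management involves much more, such as purpose limitation and records of when consent was given.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UserRecord:
    user_id: str
    consented_to_training: bool  # hypothetical flag captured at sign-up
    opted_out: bool = False      # hypothetical flag set by a later request


def usable_for_training(records):
    """Keep only records whose owners consented and have not opted out."""
    return [r for r in records if r.consented_to_training and not r.opted_out]


records = [
    UserRecord("u1", consented_to_training=True),
    UserRecord("u2", consented_to_training=False),
    UserRecord("u3", consented_to_training=True, opted_out=True),
]
allowed = usable_for_training(records)
print([r.user_id for r in allowed])
```

The default here is exclusion: a record is used only when consent is explicitly present, which mirrors the opt-in spirit of the points above.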

As we look to the future, it is essential to strike a balance between leveraging AI for societal benefits and safeguarding individual privacy rights. This balance requires collaboration among technologists, ethicists, lawmakers, and the public. Without such collaboration, we risk creating a society where surveillance becomes the norm, and privacy is a relic of the past.

Q1: What are the main ethical concerns regarding AI and privacy?

A1: The primary concerns include data collection without consent, the potential for misuse of surveillance technologies, and the erosion of individual privacy rights.

Q2: How can individuals protect their privacy in an AI-driven world?

A2: Individuals can protect their privacy by being cautious about the data they share, reading privacy agreements, and using privacy-focused technologies.

Q3: What role do governments play in regulating AI surveillance?

A3: Governments are responsible for creating laws and regulations that protect citizens' rights while ensuring that AI technologies are used ethically and transparently.

Accountability in AI

As we delve into the realm of artificial intelligence, the question of accountability looms large. Who is responsible when an AI system goes awry? Is it the developer, the user, or perhaps the AI itself? These questions are not just philosophical musings; they are critical considerations that shape the ethical landscape of AI technology. As AI systems become increasingly autonomous, the need for clear lines of accountability becomes paramount. Without accountability, we risk creating a technological Wild West where the consequences of AI actions are left unaddressed.

The complexities of accountability in AI are further compounded by the rapid pace of technological advancement. Traditional legal frameworks often struggle to keep up with the innovations and capabilities of AI. For instance, if an AI system makes a decision that leads to harm—be it financial loss, physical injury, or violation of privacy—determining who is at fault can be a convoluted process. This is particularly challenging when decisions are made based on algorithms that are not easily interpretable, leading to what is known as the “black box” problem.

To address these challenges, several key aspects of accountability in AI must be considered:

- Transparency: AI systems should be designed with transparency in mind. Stakeholders need to understand how decisions are made, which requires clear documentation of algorithms and data sources.

- Regulatory Oversight: Governments and regulatory bodies must establish guidelines that define accountability standards for AI technologies, ensuring that developers adhere to ethical practices.

- Corporate Responsibility: Companies must take ownership of their AI products, implementing ethical considerations at every stage of development and deployment.

Legal frameworks are crucial in navigating the murky waters of AI accountability. Current laws often fall short, as they were not designed with AI technologies in mind. New regulations could include provisions that explicitly address AI's unique challenges. For example, laws could require companies to conduct impact assessments before deploying AI systems, ensuring that potential harms are identified and mitigated in advance.

Moreover, corporate responsibility plays a significant role in fostering trust and accountability in AI deployment. Companies must prioritize ethical practices and be transparent about their AI systems' capabilities and limitations. This not only builds consumer trust but also encourages a culture of accountability within the organization. By adopting ethical AI guidelines, corporations can lead the way in establishing a framework that others might follow.

In summary, accountability in AI is a multifaceted issue that requires collaboration among developers, users, regulators, and corporations. As AI technologies continue to evolve, establishing clear accountability mechanisms will be essential to ensure that these systems operate ethically and responsibly. With proactive measures and a commitment to transparency, we can create an environment where AI technologies benefit society while minimizing risks.

- What is AI accountability? AI accountability refers to the responsibility of individuals and organizations to ensure that AI systems operate ethically and transparently, and to take responsibility for the outcomes of their use.

- Why is accountability important in AI? Accountability is crucial in AI to prevent misuse, ensure ethical use, and protect individuals from harm caused by AI decisions.

- How can accountability be enforced in AI? Accountability can be enforced through regulatory frameworks, corporate policies, and transparency measures that outline responsibilities and consequences for AI-related actions.

Legal Frameworks

When it comes to the ethical governance of artificial intelligence, establishing legal frameworks is not just important; it’s imperative. These frameworks serve as the backbone for regulating AI technologies and ensuring that they operate within the bounds of societal norms and ethical standards. As AI continues to evolve, so too must the laws that govern its use, creating a dynamic interplay between technology and regulation.

One of the primary challenges in creating effective legal frameworks for AI is the rapid pace at which technology is advancing. Laws often lag behind innovations, leaving gaps that can be exploited or that fail to protect individuals adequately. For instance, the General Data Protection Regulation (GDPR) in Europe has set a precedent for data privacy, but its applicability to AI technologies is still being debated. This regulation emphasizes the importance of transparency and consent, which are crucial in an age where AI systems often operate as "black boxes."

Furthermore, the question of accountability is paramount. Who is responsible when an AI system makes a mistake? Is it the developer, the user, or the organization that deployed it? These are complex questions that require careful consideration and clear legal definitions. Current laws often do not address these nuances, leading to uncertainty and potential injustice. For example, if an autonomous vehicle causes an accident, determining liability can be a convoluted process involving multiple parties.

To tackle these issues, several countries and organizations are working on developing comprehensive legal frameworks. Here are some key elements that are often discussed:

- Accountability Mechanisms: Establishing who is responsible for AI decisions and outcomes.

- Transparency Requirements: Mandating that AI systems be explainable and understandable to users.

- Data Protection Regulations: Ensuring that personal data is handled in compliance with privacy laws.

- Ethical Guidelines: Creating standards for ethical AI development and deployment.

In addition to these elements, international cooperation is essential. AI technologies are not confined by borders, and their implications can have global consequences. Therefore, collaborative efforts among nations to establish unified legal standards could help mitigate risks and enhance accountability. Organizations like the United Nations and the European Union are already taking steps in this direction, advocating for international agreements that address the ethical use of AI.

As we look to the future, it's clear that the legal frameworks surrounding AI must be adaptable and forward-thinking. They should not only respond to current challenges but also anticipate future developments in technology. This proactive approach will help ensure that AI serves humanity positively and ethically, fostering trust and safety in a world increasingly influenced by artificial intelligence.

- What are the main goals of AI legal frameworks?

The primary goals include ensuring accountability, protecting personal data, promoting transparency, and establishing ethical guidelines for AI development.

- How do current laws address AI technologies?

Current laws often focus on data protection and privacy, but many do not adequately address the complexities of AI decision-making and accountability.

- Why is international cooperation important for AI regulation?

AI technologies operate globally, and international cooperation can help create consistent standards that protect individuals and promote ethical practices across borders.

Corporate Responsibility

In the rapidly evolving landscape of artificial intelligence, corporate responsibility has emerged as a critical pillar for ensuring that AI technologies are developed and deployed ethically. Companies at the forefront of AI innovation must recognize that their actions have profound implications not only for their bottom line but also for society at large. It's no longer just about creating cutting-edge technology; it's about doing so in a way that respects human rights, promotes fairness, and fosters trust.

One of the key aspects of corporate responsibility in AI is acknowledging the impact of bias in algorithms. When organizations prioritize profit over ethics, they risk perpetuating harmful stereotypes and discriminatory practices. For instance, if a tech company uses biased training data, the AI systems it develops can inadvertently discriminate against marginalized groups. Thus, companies must actively work to ensure that their AI models are fair and equitable. This involves implementing rigorous testing and validation processes to identify and mitigate any biases present in their systems.

Furthermore, transparency is another cornerstone of corporate responsibility. Organizations should openly communicate how their AI systems work, what data is being used, and how decisions are made. This transparency not only builds trust with consumers but also allows for greater scrutiny from external stakeholders, including regulators and advocacy groups. By being transparent, companies can demonstrate their commitment to ethical practices and accountability.

Another important consideration is the privacy of individuals. As AI technologies often rely on vast amounts of data, ensuring that this data is collected, stored, and used responsibly is paramount. Companies must implement robust data protection measures and respect user privacy rights. This means obtaining informed consent, anonymizing data where possible, and being clear about how data will be utilized. Failure to protect user privacy can result in significant backlash and damage to a company's reputation.
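One small piece of this can be sketched in code: replacing direct identifiers with keyed tokens before data reaches an analytics or training pipeline. The field names, the sample record, and the demo key below are hypothetical. Note that this is pseudonymization rather than full anonymization, since anyone holding the key can recompute the tokens and the remaining fields may still identify a person.

```python
import hashlib
import hmac


def pseudonymize(identifier: str, secret_key: bytes) -> str:
    """Derive a stable token from an identifier using HMAC-SHA256."""
    return hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def strip_direct_identifiers(record, secret_key, id_field="email", drop_fields=("name",)):
    """Return a copy of `record` without direct identifiers.

    The value in `id_field` is replaced by a keyed token so that records
    from the same person can still be linked without exposing the value.
    """
    cleaned = {
        key: value
        for key, value in record.items()
        if key not in drop_fields and key != id_field
    }
    cleaned["subject_token"] = pseudonymize(record[id_field], secret_key)
    return cleaned


# Hypothetical key and record; a real key would come from a secrets manager.
demo_key = b"demo-key-do-not-use"
record = {"name": "Ada Example", "email": "ada@example.com", "age_band": "30-39"}
cleaned = strip_direct_identifiers(record, demo_key)
print(sorted(cleaned))
```

Using a keyed hash instead of a plain hash makes it harder for an outsider to reverse tokens by hashing guessed email addresses.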

Moreover, corporate responsibility extends to the environmental impact of AI technologies. The energy consumption associated with training large AI models can be substantial. Companies should strive to adopt sustainable practices, such as utilizing energy-efficient data centers and exploring renewable energy sources. By doing so, they not only reduce their carbon footprint but also set an example for the industry, demonstrating that ethical considerations include environmental stewardship.
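For a sense of how such energy use might be estimated, here is a back-of-the-envelope sketch: device energy is multiplied by a data-center overhead factor (power usage effectiveness, or PUE) and then by the carbon intensity of the local grid. Every number below is a hypothetical placeholder rather than a measurement, and real accounting would rely on metered figures.

```python
def training_energy_kwh(num_accelerators, avg_power_watts, hours, pue):
    """Estimate facility-level energy for a training run in kWh.

    Device energy (accelerators x average watts x hours) is converted to
    kWh and scaled by PUE to account for cooling and other overhead.
    """
    device_kwh = num_accelerators * avg_power_watts * hours / 1000.0
    return device_kwh * pue


def emissions_kg_co2e(energy_kwh, grid_intensity_kg_per_kwh):
    """Convert energy use to emissions using a grid carbon intensity."""
    return energy_kwh * grid_intensity_kg_per_kwh


# All inputs are hypothetical placeholders for illustration.
energy = training_energy_kwh(
    num_accelerators=64, avg_power_watts=300, hours=100, pue=1.2
)
emissions = emissions_kg_co2e(energy, grid_intensity_kg_per_kwh=0.4)

print(f"estimated energy: {energy:.0f} kWh")
print(f"estimated emissions: {emissions:.1f} kg CO2e")
```

Even a crude estimate like this makes the trade-offs visible: a more efficient facility lowers the PUE term, and a cleaner grid lowers the intensity term.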

In summary, the responsibility of corporations developing AI technologies is multifaceted. It involves:

- Addressing bias and ensuring fairness in AI algorithms.

- Promoting transparency in AI processes and decision-making.

- Protecting user privacy and data rights.

- Considering the environmental impact of AI technologies.

As we look to the future, it is clear that corporate responsibility will play a crucial role in shaping the ethical landscape of AI. Companies that prioritize these values will not only benefit from enhanced public trust and loyalty but also contribute to a more equitable and just society. The challenge lies in integrating these ethical considerations into the core business strategy, ensuring that the pursuit of innovation does not come at the expense of ethical integrity.

1. What is corporate responsibility in the context of AI?

Corporate responsibility in AI refers to the ethical obligations of companies to develop, deploy, and manage AI technologies in ways that are fair, transparent, and respectful of human rights.

2. Why is transparency important for AI companies?

Transparency is crucial as it builds trust with users, allows for external scrutiny, and helps ensure that AI systems are operating fairly and responsibly.

3. How can companies mitigate bias in AI?

Companies can mitigate bias by using diverse data sets, conducting regular audits of their algorithms, and involving a diverse team in the development process.

4. What role does privacy play in AI ethics?

Privacy is a significant ethical concern as AI systems often rely on personal data. Companies must ensure that they collect and use data responsibly, safeguarding users' privacy rights.

5. How can AI companies address their environmental impact?

AI companies can address their environmental impact by adopting energy-efficient technologies, utilizing renewable energy sources, and implementing sustainable practices throughout their operations.

The Future of AI Ethics

As we stand on the precipice of a technological revolution, the future of AI ethics is becoming increasingly complex and multifaceted. The rapid advancement of artificial intelligence is not just a trend; it’s a transformational wave that is reshaping our society, our economies, and even our day-to-day lives. With such profound changes come a plethora of ethical considerations that we must navigate carefully. So, what does the future hold? Will we see a world where AI operates with a moral compass, or are we heading into uncharted, potentially perilous waters?

One key aspect of the future of AI ethics will be the integration of ethical frameworks into AI development processes. As organizations increasingly recognize the importance of ethical considerations, we can expect to see a rise in the establishment of guidelines and standards that govern AI behavior. This could take the form of industry-wide ethics boards or regulatory bodies that oversee AI technologies, ensuring they align with societal values. Imagine a world where every AI system is designed with a built-in ethical framework, much like how we have safety standards for automobiles.

Moreover, the role of public engagement will be paramount. As citizens become more aware of AI’s capabilities and limitations, there will be an increasing demand for transparency and accountability. The public will want to know not just how AI systems are making decisions, but also the ethical implications of those decisions. This could lead to a more informed citizenry that actively participates in discussions about AI policies, ultimately shaping the development of AI technologies to reflect collective values. Think of it as a democratic process where the voices of the people influence the direction of technology.

Additionally, the global nature of AI presents unique challenges and opportunities. Different cultures and countries may have varying ethical standards, which could lead to conflicts in AI governance. For instance, what is considered acceptable AI behavior in one country might be deemed unethical in another. This disparity necessitates international cooperation and dialogue to establish a cohesive ethical framework that respects cultural differences while promoting universal human rights. The future might see the emergence of global treaties or agreements focused on AI ethics, much like the climate accords we have today.

As we look ahead, we must also consider the advancements in AI technology itself. With the rise of autonomous systems, such as self-driving cars and AI-driven decision-making tools, the stakes are higher than ever. These technologies will require robust ethical guidelines to ensure they operate safely and justly. Imagine a future where AI systems are held to the same ethical standards as human professionals: an AI doctor must adhere to the Hippocratic Oath, and a self-driving car must prioritize the safety of its passengers and pedestrians alike.

In conclusion, the future of AI ethics is not just a concern for technologists and ethicists; it is a shared responsibility that involves everyone. As we forge ahead into this new era, we must remain vigilant, proactive, and engaged in discussions about the ethical implications of AI. By doing so, we can strive to create a future where AI technologies enhance our lives while adhering to the highest ethical standards. The journey may be challenging, but the potential reward, a fairer and more equitable society, is worth the effort.

- What are the main ethical concerns regarding AI? The primary concerns include bias, privacy, accountability, and transparency.

- How can we ensure AI is developed ethically? By establishing guidelines, promoting public engagement, and fostering international cooperation.

- What role does the public play in AI ethics? The public can influence AI policies and demand transparency, ensuring technologies align with societal values.

- Will AI have ethical standards similar to human professionals? In the future, we may see AI systems held to ethical standards comparable to those of human professionals.

Frequently Asked Questions

- What is AI ethics?

AI ethics refers to the set of moral principles that guide the development and use of artificial intelligence technologies. It encompasses various considerations, including fairness, accountability, transparency, and the impact of AI on society.

- Why is bias in AI a concern?

Bias in AI is a major concern because it can lead to unfair outcomes and discrimination against certain groups. If AI systems are trained on biased data, they may perpetuate existing inequalities, making it crucial to identify and mitigate bias in these technologies.

- How can bias in AI be mitigated?

Mitigation strategies for bias in AI include using diverse data sets, implementing inclusive design practices, and regularly auditing algorithms for fairness. These approaches help ensure that AI systems are equitable and do not discriminate against users.

- What are the privacy implications of AI?

AI systems, particularly those used in surveillance, raise significant privacy concerns. AI-driven technologies can collect vast amounts of personal data, leading to potential misuse and erosion of individual privacy. Robust privacy protections are essential to safeguard citizens' rights.

- Who is accountable when AI systems cause harm?

Accountability in AI is complex and involves multiple stakeholders, including developers, corporations, and users. Establishing clear legal frameworks is vital to determine responsibility and ensure that victims can seek redress when AI systems cause harm.

- What role do corporations play in AI ethics?

Corporations developing AI technologies have a crucial role in prioritizing ethical practices. By fostering transparency, accountability, and responsible AI deployment, companies can build trust with users and contribute to a more ethical AI landscape.

- What does the future hold for AI ethics?

The future of AI ethics will likely involve evolving considerations as technology advances. Continuous public engagement and ethical oversight will be necessary to address new challenges and ensure that AI benefits society as a whole.