Prioritizing Ethics in AI Innovation

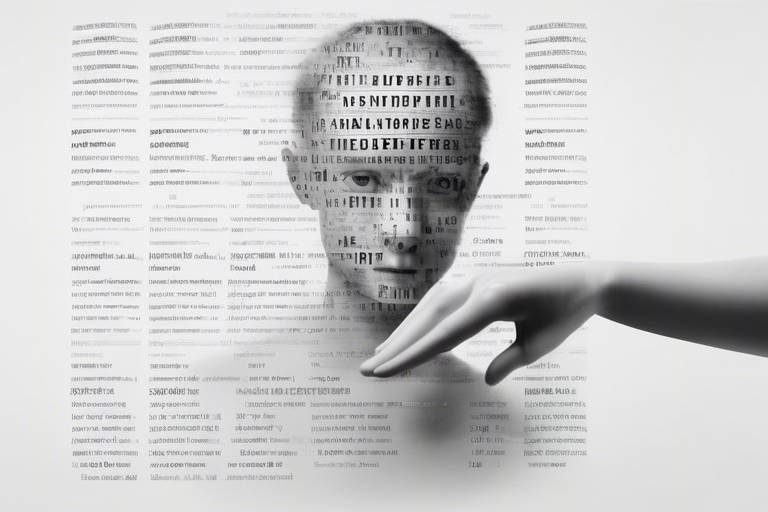

In today’s fast-paced technological landscape, the rise of artificial intelligence (AI) is nothing short of revolutionary. However, with great power comes great responsibility. As we delve deeper into the realms of AI innovation, it's imperative to prioritize ethics to ensure that these advancements do not come at the expense of our values and societal norms. Imagine a world where AI systems make decisions that impact our daily lives—everything from healthcare to criminal justice. The implications are vast and, if not approached with a strong ethical framework, could lead to unintended consequences that could harm individuals and communities.

Ethical considerations in AI development are not just an afterthought; they are fundamental to the way we shape the future. Developers and organizations wield immense influence over how AI technologies are designed and deployed. Thus, they bear a significant responsibility to create systems that are not only efficient but also align with human values. This responsibility extends beyond mere compliance with laws; it involves a commitment to fostering trust, promoting fairness, and ensuring that technology serves the common good.

In this article, we will explore the importance of ethical AI, the key principles that should guide its development, and the regulatory frameworks necessary to support responsible innovation. By prioritizing ethics in AI, we can work towards a future where technology enhances our lives without compromising our fundamental rights and values. So, what does it mean to prioritize ethics in AI innovation? Let’s dive deeper into this critical conversation.

The Importance of Ethical AI

In today's rapidly evolving technological landscape, the significance of ethical considerations in artificial intelligence (AI) cannot be overstated. With AI systems increasingly influencing our daily lives—from the way we communicate to how we make decisions—it's crucial to understand the societal implications that come with these advancements. Imagine a world where AI systems are not just tools but integral parts of our decision-making process. Would you trust a system that lacks ethical grounding? This is where the responsibility of developers and organizations comes into play. They must ensure that the technologies they create align with human values and promote the common good.

One of the primary reasons ethics should be prioritized in AI development is the potential for both positive and negative societal impacts. For instance, while AI can enhance efficiency and provide personalized experiences, it can also perpetuate biases and inequalities if not handled with care. The developers of AI systems hold the power to shape outcomes that can either uplift or undermine communities. Thus, they must be vigilant about the ethical ramifications of their work. It’s a bit like being a chef: if you add too much salt to your dish, it can ruin the entire meal. Similarly, a small oversight in AI ethics can lead to catastrophic consequences.

Moreover, ethical AI fosters trust among users. When people believe that AI systems are designed with their best interests in mind, they are more likely to embrace these technologies. Trust is the bedrock of any relationship, and the relationship between humans and AI is no different. If users feel that AI is transparent, fair, and accountable, they will be more willing to engage with it. This trust not only benefits users but also enhances the reputation of organizations, potentially leading to greater adoption and success in the market.

Furthermore, as AI technology becomes more integrated into various sectors—such as healthcare, finance, and education—the need for ethical guidelines becomes even more pressing. For example, in healthcare, AI can assist in diagnosing diseases, but if the algorithms are biased, they may lead to misdiagnoses for certain populations. This could have dire consequences, affecting lives and perpetuating health disparities. Therefore, the responsibility of creating ethical AI extends beyond developers to include policymakers, industry leaders, and society as a whole. We all play a role in ensuring that AI is a force for good.

In conclusion, prioritizing ethical considerations in AI development is not just a choice; it's a necessity. As we navigate this intricate landscape, we must ask ourselves: Are we building technology that serves humanity? By placing ethics at the forefront, we can create AI systems that not only advance innovation but also uphold the values that define us as a society. The journey toward ethical AI is ongoing, and it requires a collective effort to ensure that our technological future is bright and equitable.

Key Ethical Principles

When we talk about ethical AI, we’re diving into a realm that isn’t just about technology; it’s about humanity itself. The development of artificial intelligence has the potential to revolutionize our world, which makes it crucial to establish a foundation of key ethical principles to guide this innovation. These principles include fairness, accountability, transparency, and respect for privacy. Each of these elements plays a pivotal role in ensuring that AI serves the common good rather than becoming a tool of oppression or inequality.

Let’s break these principles down a bit further. First up is fairness. Imagine a world where AI systems make decisions that impact your life—like hiring, lending, or even law enforcement. If these systems are biased, they can perpetuate existing inequalities, leading to unfair treatment of certain groups. Therefore, ensuring fairness means actively working to eliminate biases in AI algorithms. This is not just a technical challenge; it’s a moral imperative. Developers must be vigilant in identifying and addressing these biases to create equitable outcomes for all.

Next, we have accountability. In the fast-paced world of AI, it’s easy for developers to hide behind their code, but accountability demands that they take responsibility for the systems they create. This means being transparent about how AI models are developed and deployed, and ensuring that there are mechanisms in place to address any negative consequences that arise. It’s not enough to just create a powerful AI; developers and organizations must own the impact of their innovations.

Then there’s transparency. This principle is about making AI systems understandable and accessible to users. When people interact with AI, they should have a clear idea of how decisions are made. This not only builds trust but also allows users to challenge and question outcomes that seem unjust. Transparency can take many forms, from clear documentation of algorithms to user-friendly interfaces that explain AI decision-making in layman’s terms.

Finally, we must talk about respect for privacy. In our increasingly digital world, personal data is a valuable commodity, and AI systems often rely on vast amounts of this data to function effectively. However, the collection and use of personal data raise significant ethical concerns. Developers must prioritize user privacy by implementing robust data protection measures and ensuring that individuals have control over their own information. This respect for privacy is not just a legal obligation; it’s a fundamental aspect of ethical AI development.

In summary, the key ethical principles of fairness, accountability, transparency, and respect for privacy are not just buzzwords; they are essential guidelines that should shape the entire lifecycle of AI development. By adhering to these principles, we can foster a future where technology enhances our lives while upholding our shared values. As we move forward, it’s crucial to keep these principles at the forefront of our minds, ensuring that AI innovation is not only groundbreaking but also responsible and ethical.

- What is ethical AI? Ethical AI refers to the development and deployment of artificial intelligence systems that prioritize human rights, fairness, and accountability.

- Why is fairness important in AI? Fairness is vital to prevent discrimination and ensure that AI systems do not perpetuate existing societal biases.

- How can transparency be achieved in AI systems? Transparency can be achieved through clear documentation, user-friendly explanations, and open communication about how AI decisions are made.

- What role does privacy play in ethical AI? Privacy is crucial as it ensures individuals have control over their personal data, which is often used by AI systems.

Fairness in AI

In the rapidly evolving world of artificial intelligence, the concept of fairness is not just a buzzword; it’s a fundamental principle that must guide AI development. Imagine a world where AI systems are designed to treat everyone equally, regardless of their background, ethnicity, or gender. Sounds ideal, right? However, the reality is that many AI algorithms inadvertently carry biases that can lead to unfair outcomes. This is why understanding and implementing fairness in AI is essential for creating technology that genuinely serves all of humanity.

Fairness in AI encompasses the idea of eliminating biases that can skew results and lead to discrimination. For instance, if an AI system is trained on historical data that reflects societal inequalities, it may perpetuate those biases in its decision-making processes. This can manifest in various applications, from hiring algorithms that favor certain demographics to facial recognition systems that misidentify individuals from minority groups. Therefore, it’s crucial to actively seek out and address these biases to ensure equitable outcomes.

One effective approach to achieving fairness in AI is to incorporate diverse datasets during the training phase. By ensuring that the data reflects a wide range of perspectives and experiences, developers can create systems that are more representative of the population as a whole. Furthermore, regular audits of AI systems can help identify and mitigate biases that may arise over time. Developers should also engage with diverse stakeholders throughout the development process to gain insights that can inform fair practices.

Implementing fair practices is not just about addressing existing inequalities; it’s also about fostering a culture of inclusivity in AI development. This might involve:

- Inclusive Design: Ensuring that people from various backgrounds are involved in the design process can lead to more equitable AI systems.

- Bias Mitigation Techniques: Employing statistical methods to detect and reduce bias in AI algorithms is crucial.

- Transparency: Providing clear explanations about how AI decisions are made can help build trust and accountability.

Ultimately, fairness in AI is about creating technology that reflects our shared values and upholds the dignity of all individuals. As we continue to innovate, it’s imperative that we prioritize fairness to prevent the emergence of systems that reinforce existing societal biases. By committing to fair practices, we can pave the way for a future where AI is a force for good, promoting equity and justice in every application.

Q1: What is fairness in AI?

A1: Fairness in AI refers to the principle of ensuring that AI systems make decisions without bias, treating all individuals equitably regardless of their background.

Q2: Why is fairness important in AI development?

A2: Fairness is crucial because biased AI systems can perpetuate discrimination and inequality, leading to negative societal impacts.

Q3: How can developers ensure fairness in AI?

A3: Developers can ensure fairness by using diverse datasets, conducting regular audits, and engaging with a wide range of stakeholders during the design process.

Identifying Biases

Identifying biases within artificial intelligence systems is a crucial step towards ensuring fairness and equity in technology. Biases can creep into AI algorithms through various channels, often leading to unfair treatment of certain groups or individuals. These biases can originate from the data used to train AI models, the design of the algorithms themselves, or even the societal norms that influence developers. Recognizing these biases is not just about spotting them; it’s about understanding their sources and implications. For instance, if an AI system is trained on historical data that reflects past prejudices, it may inadvertently perpetuate those biases in its decision-making processes. This creates a ripple effect that can adversely impact marginalized communities, reinforcing existing inequalities.

One effective method for identifying biases is through data auditing. This involves a thorough examination of the datasets used in AI training. By analyzing the demographics represented in the data, developers can uncover whether certain groups are underrepresented or misrepresented. For example, if a facial recognition system is predominantly trained on images of light-skinned individuals, its accuracy for darker-skinned individuals may be significantly lower, leading to harmful consequences. Therefore, auditing data not only highlights potential biases but also provides a pathway for corrective measures.
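A data audit of this kind can start very simply. The sketch below is only illustrative: the function name `representation_report`, the `skin_tone` field, the toy records, and the 25% threshold are all assumptions made for the example, not part of any standard. It counts how often each group appears in a dataset and flags groups whose share falls below the chosen threshold.

```python
from collections import Counter


def representation_report(records, group_key, min_share=0.10):
    """Return each group's count and share, flagging groups below min_share.

    The threshold is an illustrative assumption; a real audit would compare
    shares against a reference population relevant to the application.
    """
    counts = Counter(record[group_key] for record in records)
    total = sum(counts.values())
    report = {}
    for group, count in counts.items():
        share = count / total
        report[group] = {
            "count": count,
            "share": share,
            "underrepresented": share < min_share,
        }
    return report


# Hypothetical toy dataset: 18 light-skinned and 2 dark-skinned image records.
sample = [{"skin_tone": "light"}] * 18 + [{"skin_tone": "dark"}] * 2

report = representation_report(sample, "skin_tone", min_share=0.25)
for group, stats in report.items():
    print(group, stats)
```

A report like this only shows who is present in the data. It says nothing about label quality or about how a trained model behaves, which is why it is usually paired with an audit of outcomes.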

Another approach is employing algorithmic audits, where the algorithms themselves are scrutinized for biased outcomes. This process often involves running the AI system through various scenarios to observe how it performs across different demographic groups. By evaluating the outcomes, developers can identify patterns of discrimination that may not be immediately apparent. For instance, if a hiring algorithm consistently favors candidates from certain backgrounds over others, it raises a red flag that demands further investigation.
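One way to make such an audit concrete is to compare how often each group receives a favorable outcome. The following is a minimal sketch under stated assumptions: the group labels `A` and `B`, the toy hiring outcomes, and the helper names `selection_rates` and `disparity_ratio` are hypothetical, and the ratio of lowest to highest selection rate is just one of several possible fairness measures.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Compute the share of favorable decisions per group.

    decisions: iterable of (group, was_selected) pairs.
    """
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def disparity_ratio(rates):
    """Return the lowest selection rate divided by the highest one."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so there is no disparity to measure
    return min(rates.values()) / highest


# Hypothetical outcomes from a hiring model: group A 6 of 10, group B 3 of 10.
outcomes = (
    [("A", True)] * 6 + [("A", False)] * 4
    + [("B", True)] * 3 + [("B", False)] * 7
)

rates = selection_rates(outcomes)
ratio = disparity_ratio(rates)
print(rates, ratio)
```

A ratio well below 1 is the kind of red flag described above. It does not prove discrimination on its own, but it indicates where further investigation is needed.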

Moreover, utilizing diverse teams in the development process can significantly aid in identifying biases. When teams are composed of individuals from varied backgrounds, they bring different perspectives and experiences to the table, which can help in recognizing blind spots that a homogeneous team might overlook. This diversity fosters a culture of inclusion that is vital for ethical AI development.

To summarize, identifying biases in AI systems is a multifaceted endeavor that requires a combination of data auditing, algorithmic scrutiny, and diverse team collaboration. By taking these steps, we can work towards creating AI technologies that are not only innovative but also equitable and just. The responsibility lies with developers and organizations to ensure that their AI systems do not perpetuate existing societal biases but instead contribute positively to the fabric of society.

- What are some common sources of bias in AI? Bias can arise from skewed training data, algorithm design, and societal norms influencing developers.

- How can organizations mitigate bias in their AI systems? By conducting thorough data and algorithm audits, employing diverse teams, and continuously monitoring outcomes.

- Why is identifying bias important? It is essential to ensure fairness, equity, and accountability in AI systems, and to keep them from discriminating or reinforcing societal inequalities.

- What role does diversity play in identifying biases? Diverse teams bring different perspectives, which can help uncover biases that might be overlooked by a more homogeneous group.

Implementing Fair Practices

When it comes to implementing fair practices in AI, we must first acknowledge the complexities involved. It's not just about creating algorithms; it's about ensuring that these algorithms are designed with a sense of social responsibility. Developers and organizations need to commit to a framework that prioritizes fairness at every stage of the AI lifecycle. This means actively engaging with diverse communities and understanding their unique needs and perspectives. It’s akin to cooking a meal: if you only use one ingredient, the dish will lack flavor and depth. Similarly, if AI systems are built without considering a wide range of inputs, they risk becoming biased and ineffective.

One effective strategy for fostering fairness in AI is the adoption of inclusive design principles. This approach emphasizes the importance of involving a broad spectrum of voices in the development process. Imagine trying to build a bridge without consulting the people who will cross it; the result would likely be a structure that fails to meet their needs. By including stakeholders from various backgrounds—such as different races, genders, and socioeconomic statuses—developers can better identify potential biases and address them before they manifest in the final product.

Moreover, organizations should implement regular audits of their AI systems. These audits serve as a check-up, ensuring that the algorithms are functioning as intended and not perpetuating existing biases. Think of it like getting your car serviced; regular maintenance can prevent larger issues down the road. By conducting these audits, companies can identify discrepancies in how their AI systems operate across different demographics and make necessary adjustments. This proactive approach not only enhances fairness but also builds trust with users who may feel marginalized by technology.

Additionally, it’s crucial to provide ongoing training for developers and stakeholders on ethical AI practices. Just as a doctor must stay updated on the latest medical procedures, AI developers should be aware of the evolving landscape of ethical considerations. Workshops, seminars, and online courses can be invaluable resources for keeping everyone informed about the latest findings and best practices in AI fairness.

Finally, collaboration with external organizations can significantly enhance the fairness of AI systems. Partnering with academic institutions, non-profits, and advocacy groups can bring in fresh perspectives and expertise that might be lacking internally. For instance, a tech company might collaborate with a civil rights organization to better understand the implications of its AI systems for marginalized communities. This partnership can lead to more equitable outcomes and foster a culture of accountability and transparency.

In conclusion, implementing fair practices in AI is not a one-time task but an ongoing commitment. By embracing inclusive design, conducting regular audits, providing education, and collaborating with external organizations, we can pave the way for AI systems that truly serve everyone. After all, in a world that is becoming increasingly dependent on technology, fairness should never be an afterthought.

- What are fair practices in AI development? Fair practices in AI development refer to the principles and strategies aimed at eliminating biases and ensuring equitable outcomes in AI systems.

- Why is inclusive design important? Inclusive design is crucial because it incorporates diverse perspectives, helping to identify and mitigate potential biases in AI algorithms.

- How can organizations audit their AI systems? Organizations can audit their AI systems by analyzing performance across different demographics and adjusting algorithms to correct any discrepancies.

- What role does collaboration play in ethical AI? Collaboration with external organizations can provide valuable insights and expertise, enhancing the fairness and accountability of AI systems.

Accountability in AI Development

Accountability in AI development isn't just a buzzword; it's a fundamental necessity that shapes the future of technology and its integration into our daily lives. As AI systems become more complex and autonomous, the question arises: who is responsible when things go wrong? This isn't just a theoretical concern; it has real-world implications. Imagine an AI system making a decision that adversely affects someone's life—who do you turn to for answers? The developers? The companies? This ambiguity can lead to a lack of trust among users and stakeholders alike, which is why establishing clear lines of accountability is crucial.

One of the primary roles of accountability is to ensure that AI systems are developed with a sense of responsibility. Developers and organizations must recognize that their creations have the potential to impact society significantly. This means that they must not only focus on the technical aspects of AI but also consider the ethical implications of their work. For instance, if an AI system used in hiring practices is found to be biased, it raises the question of who is accountable for that bias. Is it the data scientists who trained the model, the management team that approved its deployment, or the organization as a whole? Each party has a role to play, and accountability must be shared.

To foster a culture of accountability, organizations should implement structured frameworks that clearly define roles and responsibilities at every stage of AI development. This can be achieved through:

- Documentation: Keeping thorough records of decision-making processes, data usage, and model training can help trace back any issues that arise.

- Ethics Committees: Establishing dedicated teams to oversee AI projects can ensure that ethical considerations are integrated into the development lifecycle.

- Regular Audits: Conducting periodic evaluations of AI systems can help identify potential risks and biases, allowing for timely interventions.
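The documentation practice in particular is easy to start small. As a rough sketch, a team might keep one structured record per deployed model; the `ModelRecord` class, its fields, and every value below are hypothetical choices for illustration, not an established schema.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ModelRecord:
    """Hypothetical record of who approved a model and what it was trained on."""
    model_name: str
    training_data: str
    approved_by: str
    intended_use: str
    known_limitations: list = field(default_factory=list)


def to_log_line(record):
    """Serialize a record as one JSON line so it can be appended to an audit log."""
    return json.dumps(asdict(record), sort_keys=True)


# All values here are invented for the example.
record = ModelRecord(
    model_name="resume-screener-v1",
    training_data="applications-2020-2023",
    approved_by="ethics-committee",
    intended_use="rank applications for human review",
    known_limitations=["not audited for age bias"],
)

line = to_log_line(record)
print(line)
```

Keeping such records in an append-only log gives auditors and ethics committees something concrete to trace when an issue surfaces later.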

Moreover, accountability isn't just about internal processes; it also extends to external communication. Developers should be transparent with users about how AI systems operate, the data they use, and the potential risks involved. This transparency builds trust and encourages users to engage with AI technologies more confidently. For example, if a company openly shares the algorithms and datasets used in its AI applications, it allows for greater scrutiny and feedback from the public, which can lead to improvements and innovations.

In addition, regulatory bodies and policymakers share responsibility for accountability. They must create frameworks that not only promote innovation but also protect the rights and interests of individuals affected by AI systems. This includes establishing guidelines on ethical AI practices and holding organizations accountable for any breaches. As AI continues to evolve, so too must the regulations that govern it, ensuring that accountability remains a priority.

Ultimately, accountability in AI development is about creating a balance between innovation and ethical responsibility. By acknowledging the potential consequences of AI technologies and implementing robust accountability measures, we can pave the way for a future where AI serves humanity positively and ethically. It's about asking the tough questions and ensuring that every player in the AI ecosystem understands their role in fostering a responsible and trustworthy technological landscape.

- What is accountability in AI development? Accountability in AI development refers to the responsibility of developers and organizations to ensure that AI systems are created, deployed, and managed ethically and transparently.

- Why is accountability important in AI? It is crucial to prevent misuse, bias, and negative impacts on society, ensuring that AI technologies serve the common good.

- How can organizations promote accountability? Organizations can promote accountability by implementing documentation practices, forming ethics committees, and conducting regular audits of AI systems.

- What role do regulations play in AI accountability? Regulations help establish clear guidelines and standards for ethical AI practices, holding organizations accountable for their technologies.

Regulatory Frameworks for Ethical AI

Establishing regulatory frameworks for ethical AI is not just important; it’s essential. As artificial intelligence continues to evolve at a breakneck pace, the need for comprehensive policies that guide its development becomes increasingly pressing. Without these frameworks, we risk creating a technological landscape that operates without accountability, potentially leading to harmful consequences for society. Imagine driving a car without traffic laws—chaos would ensue! Similarly, AI systems without regulations can lead to unpredictable and often dangerous outcomes.

Currently, various countries are scrambling to catch up with the rapid advancements in AI technology. Some have initiated regulatory measures aimed at ensuring that AI development aligns with ethical standards. For instance, the European Union's AI Act, adopted in 2024, classifies AI systems based on their risk levels and imposes stricter requirements on high-risk applications. This act is a significant step towards creating a structured approach to AI governance, but it also raises questions about its effectiveness and the challenges of enforcement.

One of the primary challenges in establishing effective regulatory frameworks is the dynamic nature of AI technology itself. As new innovations emerge, existing regulations may quickly become outdated. This creates a tug-of-war between fostering innovation and ensuring that ethical standards are upheld. Policymakers must be agile, adapting regulations so that they keep pace with technological advancements while also safeguarding public interests.

To illustrate the current state of AI regulations globally, consider the following table that highlights some key initiatives:

| Region | Initiative | Focus Area |
|---|---|---|
| European Union | AI Act | Risk-based classification of AI systems |
| United States | Algorithmic Accountability Act | Transparency in AI algorithms |
| Canada | Directive on Automated Decision-Making | Accountability in automated decisions |
| United Kingdom | AI Sector Deal | Investment and ethical guidelines |

As we can see, various regions are taking steps to regulate AI, but the approaches differ significantly. Some focus on transparency and accountability, while others emphasize risk classification and ethical guidelines. This disparity can lead to confusion and inconsistency, especially for companies operating in multiple jurisdictions. International collaboration is therefore crucial for establishing shared standards that can guide ethical AI development globally.

Moreover, the role of organizations and industry stakeholders cannot be overlooked. They have a significant influence on shaping ethical practices and can act as a bridge between regulatory bodies and the tech community. By advocating for ethical standards and participating in the regulatory process, these organizations can help ensure that AI technologies are developed responsibly.

In conclusion, while the journey towards establishing comprehensive regulatory frameworks for ethical AI is fraught with challenges, it is a journey we must undertake. The stakes are high, and the implications for society are profound. By prioritizing ethical considerations in AI development, we can harness the power of this technology to serve the common good, rather than allowing it to spiral out of control.

- What are the main challenges in regulating AI? The primary challenges include the rapid evolution of technology, the need for international cooperation, and balancing innovation with ethical standards.

- Why is ethical AI important? Ethical AI ensures that technologies are developed responsibly, minimizing the risk of harm and promoting fairness and accountability.

- How can organizations contribute to ethical AI practices? Organizations can advocate for ethical standards, participate in regulatory discussions, and implement best practices in their AI development processes.

Global Initiatives

In today's fast-paced technological landscape, the call for ethical AI practices has never been louder. Numerous global initiatives have emerged to address the pressing need for responsible AI development. These initiatives aim to create a framework that not only guides developers but also instills trust among users and stakeholders. For instance, organizations like the Partnership on AI bring together industry leaders, academics, and civil society to promote best practices and share knowledge. Their collaborative approach emphasizes the significance of ethical considerations in AI, ensuring that innovations align with societal values.

Another noteworthy initiative is the OECD's Principles on Artificial Intelligence, which sets out guidelines for governments and organizations to foster trustworthy AI. These principles advocate for AI that is inclusive, sustainable, and respects human rights. By establishing a common ground, the OECD aims to facilitate international cooperation and ensure that AI technologies are developed and deployed responsibly.

Moreover, the European Union has taken significant strides with its AI Act, which seeks to regulate AI systems based on their risk levels. This legislation aims to ensure that high-risk AI applications undergo rigorous testing and adhere to strict ethical standards. The EU's proactive stance highlights the importance of having robust regulatory frameworks to mitigate potential harms associated with AI technologies.

These initiatives are not just isolated efforts. They represent a growing recognition that ethical AI is a shared responsibility that transcends borders. For instance, the Global Partnership on AI (GPAI) is an international initiative that brings together experts from various countries to collaborate on AI research and policy. By pooling resources and knowledge, GPAI aims to accelerate the adoption of ethical AI practices globally.

In addition to these formal initiatives, there are numerous grassroots movements and organizations advocating for ethical AI. These groups often focus on raising public awareness about the implications of AI and the importance of integrating ethical considerations into technology design. Their efforts are crucial in pushing for transparency and accountability, ensuring that AI serves the common good rather than merely corporate interests.

In conclusion, the landscape of ethical AI is being shaped by a multitude of global initiatives. Each of these efforts plays a vital role in fostering an environment where technology can thrive while respecting human rights and societal values. As we move forward, it is essential for developers, policymakers, and the public to engage in these discussions, ensuring that ethical considerations remain at the forefront of AI innovation.

- What are the main goals of global initiatives for ethical AI? The primary goals include promoting best practices, ensuring accountability, and fostering international cooperation to develop AI technologies responsibly.

- How can organizations participate in these initiatives? Organizations can join partnerships, adhere to established guidelines, and contribute to discussions around ethical AI practices.

- Why is regulatory oversight important in AI development? Regulatory oversight is crucial to mitigate risks associated with AI technologies, ensuring they are safe, fair, and aligned with human rights.

Challenges in Regulation

Regulating artificial intelligence (AI) is akin to trying to catch smoke with your bare hands. The rapid evolution of technology presents a myriad of challenges that make it difficult for lawmakers and regulatory bodies to keep pace. As AI systems become more sophisticated, the potential for misuse and unintended consequences grows, making it essential to strike a balance between fostering innovation and ensuring safety and ethical standards.

One of the primary challenges in regulating AI is the sheer speed at which the technology evolves. Traditional regulatory frameworks often rely on established norms and practices, which can quickly become obsolete in the face of groundbreaking advancements. For instance, consider how quickly social media platforms evolved; regulations that were relevant a few years ago are often inadequate today. Similarly, AI technology can outpace regulations, leaving gaps that could be exploited.

Moreover, the global nature of AI technology adds another layer of complexity. AI systems are often developed and deployed across borders, making it difficult to enforce regulations that may vary from one country to another. This leads to a patchwork of regulations that can confuse developers and businesses. Inconsistent regulations can stifle innovation, as companies may hesitate to invest in AI technologies if they are unsure about compliance requirements.

Additionally, the lack of a clear understanding of AI's implications can hinder regulatory efforts. Policymakers often struggle to grasp the technical aspects of AI, leading to regulations that may not effectively address the core issues. For example, regulations that focus solely on data privacy without considering algorithmic transparency may fail to protect users adequately. This underscores the need for collaboration between technologists and regulators to ensure that policies are informed and effective.

Another significant challenge lies in the ethical considerations surrounding AI. As AI systems increasingly influence decisions in sectors like healthcare, finance, and law enforcement, the potential for bias and discrimination becomes a pressing concern. Regulators must grapple with how to enforce fairness and accountability without stifling innovation. This is where the concept of ethical AI becomes crucial, as it provides a framework for guiding the development of technology that aligns with societal values.

It's also worth noting that creating regulations for AI isn't just about addressing current challenges; it's about anticipating future developments. The AI landscape is continually changing, and regulations must be adaptable to remain relevant. Policymakers need to be forward-thinking, considering not just the technology of today but also the innovations of tomorrow. This requires a commitment to ongoing dialogue and engagement with stakeholders across the industry.

In conclusion, while the challenges in regulating AI are significant, they are not insurmountable. By fostering collaboration between technologists and regulators, embracing a flexible approach to policy-making, and prioritizing ethical considerations, we can create a regulatory environment that supports responsible AI innovation. The goal should be to harness the potential of AI while safeguarding society from its risks, ensuring that this powerful technology serves the common good.

- What are the main challenges in regulating AI?

The main challenges include the rapid evolution of technology, global inconsistencies in regulations, a lack of understanding among policymakers, ethical considerations, and the need for adaptable regulations.

- Why is it important to regulate AI?

Regulating AI is crucial to ensure safety, prevent misuse, promote fairness, and protect individual rights in an increasingly automated world.

- How can stakeholders collaborate to improve AI regulation?

Stakeholders can collaborate by engaging in open dialogues, sharing knowledge, and developing joint initiatives that address both technological advancements and ethical considerations.

Frequently Asked Questions

- What is the significance of ethics in AI development?

Ethics in AI development is crucial because it ensures that technology aligns with human values and societal norms. By prioritizing ethical considerations, we can mitigate potential harms and foster trust in AI systems, making them beneficial for everyone.

- How can we ensure fairness in AI systems?

Ensuring fairness in AI systems involves identifying and mitigating biases in algorithms and data. Techniques like diverse data collection, regular audits, and inclusive design practices can help achieve more equitable outcomes and reduce the risk of discrimination against any group.

- What are the key ethical principles guiding AI innovation?

The key ethical principles include fairness, accountability, transparency, and respect for privacy. These principles serve as a foundation for developing AI technologies that not only perform effectively but also adhere to ethical standards that benefit society as a whole.

- Why is accountability important in AI development?

Accountability is essential in AI development because it ensures that developers and organizations take responsibility for their creations. This responsibility includes maintaining ethical standards throughout the AI lifecycle, thereby fostering trust and reliability in AI applications.

- What are some global initiatives promoting ethical AI?

Several global initiatives focus on promoting ethical AI practices, including organizations like the Partnership on AI and the IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems. These groups work towards establishing guidelines and standards to ensure responsible AI development across the globe.

- What challenges do regulators face in managing AI ethics?

Regulators face unique challenges in managing AI ethics due to the rapid evolution of technology. Keeping regulations current while encouraging innovation is difficult, as outdated policies can hinder progress and fail to address emerging ethical concerns.
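
For readers who want a concrete picture of the "regular audits" mentioned in the fairness answer above, here is a minimal sketch in Python. It is an illustration only, not a method prescribed by any of the initiatives named in this article. It assumes an audit boils down to comparing how often each group receives a favourable decision, a measure often called the demographic parity gap. The function names, the group labels "A" and "B", and the sample data are all hypothetical.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of favourable decisions for each group.

    `records` is an iterable of (group, decision) pairs, where decision
    is 1 for a favourable outcome and 0 otherwise.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, decision in records:
        totals[group] += 1
        positives[group] += decision
    return {group: positives[group] / totals[group] for group in totals}


def demographic_parity_gap(records):
    """Difference between the highest and lowest group selection rates."""
    rates = selection_rates(records)
    return max(rates.values()) - min(rates.values())


# Hypothetical audit sample: group label and the system's decision.
sample = [
    ("A", 1), ("A", 1), ("A", 0), ("A", 1),
    ("B", 1), ("B", 0), ("B", 0), ("B", 0),
]

rates = selection_rates(sample)
gap = demographic_parity_gap(sample)
print(rates, gap)
```

A large gap does not prove discrimination on its own, and a small one does not rule it out. It is one signal among several that an audit might track over time, alongside the data collection and design practices described above.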