Designing Cybersecurity in an Era of AI

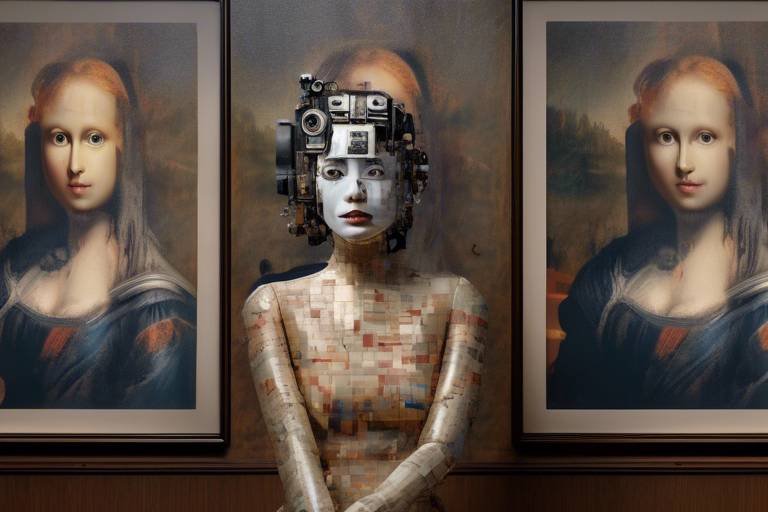

In today's rapidly evolving digital landscape, the intersection of artificial intelligence and cybersecurity is becoming increasingly significant. As technology advances, so do the threats that lurk in the shadows of the internet. Cybersecurity is no longer just about firewalls and antivirus software; it is about using newer technologies to predict, detect, and respond to threats in real time. Imagine machines that learn from millions of data points to flag the early signs of a breach before it causes damage. This is the promise of AI in cybersecurity, but it also raises a host of questions about how we can protect ourselves in an era where both technology and threats are becoming more sophisticated.

As we delve deeper into this topic, we will explore the ways AI is transforming the cybersecurity landscape, the unique challenges posed by AI-driven attacks, and innovative strategies that organizations can adopt to build resilient security frameworks. With AI's ability to analyze vast amounts of data and learn from patterns, it enhances threat detection and response capabilities like never before. However, the same technology that empowers us can also be exploited by cybercriminals, creating a cat-and-mouse game that demands constant vigilance and adaptation.

In this article, we will not only highlight the opportunities presented by AI but also the potential risks it introduces. From deepfakes that can undermine trust to the ethical implications surrounding data privacy, the conversation around AI and cybersecurity is as complex as it is critical. As we navigate through these challenges, it becomes clear that a collaborative approach—where AI technologies and human expertise work hand in hand—will be essential for effective threat management. Join us as we uncover the future landscape of digital security in an increasingly automated world.

Artificial intelligence is transforming cybersecurity by enhancing threat detection and response capabilities. This section examines how AI tools are being integrated into security frameworks to combat evolving cyber threats.

As AI technology advances, so do the tactics of cybercriminals. This section highlights the unique challenges posed by AI-driven attacks and the need for adaptive security measures to counteract them.

Deepfake technology presents new risks in cybersecurity, enabling sophisticated misinformation campaigns. This subsection explores the implications of deepfakes for trust and security in digital communications.

Understanding how to detect and counter deepfake threats is crucial for organizations. This section discusses current methods and technologies used to identify and mitigate deepfake risks.

The rise of deepfakes raises significant legal and ethical questions. This section examines the implications for privacy, consent, and the responsibility of tech companies in managing these technologies.

AI enhances threat intelligence by analyzing vast data sets for patterns and anomalies. This subsection discusses how organizations leverage AI to predict and prevent cyber threats effectively.

Creating resilient cybersecurity frameworks involves integrating AI technologies while addressing vulnerabilities. This section outlines best practices for developing robust security systems that can adapt to emerging threats.

While AI can automate many processes, human expertise remains vital. This section discusses the importance of collaboration between AI systems and cybersecurity professionals for effective threat management.

Looking ahead, this section explores upcoming trends in cybersecurity influenced by AI advancements, including predictive analytics, automated response systems, and the evolving role of human oversight in security protocols.

Q: How does AI improve cybersecurity?

A: AI improves cybersecurity by analyzing large datasets to identify patterns and anomalies, enabling quicker threat detection and response.

Q: What are the risks associated with AI in cybersecurity?

A: The risks include the potential for AI to be used by cybercriminals to automate attacks and create deepfakes, which can undermine trust in digital communications.

Q: Can AI completely replace human cybersecurity experts?

A: No, while AI can automate many processes, human expertise is essential for strategic decision-making and understanding complex threats.

Q: What measures can organizations take to protect against AI-driven attacks?

A: Organizations should implement adaptive security measures, continuously update their systems, and foster collaboration between AI tools and human experts.

The Rise of AI in Cybersecurity

Artificial intelligence (AI) is rapidly transforming the landscape of cybersecurity, acting as a powerful ally in the ongoing battle against cyber threats. Imagine AI as a vigilant guard dog, tirelessly watching over your digital assets, ready to bark at any sign of danger. With its ability to analyze vast amounts of data at lightning speed, AI enhances threat detection and response capabilities like never before. This integration of AI tools into security frameworks is not just a trend; it’s a necessity in a world where cybercriminals are becoming increasingly sophisticated.

One of the most significant advantages of AI in cybersecurity is its ability to learn and adapt. Traditional security measures often rely on predefined rules and signatures, which can be easily bypassed by clever attackers. In contrast, AI systems utilize machine learning algorithms to identify patterns and anomalies in network traffic. This means that they can detect unusual behavior that deviates from the norm, flagging it for further investigation. For instance, if an employee suddenly accesses sensitive data they’ve never touched before, an AI-driven system can raise an alert, potentially preventing a data breach before it occurs.
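The employee example above can be sketched in a few lines of Python. This is a deliberately simple rule-based baseline rather than a trained machine learning model, and the resource names, the `SENSITIVE` set, and the function name are all hypothetical, chosen only for illustration.

```python
from collections import defaultdict

# Hypothetical set of resources treated as sensitive for this illustration.
SENSITIVE = {"payroll_db", "customer_pii"}


def find_first_time_sensitive_access(history, new_events):
    """Return events where a user touches a sensitive resource absent from their history.

    Both arguments are iterables of (user, resource) pairs.
    """
    seen = defaultdict(set)
    for user, resource in history:
        seen[user].add(resource)

    alerts = []
    for user, resource in new_events:
        if resource in SENSITIVE and resource not in seen[user]:
            alerts.append((user, resource))
        # Record the access so the same pair is flagged only once.
        seen[user].add(resource)
    return alerts


history = [("alice", "wiki"), ("alice", "payroll_db"), ("bob", "wiki")]
new_events = [
    ("alice", "payroll_db"),   # alice has used payroll_db before: no alert
    ("bob", "customer_pii"),   # bob has never touched customer_pii: alert
    ("bob", "customer_pii"),   # repeat of the same access: not flagged again
]
alerts = find_first_time_sensitive_access(history, new_events)
print(alerts)  # [('bob', 'customer_pii')]
```

A production system would likely weigh many more signals, such as time of day, device, and peer-group behavior, but the core idea of comparing new activity against a per-user baseline is the same.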

Furthermore, AI-powered tools can automate routine security tasks, freeing up human experts to focus on more complex issues. Just think of it like having a personal assistant who handles all the mundane chores, allowing you to dedicate your time to strategic planning and execution. With AI managing tasks such as log analysis and vulnerability scanning, organizations can respond to threats more quickly and efficiently. This automation not only enhances security but also reduces operational costs, making it a win-win situation for businesses.
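As a rough illustration of the kind of log analysis that can be automated, the sketch below counts failed logins per source address and reports the sources that cross a threshold. The log format is an assumption loosely modeled on SSH authentication logs, the addresses come from ranges reserved for documentation, and the function name and threshold are hypothetical.

```python
import re
from collections import Counter

# Assumed log format, loosely modeled on SSH auth logs; adjust for real data.
FAILED_LOGIN = re.compile(r"Failed password for (?:invalid user )?(\S+) from (\S+)")


def failed_logins_by_source(lines, threshold=3):
    """Return {source_ip: count} for sources with at least `threshold` failed logins."""
    counts = Counter()
    for line in lines:
        match = FAILED_LOGIN.search(line)
        if match:
            counts[match.group(2)] += 1
    return {ip: n for ip, n in counts.items() if n >= threshold}


log_lines = [
    "Failed password for root from 203.0.113.9 port 4242 ssh2",
    "Failed password for invalid user admin from 203.0.113.9 port 4243 ssh2",
    "Accepted password for alice from 198.51.100.7 port 5151 ssh2",
    "Failed password for root from 203.0.113.9 port 4244 ssh2",
    "Failed password for bob from 198.51.100.7 port 5152 ssh2",
]
suspicious = failed_logins_by_source(log_lines)
print(suspicious)  # {'203.0.113.9': 3}
```

Simple counting like this is not AI in itself, but it is the sort of routine chore that automation takes off an analyst's plate and that more advanced models can build on.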

However, the rise of AI in cybersecurity is not without its challenges. As AI tools become more prevalent, cybercriminals are adapting their tactics as well. Many attackers now use AI to develop more sophisticated attacks, creating a cat-and-mouse game between defenders and attackers. For instance, AI can be used to automate phishing attacks, making them more convincing and harder to detect. Defenders must therefore keep adapting their security measures to stay one step ahead of the bad actors.

In summary, the rise of AI in cybersecurity is reshaping the way organizations protect themselves against cyber threats. By enhancing threat detection, automating routine tasks, and learning from vast datasets, AI serves as a crucial component of modern security frameworks. However, as we embrace these advancements, we must also remain vigilant against the evolving tactics of cybercriminals. The future of cybersecurity lies in the harmonious integration of AI technologies and human expertise, creating a robust defense against the digital threats that loom on the horizon.

Challenges Posed by AI-Driven Attacks

As we venture further into the realm of artificial intelligence, we find ourselves grappling with a double-edged sword. On one side, AI offers incredible advancements in cybersecurity, but on the flip side, it has also become a powerful tool for cybercriminals. The challenges posed by AI-driven attacks are not just technical; they are complex, multifaceted issues that require a robust response. Cybercriminals are leveraging AI to create more sophisticated attacks, making it essential for organizations to stay one step ahead in this ongoing battle.

One of the most significant challenges is the speed and efficiency with which attackers can use AI to analyze data. Traditional cybersecurity measures often struggle to keep up with the rapid pace of AI-driven attacks. For instance, AI can automate the process of identifying vulnerabilities, allowing attackers to exploit them before organizations even realize a threat exists. This leaves defenders constantly playing catch-up, which is both exhausting and increasingly risky.

Moreover, the rise of deepfake technology exemplifies how AI can be weaponized. Deepfakes can create hyper-realistic fake videos or audio recordings that can mislead individuals or manipulate public opinion. Imagine receiving a video of your CEO making a controversial statement, only to find out later that it was a deepfake. The implications for trust and security are staggering, and organizations must invest in technologies that can detect such manipulations before they cause irreparable damage.

AI-driven attacks also introduce a new level of complexity in threat detection. Traditional methods often rely on known signatures or patterns of behavior to identify threats. However, AI can help attackers generate novel attack vectors that do not match existing signatures, making them difficult to detect. This calls for adaptive security measures that can learn and evolve over time. Organizations should implement systems that use machine learning to identify anomalies and respond in real time.
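To make the idea of anomaly detection concrete, here is a minimal sketch that flags observations far from a learned baseline. It uses a plain z-score test from the Python standard library as a stand-in for a machine learning model; the traffic figures, the threshold of 3, and the function name are assumptions for illustration only.

```python
import statistics


def zscore_anomalies(baseline, observations, threshold=3.0):
    """Return (index, value, z) for observations far from the baseline mean."""
    mean = statistics.mean(baseline)
    stdev = statistics.stdev(baseline)
    if stdev == 0:
        # A flat baseline gives no scale to measure deviation against.
        return []
    flagged = []
    for i, value in enumerate(observations):
        z = (value - mean) / stdev
        if abs(z) > threshold:
            flagged.append((i, value, round(z, 2)))
    return flagged


# Requests per minute during a normal period (made-up numbers).
baseline = [100, 102, 98, 101, 99, 100, 103, 97]
# New measurements to check against that baseline.
observations = [101, 99, 180, 100]

flagged = zscore_anomalies(baseline, observations)
print(flagged)  # [(2, 180, 40.0)]
```

Unlike a signature, this check needs no prior knowledge of the attack: anything that deviates sharply from normal behavior is surfaced. Real deployments typically refresh the baseline over time so that the notion of "normal" keeps pace with the environment.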

Additionally, the sheer volume of alerts and telemetry produced by security tooling, including AI-based detectors, can overwhelm security teams. Every monitored system and interaction adds more data that must be analyzed for potential threats. This flood can lead to alert fatigue, where security professionals become desensitized to alarms and may overlook genuine threats. To combat this, companies should use AI within their security frameworks to filter out noise, group related alerts, and surface what truly matters.
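One simple way to reduce noise is to collapse duplicate alerts and rank what remains. The sketch below assumes each alert is a `(rule, host, severity)` tuple; the severity weights, rule names, and host names are hypothetical.

```python
from collections import Counter

# Hypothetical weights; tune these to your own severity scheme.
SEVERITY_WEIGHT = {"low": 1, "medium": 3, "high": 10}


def triage(alerts, top_n=3):
    """Collapse duplicate alerts and rank the survivors.

    Repeats of the same (rule, host, severity) tuple are merged into one
    entry with a count. Entries are ranked by severity weight first, then
    by how often they fired.
    """
    counts = Counter(alerts)
    ranked = sorted(
        counts.items(),
        key=lambda item: (SEVERITY_WEIGHT[item[0][2]], item[1]),
        reverse=True,
    )
    return [(rule, host, severity, n) for (rule, host, severity), n in ranked[:top_n]]


alerts = [
    ("port_scan", "web-01", "low"),
    ("port_scan", "web-01", "low"),
    ("port_scan", "web-01", "low"),
    ("new_admin_account", "db-02", "high"),
    ("failed_login_burst", "vpn-01", "medium"),
    ("failed_login_burst", "vpn-01", "medium"),
]
queue = triage(alerts)
for entry in queue:
    print(entry)
# ('new_admin_account', 'db-02', 'high', 1)
# ('failed_login_burst', 'vpn-01', 'medium', 2)
# ('port_scan', 'web-01', 'low', 3)
```

Six raw alerts become three queue entries, with the single high-severity event at the top instead of buried under repeated port scans.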

In response to these challenges, organizations must adopt a proactive approach to cybersecurity. This involves not only investing in advanced technologies but also fostering a culture of security awareness among employees. Training staff to recognize the signs of AI-driven attacks, such as phishing attempts that utilize deepfake technology, can significantly reduce the risk of successful breaches.

Furthermore, collaboration between AI systems and human experts is crucial. While AI can automate many processes, the intuition and experience of cybersecurity professionals are irreplaceable. This partnership can lead to more effective threat management strategies, combining the speed of AI with the critical thinking skills of humans.

In conclusion, the challenges posed by AI-driven attacks are significant but not insurmountable. By embracing adaptive security measures, investing in employee training, and fostering collaboration between AI and human experts, organizations can create a resilient cybersecurity framework that stands strong against the evolving landscape of digital threats.

- What are AI-driven attacks? AI-driven attacks utilize artificial intelligence to enhance the sophistication and speed of cyber threats, making them harder to detect and counter.

- How can organizations protect themselves from AI-driven attacks? Organizations can protect themselves by implementing adaptive security measures, investing in employee training, and fostering collaboration between AI systems and human experts.

- What role does deepfake technology play in cybersecurity? Deepfake technology can be used to create realistic fake content that can mislead individuals or organizations, posing significant risks to trust and security.

Deepfakes and Misinformation

In today's digital landscape, the emergence of deepfake technology has revolutionized the way we perceive and interact with information. Imagine a world where video evidence can be manipulated so convincingly that it challenges our very notion of truth. This is not just a sci-fi scenario; it’s a reality we face today. Deepfakes use advanced artificial intelligence to create hyper-realistic fake videos and audio recordings, blurring the lines between reality and fabrication. As a result, they pose significant risks to cybersecurity, trust, and the integrity of information.

The implications of deepfakes extend far beyond mere entertainment; they can fuel misinformation campaigns that can mislead the public, manipulate opinions, and even sway elections. For instance, a deepfake video of a political figure making inflammatory statements could incite chaos and unrest, all based on a fabrication. This manipulation of media not only undermines public trust but also complicates the efforts of organizations striving to maintain factual integrity in their communications.

To further understand the impact of deepfakes, let’s consider a few key areas where they have raised concerns:

- Political Manipulation: Deepfakes can be weaponized to create false narratives that damage reputations and influence public perception.

- Corporate Espionage: In the corporate world, deepfakes can be used to impersonate executives, leading to fraudulent activities and financial losses.

- Personal Privacy: Individuals can become victims of deepfakes, with their likenesses being used without consent, leading to harassment or defamation.

As deepfake technology becomes more sophisticated, the challenge of identifying and countering these threats grows more daunting. Organizations must implement robust strategies to detect deepfakes and mitigate their impact. This includes investing in advanced detection tools that leverage AI to analyze video and audio for inconsistencies that human eyes might miss.

Moreover, the rise of deepfakes raises significant legal and ethical questions. Who is responsible when a deepfake is used to defame someone? What are the implications for privacy and consent? As these technologies continue to evolve, it’s crucial for lawmakers and tech companies to collaborate in establishing regulations that protect individuals and organizations from the potential harms of deepfakes.

In conclusion, deepfakes represent a double-edged sword in the realm of cybersecurity. While they showcase the incredible capabilities of AI, they also present profound challenges that require immediate attention and action. By understanding the risks and implementing proactive measures, we can hope to navigate this complex landscape and safeguard the truth in our digital communications.

- What are deepfakes? Deepfakes are synthetic media where a person’s likeness is replaced with someone else’s using AI technology.

- How do deepfakes affect cybersecurity? They can be used to spread misinformation, manipulate public opinion, and commit fraud, posing significant risks to organizations and individuals.

- What can be done to detect deepfakes? Organizations can use advanced AI detection tools that analyze inconsistencies in video and audio content to identify deepfakes.

Identifying Deepfake Threats

In today's digital landscape, the emergence of deepfake technology poses a significant challenge to cybersecurity. These hyper-realistic manipulations of audio and video can create a false sense of reality, making it increasingly difficult to discern fact from fiction. As organizations grapple with the implications of deepfakes, understanding how to identify these threats becomes crucial. But how can we effectively spot something designed to look so authentic?

One effective approach to identifying deepfake threats lies in the use of advanced detection technologies. These technologies leverage machine learning algorithms to analyze videos and audio for inconsistencies that the human eye might miss. For example, subtle discrepancies in facial movements or unnatural speech patterns can signal that a deepfake is at play. Organizations can deploy these algorithms as part of their cybersecurity frameworks to enhance their ability to detect potential threats.

Additionally, human expertise plays an essential role in identifying deepfake content. While AI can process vast amounts of data quickly, cybersecurity professionals bring critical thinking and contextual understanding to the table. By combining the analytical capabilities of AI with the nuanced judgment of human experts, organizations can create a more robust defense against deepfake threats. This collaboration not only helps in detection but also in the formulation of effective responses to mitigate risks.

To further illustrate the importance of identifying deepfake threats, consider the following table that outlines common indicators of deepfake content:

| Indicator | Description |
|---|---|
| Inconsistent Facial Movements | Facial expressions that do not match the audio or context can indicate manipulation. |
| Unnatural Eye Blinking | Deepfakes often struggle with mimicking natural eye movements, leading to unnatural blinking patterns. |
| Audio-Visual Mismatch | Discrepancies between what is being said and the speaker's lip movements can signal a deepfake. |
| Background Artifacts | Strange distortions or blurring in the background may indicate the presence of a deepfake. |
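The indicators in the table can be combined into a single suspicion score that decides when a clip should go to a human reviewer. The sketch below assumes that some upstream detector has already produced a score between 0 and 1 for each indicator; the weights, the 0.5 threshold, and the function names are hypothetical and would need calibration on labeled media.

```python
# Hypothetical weights; a real system would calibrate these on labeled media.
INDICATOR_WEIGHTS = {
    "inconsistent_facial_movements": 0.3,
    "unnatural_eye_blinking": 0.2,
    "audio_visual_mismatch": 0.3,
    "background_artifacts": 0.2,
}


def deepfake_suspicion(indicator_scores):
    """Combine per-indicator scores in [0, 1] into one weighted score in [0, 1].

    Indicators missing from the input are treated as 0 (no evidence).
    """
    total = 0.0
    for name, weight in INDICATOR_WEIGHTS.items():
        score = indicator_scores.get(name, 0.0)
        if not 0.0 <= score <= 1.0:
            raise ValueError(f"score for {name} must be between 0 and 1")
        total += weight * score
    return total


def needs_human_review(indicator_scores, threshold=0.5):
    """Return True when the combined score reaches the review threshold."""
    return deepfake_suspicion(indicator_scores) >= threshold


clip = {
    "inconsistent_facial_movements": 0.8,
    "unnatural_eye_blinking": 0.5,
    "audio_visual_mismatch": 0.9,
}
score = deepfake_suspicion(clip)
print(round(score, 2), needs_human_review(clip))  # 0.61 True
```

A weighted sum is only one possible design. Its main advantage is that an analyst can see which indicators drove the score, which matters when the final call rests with a person.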

As deepfake technology continues to evolve, staying ahead of these threats requires a proactive approach. Organizations need to invest in both technology and training to ensure that their teams are equipped to identify and respond to deepfake content effectively. Regular training sessions on the latest detection methods and threat scenarios can empower cybersecurity professionals to act swiftly when faced with potential deepfake incidents.

Ultimately, the key to combating deepfake threats lies in a multifaceted strategy that combines technology, human expertise, and ongoing education. By fostering a culture of vigilance and adaptability, organizations can better navigate the complexities of deepfake technology and protect themselves from its potentially damaging effects.

- What are deepfakes? Deepfakes are synthetic media where a person’s likeness is replaced with someone else's, often used to create misleading or false content.

- How can I tell if a video is a deepfake? Look for inconsistencies in facial movements, unnatural blinking, and audio-visual mismatches as potential indicators.

- What technologies help in detecting deepfakes? Machine learning algorithms and AI-based tools are commonly used to analyze and detect deepfake content.

- Why is human expertise important in identifying deepfakes? Human experts can provide contextual understanding and critical thinking that AI may lack, making their input invaluable in threat detection.

Legal and Ethical Considerations

The rapid rise of deepfake technology has sparked a whirlwind of legal and ethical challenges that society must confront. As these sophisticated tools become more accessible, the implications for privacy, consent, and accountability are becoming increasingly complex. Organizations and individuals alike are left wondering: who is responsible when a deepfake is used to manipulate public opinion or damage reputations? This uncertainty creates a pressing need for legal frameworks that can adapt to the fast-paced evolution of technology.

One of the most significant legal dilemmas arises from the fact that deepfakes can easily infringe on personal rights. For instance, when someone's likeness is used without permission, it raises questions about intellectual property and the right of publicity. In many jurisdictions, laws are still catching up to the technology, leaving victims without adequate recourse. This gap in legislation allows malicious actors to exploit deepfake technology, resulting in potential harm to individuals and society at large.

Furthermore, the ethical implications of deepfakes extend beyond legalities. The ability to create hyper-realistic videos or audio clips can erode trust in digital communications. Imagine a world where every video could be questioned—where seeing is no longer believing. This potential for widespread misinformation not only impacts individuals but can also have grave consequences for democracy and social cohesion. The ethical responsibility of tech companies becomes paramount as they navigate the fine line between innovation and potential misuse of their products.

As we delve deeper into these complexities, it’s essential to consider a few key questions:

- What measures can be implemented to protect individuals from unauthorized deepfake usage?

- How can tech companies ensure they are held accountable for the misuse of their technologies?

- What role should governments play in regulating the use of deepfake technology?

In response to these concerns, some jurisdictions are beginning to enact laws specifically targeting the use of deepfakes. For instance, certain states in the U.S. have introduced legislation that makes it illegal to create or distribute deepfakes without consent, particularly when the intent is to defraud or harm. However, the challenge remains in enforcing these laws across various platforms and jurisdictions, given the global nature of the internet.

Moreover, ethical guidelines are being developed to help organizations navigate these murky waters. Companies are encouraged to adopt a proactive stance by implementing internal policies and training programs aimed at raising awareness about the risks associated with deepfakes. By fostering a culture of responsibility and accountability, organizations can play a pivotal role in mitigating the risks posed by this technology.

In conclusion, as deepfake technology continues to evolve, so too must our legal and ethical frameworks. The responsibility lies not only with lawmakers but also with tech companies and society as a whole to engage in meaningful discussions about the implications of this technology. By addressing these challenges head-on, we can work towards a future where innovation does not come at the cost of our fundamental rights and societal trust.

- What are deepfakes? Deepfakes are synthetic media in which a person's likeness is digitally altered to create realistic-looking but fake content.

- Are deepfakes illegal? The legality of deepfakes varies by jurisdiction. Some places have enacted laws against malicious use, while others have yet to catch up.

- How can I protect myself from deepfake misuse? Being aware of the technology and advocating for stronger regulations can help protect individuals from deepfake exploitation.

AI in Threat Intelligence

In today's digital landscape, the integration of artificial intelligence (AI) into threat intelligence is nothing short of revolutionary. Organizations are constantly bombarded with a barrage of cyber threats, and the sheer volume of data generated daily can be overwhelming. This is where AI steps in, acting like a vigilant guard dog, sniffing out patterns and anomalies that would otherwise go unnoticed by human analysts. Imagine having a supercharged brain that can analyze thousands of data points in mere seconds, identifying potential threats before they escalate into full-blown attacks. That’s the power of AI in threat intelligence.

AI enhances threat intelligence by using algorithms that sift through vast amounts of information from various sources, including network traffic, user behavior, and historical attack data. By leveraging machine learning techniques, these systems can learn from past incidents, adapting and improving their detection capabilities over time. For instance, AI can recognize subtle changes in network traffic that may indicate a breach, alerting cybersecurity teams to investigate further. This proactive approach not only saves time but also allows organizations to respond to threats in real time.

Moreover, AI-driven threat intelligence platforms can aggregate data from multiple sources, creating a comprehensive view of the threat landscape. This includes analyzing social media trends, monitoring dark web activities, and even tracking geopolitical events that could impact cybersecurity. By understanding the context behind potential threats, organizations can prioritize their responses more effectively. For example, if an AI system detects a spike in discussions about a specific vulnerability on hacker forums, it can alert the security team to take preventive measures before an exploit occurs.
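The forum example above boils down to comparing today's mention counts with a recent baseline. Here is a minimal sketch, assuming the mentions have already been collected and reduced to lists of identifiers; the placeholder IDs `VULN-A` and `VULN-B`, the multiplier, and the minimum count are all hypothetical.

```python
from collections import Counter
from statistics import mean


def mention_spikes(daily_mentions, today, factor=3.0, min_mentions=5):
    """Flag identifiers mentioned far more often today than on a typical earlier day.

    daily_mentions: one list of identifiers per earlier day.
    today: list of identifiers mentioned today.
    Returns (identifier, count_today, baseline_average) tuples, busiest first.
    """
    history = [Counter(day) for day in daily_mentions]
    spikes = []
    for vuln_id, count in Counter(today).items():
        # Counter returns 0 for identifiers that were absent on a given day.
        baseline = mean(day[vuln_id] for day in history) if history else 0
        if count >= min_mentions and count > factor * baseline:
            spikes.append((vuln_id, count, baseline))
    return sorted(spikes, key=lambda s: s[1], reverse=True)


earlier_days = [
    ["VULN-A"],
    ["VULN-A", "VULN-B"],
    ["VULN-A"],
]
today = ["VULN-B"] * 6 + ["VULN-A"] * 2

spikes = mention_spikes(earlier_days, today)
print([s[0] for s in spikes])  # ['VULN-B']
```

`VULN-A` is mentioned steadily and stays below the minimum count, so it is ignored. `VULN-B` jumps from roughly one mention every three days to six in one day, which is the kind of signal a security team might want to see before an exploit circulates.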

As organizations continue to embrace AI in their threat intelligence strategies, it’s essential to consider the ethical implications. The use of AI raises questions about privacy and data security. Organizations must ensure that they are not infringing on individual rights while gathering and analyzing data. Additionally, there’s the risk of over-reliance on AI systems, which could lead to complacency among human analysts. It’s crucial to strike a balance, where AI serves as an invaluable tool, but human oversight remains a key component of cybersecurity efforts.

In conclusion, the role of AI in threat intelligence is transformative, offering organizations the ability to stay one step ahead of cybercriminals. By harnessing the power of AI, businesses can enhance their security posture, improve incident response times, and ultimately protect their valuable digital assets. As we look to the future, the collaboration between AI systems and human experts will be critical in navigating the ever-evolving landscape of cyber threats.

- What is threat intelligence? Threat intelligence is the collection and analysis of information about potential threats to an organization, allowing for proactive defense measures.

- How does AI improve threat intelligence? AI improves threat intelligence by analyzing large datasets quickly, identifying patterns, and predicting potential threats based on historical data.

- Are there ethical concerns with using AI in cybersecurity? Yes, there are ethical concerns regarding privacy and data security, as well as the potential for over-reliance on AI systems without adequate human oversight.

Building Resilient Security Frameworks

In today's rapidly evolving digital landscape, building resilient security frameworks is not just an option; it's a necessity. With cyber threats becoming more sophisticated, organizations must adopt a proactive approach to cybersecurity that integrates cutting-edge technologies like artificial intelligence (AI). But what does it mean to be resilient in the face of such challenges? Essentially, it means having a security system that can not only withstand attacks but also adapt to new threats as they emerge. This involves a multifaceted strategy that combines technology, processes, and human expertise.

One of the fundamental aspects of creating a resilient security framework is integrating AI technologies. AI can analyze vast amounts of data at lightning speed, identifying patterns and anomalies that would be impossible for a human to detect alone. For instance, by employing machine learning algorithms, organizations can continuously learn from past incidents and improve their defenses over time. However, while AI can automate many processes, it’s crucial to remember that it should complement human expertise rather than replace it. The most effective security frameworks are those that harness the strengths of both AI and human professionals.

To build a truly robust security system, organizations should consider the following best practices:

- Continuous Monitoring: Implementing real-time monitoring solutions ensures that any suspicious activity is detected and addressed promptly.

- Regular Updates: Keeping software and systems updated is vital to protect against newly discovered vulnerabilities.

- Incident Response Plans: Developing and regularly testing incident response plans helps organizations react swiftly and effectively to breaches.

- Employee Training: Ensuring that all employees are aware of cybersecurity best practices can significantly reduce the risk of human error, which is often a weak link in security.

Moreover, collaboration is key. Cybersecurity is not a solo endeavor; it thrives on teamwork. Organizations should foster a culture where cybersecurity professionals work closely with AI systems to enhance threat detection and response capabilities. This collaboration can lead to innovative solutions that not only protect sensitive data but also provide peace of mind to stakeholders.

As organizations invest in building resilient frameworks, they must also be aware of the importance of adaptability. The cyber threat landscape is constantly changing, and what works today may not be effective tomorrow. Therefore, organizations should regularly assess their security measures, adapting to new threats and incorporating feedback from past incidents. This cycle of continuous improvement is what will ultimately lead to a more secure environment.

In summary, building resilient security frameworks is about creating a dynamic interplay between technology and human expertise. By leveraging AI, fostering collaboration, and remaining adaptable, organizations can not only protect themselves against current threats but also prepare for the inevitable challenges that lie ahead.

Q1: What are the key components of a resilient security framework?

A resilient security framework typically includes continuous monitoring, regular updates, incident response plans, and employee training. Each of these components plays a critical role in ensuring that an organization can effectively respond to cyber threats.

Q2: How can AI enhance cybersecurity?

AI enhances cybersecurity by analyzing large data sets for patterns and anomalies, allowing for quicker detection of potential threats and automating responses to incidents. This capability enables organizations to stay one step ahead of cybercriminals.

Q3: Why is human expertise important in cybersecurity?

While AI can automate many processes, human expertise is vital for interpreting data, making strategic decisions, and responding to complex threats. A combined approach that leverages both AI and human skills is the most effective way to manage cybersecurity challenges.

Collaboration Between AI and Human Experts

In the ever-evolving landscape of cybersecurity, the collaboration between artificial intelligence and human experts is not just beneficial; it is essential. Think of AI as a powerful tool, akin to a high-speed train, capable of processing vast amounts of data and identifying threats at lightning speed. However, without the guidance of skilled cybersecurity professionals, this train could easily go off the rails. The synergy between these two elements creates a dynamic defense mechanism that can adapt and respond to threats more effectively than either could alone.

AI technologies excel in analyzing patterns, detecting anomalies, and automating mundane tasks, allowing human experts to focus on more complex problems. For instance, while AI can sift through terabytes of data to identify potential vulnerabilities, it is the human touch that interprets these findings, assesses their implications, and devises strategic responses. This blend of speed and intuition is what makes modern cybersecurity so robust. Organizations that embrace this collaboration often find themselves better equipped to face the increasing sophistication of cyber threats.

Moreover, the integration of AI into cybersecurity frameworks fosters a culture of continuous learning. As AI systems gather data from various incidents, they learn and improve over time. However, it is the insights and contextual understanding provided by human experts that inform these systems, ensuring they evolve in a way that is relevant to the specific challenges faced by the organization. This is where the magic happens—when human intuition meets machine learning.

To illustrate the effectiveness of this collaboration, consider a scenario where an organization faces a potential breach. The AI system detects unusual activity, flagging it for review. Here’s how the collaboration unfolds:

- AI Analysis: The AI analyzes the behavior patterns and identifies the activity as a potential threat.

- Human Expertise: Cybersecurity professionals review the AI's findings, considering the context and potential impact.

- Strategic Response: Together, they formulate a response plan that incorporates both automated and manual interventions.
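The three steps above can be sketched in a few lines of Python. This is only an illustrative sketch, not a production design: the function names, the host names, the z-score threshold of 3.0, and the idea of modelling the analyst as a check against a list of hosts under planned maintenance are all assumptions made for the example.

```python
from dataclasses import dataclass
from statistics import mean, pstdev


@dataclass
class Alert:
    host: str
    value: float
    score: float  # z-score relative to the baseline


def ai_analysis(baseline, observed, threshold=3.0):
    """Step 1: flag hosts whose activity deviates strongly from the baseline."""
    mu = mean(baseline)
    sigma = pstdev(baseline) or 1.0  # avoid division by zero on a flat baseline
    alerts = []
    for host, value in observed.items():
        score = (value - mu) / sigma
        if score >= threshold:
            alerts.append(Alert(host, value, score))
    return alerts


def human_review(alert, known_maintenance_hosts):
    """Step 2: stand-in for an analyst adding context the model lacks."""
    return alert.host not in known_maintenance_hosts


def strategic_response(alert, confirmed):
    """Step 3: combine an automated action with a manual follow-up."""
    if confirmed:
        return [f"auto: isolate {alert.host}", f"manual: investigate {alert.host}"]
    return [f"manual: record {alert.host} as a false positive"]


# Hypothetical requests per minute: a normal baseline and current readings.
baseline = [100, 110, 95, 105, 90]
observed = {"web-01": 104, "db-02": 400, "backup-03": 380}

plans = {}
for alert in ai_analysis(baseline, observed):
    confirmed = human_review(alert, known_maintenance_hosts={"backup-03"})
    plans[alert.host] = strategic_response(alert, confirmed)
```

Here the model flags both `db-02` and `backup-03`, but only the analyst knows that `backup-03` is running a scheduled job, so only `db-02` is isolated. That contextual check is exactly the part the automated step cannot supply on its own.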

This collaborative approach not only enhances the immediate response to threats but also contributes to long-term security strategies. By leveraging AI's capabilities, human experts can develop more nuanced and effective policies that address both current and future risks.

In conclusion, the partnership between AI and human experts is a cornerstone of modern cybersecurity. As we move forward in this digital age, organizations must prioritize this collaboration to build resilient security frameworks that can withstand the challenges posed by increasingly sophisticated cyber threats. It's a dance of technology and human insight, and when done right, it creates a formidable force against cybercrime.

Q1: How does AI improve cybersecurity?

AI enhances cybersecurity by automating threat detection, analyzing vast datasets for anomalies, and providing real-time responses to potential breaches.

Q2: Why is human expertise still necessary in cybersecurity?

While AI can process data quickly, human experts are essential for contextual understanding, strategic decision-making, and ethical considerations in cybersecurity.

Q3: What are the risks of relying solely on AI for cybersecurity?

Relying solely on AI can lead to vulnerabilities if the technology misinterprets data or fails to adapt to new threats. Human oversight is crucial for effective threat management.

Q4: How can organizations foster collaboration between AI and human experts?

Organizations can encourage collaboration by investing in training programs that enhance human skills in interpreting AI data and by creating interdisciplinary teams that include both AI specialists and cybersecurity professionals.

Future Trends in Cybersecurity and AI

The future of cybersecurity is intricately tied to the advancements in artificial intelligence, creating a landscape that is both exciting and daunting. As we look ahead, several key trends are emerging that are set to redefine how organizations protect their digital assets. One of the most significant trends is the use of predictive analytics. By leveraging AI algorithms to analyze historical data, organizations can anticipate potential threats before they materialize. This proactive approach allows businesses to stay one step ahead of cybercriminals, making it increasingly difficult for them to execute their malicious plans.
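As a rough illustration of the idea, the sketch below forecasts the next value of a security metric from its history and checks it against a limit. It uses simple exponential smoothing as a deliberately basic stand-in for the AI algorithms mentioned above; the function names, the smoothing factor, the `capacity` limit, and the failed-login numbers are all hypothetical.

```python
def forecast_next(history, alpha=0.5):
    """Exponentially smoothed forecast of the next value in a series."""
    if not history:
        raise ValueError("history must not be empty")
    level = history[0]
    for value in history[1:]:
        # Recent observations get more weight than older ones.
        level = alpha * value + (1 - alpha) * level
    return level


def needs_preventive_action(history, capacity, alpha=0.5):
    """Return True when the forecast exceeds what the team treats as normal."""
    return forecast_next(history, alpha) > capacity


# Hypothetical daily counts of failed logins against one service.
failed_logins = [20, 24, 30, 44, 60]
prediction = forecast_next(failed_logins)
act_now = needs_preventive_action(failed_logins, capacity=40)
```

A real deployment would likely use richer features and a trained model, but the shape is the same: learn from historical data, project forward, and trigger a preventive step before the limit is actually reached.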

Moreover, automated response systems are becoming more prevalent. These systems can react to threats in real time, significantly reducing the time it takes to contain potential damage. Imagine a security guard who doesn’t just alert you to a breach but also locks the doors and calls for backup, all in a matter of seconds. This level of automation is not just a dream; it’s quickly becoming a reality as AI technologies evolve.
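One common way to structure such a system is a playbook that maps each alert type to an ordered list of predefined actions. The sketch below assumes that design; the alert types, the `Host` class, and the action functions are invented for illustration and only record what a real system would do.

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    name: str
    isolated: bool = False
    log: list = field(default_factory=list)


def isolate(host):
    """Placeholder for cutting the host off from the network."""
    host.isolated = True
    host.log.append("isolated from network")


def notify_team(host):
    """Placeholder for paging the security team."""
    host.log.append("security team notified")


# Hypothetical playbook: alert type -> ordered list of predefined actions.
PLAYBOOK = {
    "ransomware": [isolate, notify_team],
    "suspicious_login": [notify_team],
}


def respond(alert_type, host):
    """Run every predefined action for the alert; unknown types go to humans."""
    actions = PLAYBOOK.get(alert_type, [notify_team])
    for action in actions:
        action(host)
    return [action.__name__ for action in actions]


laptop = Host("laptop-17")
taken = respond("ransomware", laptop)
```

Falling back to `notify_team` for unrecognized alert types keeps a person in the loop whenever the playbook has no predefined answer.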

Another fascinating trend is the evolving role of human oversight in security protocols. While AI can handle vast amounts of data and execute tasks with speed and precision, the human element remains critical. Cybersecurity professionals will need to work alongside AI systems, providing the intuition and contextual understanding that machines currently lack. This collaboration will ensure that organizations can effectively interpret AI-generated insights and make informed decisions in the face of complex threats.

Additionally, as AI continues to develop, we can expect to see a rise in adaptive security measures. These measures will use machine learning to learn continuously from new data and evolving threats, allowing security systems to adapt in real time. This is akin to a chameleon that changes its color to blend in with its surroundings, making it harder for predators to spot it. In the same way, adaptive security will help organizations remain resilient against emerging threats.
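A minimal sketch of the adaptive idea, assuming a single numeric metric such as requests per minute: the detector below keeps a running mean and variance (Welford's method) and updates them with every value it considers normal, so its notion of "normal" shifts as traffic changes. The class name, the threshold of 3 standard deviations, and the warm-up length are assumptions for the example, and real adaptive systems would use far richer models.

```python
import math


class AdaptiveBaseline:
    """Running mean and variance (Welford's method), updated per sample."""

    def __init__(self, threshold=3.0, warmup=5):
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0  # running sum of squared deviations from the mean
        self.threshold = threshold
        self.warmup = warmup

    def is_anomalous(self, x):
        if self.n < self.warmup:
            return False  # not enough data to judge yet
        std = math.sqrt(self.m2 / self.n)
        if std == 0:
            return x != self.mean
        return abs(x - self.mean) / std > self.threshold

    def update(self, x):
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)

    def observe(self, x):
        """Score first, then learn only from values that look normal."""
        flagged = self.is_anomalous(x)
        if not flagged:
            self.update(x)
        return flagged


baseline = AdaptiveBaseline()
for requests_per_minute in [100, 102, 98, 101, 99]:
    baseline.observe(requests_per_minute)
spike_flagged = baseline.observe(500)
```

Skipping the update for flagged values is a design choice: it stops a single large spike from dragging the baseline upward, at the cost of needing human input when traffic changes abruptly for legitimate reasons.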

However, with these advancements come challenges. The rapid development of AI tools can lead to a skills gap, where the demand for cybersecurity professionals who understand AI far exceeds the supply. Organizations will need to invest in training and development to equip their teams with the skills necessary to navigate this new landscape effectively. Furthermore, the ethical implications of AI in cybersecurity must be addressed. Questions surrounding privacy, consent, and accountability will become increasingly important as AI systems take on more responsibility in threat detection and response.

In conclusion, the future trends in cybersecurity and AI are not just about technology; they are about creating a holistic approach to security that incorporates human insight, advanced analytics, and automated solutions. As we embrace these innovations, organizations must remain vigilant and proactive, ensuring that they are not only prepared for the threats of today but also for those of tomorrow.

- What is predictive analytics in cybersecurity?

Predictive analytics involves using historical data and AI algorithms to forecast potential cyber threats, allowing organizations to take preventive measures before an attack occurs.

- How do automated response systems work?

Automated response systems react to cyber threats in real time, executing predefined actions to mitigate damage, such as isolating affected systems or alerting security teams.

- Why is human oversight important in AI-driven cybersecurity?

Human oversight is crucial because it provides the contextual understanding and intuition needed to interpret AI-generated insights and make informed decisions in complex situations.

- What are adaptive security measures?

Adaptive security measures use machine learning to continuously learn from new data and evolving threats, allowing security systems to adjust in real time to protect against attacks.

Frequently Asked Questions

- What role does AI play in enhancing cybersecurity?

AI plays a crucial role in cybersecurity by improving threat detection and response times. With its ability to analyze vast amounts of data quickly, AI can identify patterns and anomalies that may indicate a cyber threat. This allows organizations to respond proactively to potential attacks, making their security measures more robust and effective.

- What are the challenges associated with AI-driven cyber attacks?

AI-driven cyber attacks present unique challenges, such as the sophistication of the attacks and the speed at which they can occur. Cybercriminals are leveraging AI to create more advanced malware and phishing schemes, making it harder for traditional security measures to keep up. Organizations must adapt their security frameworks to counter these evolving threats effectively.

- How do deepfakes impact cybersecurity?

Deepfakes pose significant risks to cybersecurity by enabling misinformation campaigns and identity fraud. They can undermine trust in digital communications, making it challenging for individuals and organizations to discern genuine content from manipulated media. This technology raises concerns about privacy, consent, and the potential for abuse in various contexts.

- What methods are used to detect deepfake threats?

Detecting deepfake threats involves a combination of advanced algorithms and human oversight. Technologies such as machine learning models analyze video and audio for inconsistencies that may indicate manipulation. Additionally, organizations are developing tools to verify the authenticity of digital content, helping to mitigate the risks associated with deepfakes.

- What legal and ethical issues arise from the use of deepfakes?

The rise of deepfakes raises significant legal and ethical questions, particularly regarding privacy and consent. Issues such as the unauthorized use of someone's likeness and the potential for defamation are critical concerns. Tech companies also face scrutiny over their responsibilities in managing and regulating the use of deepfake technology.

- How does AI enhance threat intelligence?

AI enhances threat intelligence by analyzing large datasets to identify trends and potential vulnerabilities. By leveraging machine learning algorithms, organizations can predict and prevent cyber threats before they materialize. This proactive approach allows security teams to stay one step ahead of cybercriminals, improving overall security posture.

- What are best practices for building resilient cybersecurity frameworks?

Building resilient cybersecurity frameworks involves integrating AI technologies while addressing existing vulnerabilities. Best practices include regular security assessments, employee training on security awareness, and implementing multi-layered security protocols. Collaboration between AI systems and human experts is also essential for effective threat management.

- Why is collaboration between AI and human experts important in cybersecurity?

Collaboration between AI and human experts is vital because, while AI can automate many processes, human intuition and expertise are irreplaceable. Cybersecurity professionals can interpret complex data, make informed decisions, and respond to threats in ways that AI alone cannot. This synergy enhances the effectiveness of security measures.

- What are the future trends in cybersecurity influenced by AI?

Future trends in cybersecurity influenced by AI include the rise of predictive analytics, which enables organizations to foresee potential threats, and automated response systems that can react to incidents in real time. Additionally, the evolving role of human oversight will become increasingly important as AI technologies continue to advance and integrate into security protocols.