AI and Ethics: Are We Doing Enough?

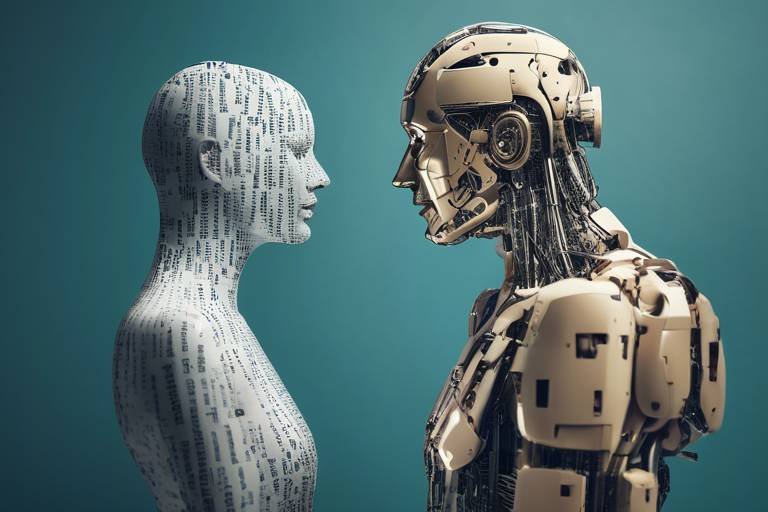

As artificial intelligence (AI) continues to weave itself into the very fabric of our daily lives, the question of whether we are doing enough to address the ethical implications of these technologies looms larger than ever. Imagine a world where your personal data is not just a commodity but a tool for manipulation; where algorithms dictate the opportunities available to you based on biased training data. It's a reality that many fear, and rightly so. The rapid advancement of AI technologies presents both incredible opportunities and daunting challenges. In this article, we will navigate through the intricate landscape of AI and ethics, exploring the vital importance of ethical considerations, the current guidelines in place, and the global perspectives that shape our understanding of responsible AI. Are we truly prepared to harness the power of AI while safeguarding our fundamental rights and values? Let’s dive in!

Understanding the significance of ethical AI is not just an academic exercise; it is a necessity for ensuring that technology serves humanity positively. Ethical considerations in AI development are crucial for several reasons. First and foremost, they help prevent harmful consequences that could arise from biased algorithms and decision-making processes. For instance, consider a hiring algorithm that favors certain demographics over others. This not only perpetuates existing inequalities but also undermines the principle of merit-based selection. By prioritizing ethical AI, we can create a framework that promotes fairness, transparency, and accountability in technology. Done well, this supports a more inclusive society in which everyone has a fair opportunity to thrive.

Currently, various ethical frameworks and guidelines govern AI development, each with its strengths and weaknesses. For example, organizations like the IEEE and the European Commission have proposed guidelines aimed at ensuring ethical AI practices. However, these guidelines often lack enforceability, leading to a gap between theory and practice. While some companies adopt these frameworks voluntarily, others may view them as mere suggestions rather than obligations. This inconsistency raises questions about the effectiveness of current measures in addressing the challenges posed by AI technologies. Are we merely scratching the surface, or are we genuinely committed to ethical innovation?

Examining how different countries and cultures approach AI ethics reveals a rich tapestry of perspectives that shape international standards and practices. For instance, in Europe, there is a strong emphasis on data protection and privacy, largely influenced by the General Data Protection Regulation (GDPR). In contrast, countries like China prioritize technological advancement and economic growth, often at the expense of individual rights. This divergence highlights the need for a more unified approach to AI ethics that respects cultural differences while promoting universal values. How can we foster a global dialogue that encourages ethical AI practices across borders?

Real-world examples of ethical AI practices can provide valuable insights into their potential for positive societal impact. One notable case is that of a healthcare startup that developed an AI system to assist in diagnosing diseases. By implementing rigorous ethical standards, including bias detection and patient consent protocols, the company was able to enhance the accuracy of its diagnoses while respecting patient privacy. Such case studies not only demonstrate the feasibility of ethical AI but also serve as a roadmap for other organizations looking to implement similar practices. What lessons can we learn from these pioneers in ethical AI?

Despite the clear benefits of ethical AI, organizations face several obstacles when trying to adopt these guidelines. Resistance to change is a significant barrier, as many companies are reluctant to alter established practices that have proven profitable. Additionally, a lack of awareness about the importance of ethical AI can lead to complacency, while resource constraints may hinder the implementation of comprehensive ethical frameworks. How can organizations overcome these challenges to create a culture that prioritizes ethical considerations in AI development?

The general public's perception of AI ethics plays a crucial role in shaping corporate and policy decisions. Concerns over privacy, bias, and accountability are prevalent, often fueled by sensationalized media coverage of AI failures. For instance, incidents involving biased facial recognition technology have sparked outrage and calls for stricter regulations. This public scrutiny can drive organizations to adopt more robust ethical practices, but it can also lead to fear-driven responses that stifle innovation. How can we strike a balance between public concern and the need for technological advancement?

Government regulation is increasingly seen as a necessary component in shaping ethical AI practices. Potential legislation could establish standards for transparency, accountability, and fairness in AI systems. However, finding the right balance between fostering innovation and protecting individual rights remains a complex challenge. Policymakers must navigate the fine line between creating a regulatory environment that encourages growth and one that curtails creativity. What role should governments play in ensuring ethical AI practices while still promoting technological advancement?

Looking ahead, several emerging trends are likely to influence the landscape of AI ethics. Advances in research areas such as explainable AI and machine learning fairness are paving the way for more transparent and accountable systems. Additionally, societal expectations are evolving, with the public increasingly demanding ethical considerations in technology deployment. As we move forward, the role of ethics in AI development will continue to evolve, necessitating ongoing dialogue and adaptation. Are we ready to embrace these changes and lead the charge for ethical AI?

Finally, collaboration among stakeholders—governments, businesses, and civil society—is essential for fostering a comprehensive approach to ethical AI practices. By working together, these entities can share knowledge, resources, and best practices, creating a more cohesive framework for ethical AI development. Initiatives like the Partnership on AI exemplify how collective efforts can drive meaningful change in the industry. How can we encourage more collaborative efforts to ensure that ethical AI becomes the norm rather than the exception?

- What is ethical AI? Ethical AI refers to the development and deployment of artificial intelligence technologies that prioritize fairness, accountability, and transparency, ensuring that they benefit society as a whole.

- Why is AI ethics important? AI ethics is crucial for preventing harmful consequences, promoting fairness, and ensuring that AI technologies do not perpetuate existing biases and inequalities.

- What are some current ethical guidelines for AI? Various organizations have proposed guidelines, including the IEEE and the European Commission, focusing on principles like transparency, accountability, and respect for privacy.

- How can organizations adopt ethical AI practices? Organizations can adopt ethical AI practices by raising awareness, providing training, and implementing comprehensive ethical frameworks that prioritize responsible innovation.

- What role do governments play in AI ethics? Governments can shape ethical AI practices through regulation, establishing standards for transparency and accountability while balancing the need for innovation.

The Importance of Ethical AI

In a world increasingly driven by technology, the significance of ethical AI cannot be overstated. As we integrate artificial intelligence into various aspects of our lives, from healthcare to finance and even our daily interactions, it's crucial to ask ourselves: Are we ensuring that these technologies are developed and deployed responsibly? The ethical implications of AI touch on fundamental human rights, privacy, and the social norms we rely on. Without a robust ethical framework, we risk creating systems that not only fail to serve humanity but potentially harm it.

Imagine a future where AI systems make decisions without any oversight. What happens when these systems are biased, or worse, when they perpetuate existing inequalities? This scenario is not far-fetched; it's already happening in some sectors. For instance, algorithms used in hiring processes have been shown to favor certain demographics over others, leading to discrimination. This highlights the need for ethical guidelines that ensure fairness, transparency, and accountability in AI applications.
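To make the hiring example concrete, one common way to check a screening model's outcomes is to compare selection rates across groups. The sketch below is a minimal illustration, assuming decisions are available as hypothetical `(group, was_selected)` pairs; it computes the ratio of the lowest to the highest selection rate and applies the "four-fifths" threshold from US employment-selection guidance as a rough screening heuristic. The data and function names are invented for illustration, and a low ratio is a signal for closer review, not proof of discrimination.

```python
from collections import Counter


def selection_rates(decisions):
    """Share of candidates selected in each group.

    decisions: iterable of (group, was_selected) pairs (hypothetical format).
    """
    totals, selected = Counter(), Counter()
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def impact_ratio(rates):
    """Lowest group selection rate divided by the highest."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Hypothetical screening outcomes for two groups of 100 applicants each
outcomes = (
    [("A", True)] * 40 + [("A", False)] * 60
    + [("B", True)] * 24 + [("B", False)] * 76
)
rates = selection_rates(outcomes)
ratio = impact_ratio(rates)
print(rates)            # {'A': 0.4, 'B': 0.24}
print(round(ratio, 2))  # 0.6
if ratio < 0.8:  # four-fifths heuristic, used here only as a review trigger
    print("Below four-fifths: review the model and its training data")
```

A check like this only looks at outcomes; it says nothing about why the rates differ, which is where human review of features and training data comes in.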

Moreover, ethical AI is about more than just preventing harm; it’s about leveraging technology to create positive outcomes. When ethical considerations are embedded in the development process, we pave the way for innovations that enhance our lives. Consider AI in healthcare, where ethical frameworks can guide the use of patient data, ensuring privacy while enabling breakthroughs in treatment. The potential for AI to revolutionize industries is immense, but it must be balanced with a commitment to ethical practices.

To illustrate the importance of ethical AI, let’s look at some key principles that should guide its development:

- Transparency: AI systems should be understandable and explainable. Users deserve to know how decisions are made.

- Accountability: There must be clear lines of responsibility for AI actions, ensuring that developers and organizations are held accountable.

- Fairness: AI should be designed to be inclusive and equitable, avoiding biases that can lead to discrimination.

- Privacy: Protecting user data is paramount. Ethical AI must prioritize data security and user consent.
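One way the privacy principle can show up in practice is at the data-preparation step. The sketch below assumes a hypothetical record layout (`email`, `age_band`, `outcome`, `consented_to_training`) and uses Python's standard `hmac` and `hashlib` modules to keep only consenting subjects, replace the direct identifier with a keyed pseudonym, and drop fields the model does not need. It illustrates consent filtering and data minimization only; pseudonymized data can still count as personal data under laws such as the GDPR, so this is not a complete privacy solution.

```python
import hashlib
import hmac


def prepare_training_records(records, secret_key):
    """Filter by consent, pseudonymize the identifier, and minimize fields.

    records: list of dicts in a hypothetical layout used only for illustration.
    secret_key: bytes; should be stored separately from the dataset.
    """
    prepared = []
    for rec in records:
        if not rec.get("consented_to_training", False):
            continue  # no recorded consent, so the record is not used
        # Keyed hash: stable per person, but not reversible without the key
        pseudonym = hmac.new(
            secret_key, rec["email"].encode("utf-8"), hashlib.sha256
        ).hexdigest()
        # Keep only the fields the model actually needs
        prepared.append(
            {"subject_id": pseudonym, "age_band": rec["age_band"], "outcome": rec["outcome"]}
        )
    return prepared


records = [
    {"email": "a@example.com", "age_band": "30-39", "outcome": 1, "consented_to_training": True},
    {"email": "b@example.com", "age_band": "40-49", "outcome": 0, "consented_to_training": False},
]
rows = prepare_training_records(records, secret_key=b"hypothetical-key-kept-elsewhere")
print(len(rows))           # 1
print("email" in rows[0])  # False
```

A keyed hash is used instead of a plain hash so that someone holding a list of email addresses cannot simply recompute the pseudonyms without also obtaining the key.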

In summary, the importance of ethical AI lies not just in preventing negative consequences but in actively fostering a more just and equitable society. As we navigate the complexities of AI technologies, we must remain vigilant and proactive in ensuring that ethical considerations are at the forefront of innovation. The question isn’t just whether we can create intelligent systems; it’s whether we can do so in a way that aligns with our values and serves the greater good.

Q: Why is ethical AI important?

A: Ethical AI is crucial because it ensures that technology serves humanity positively, preventing harm and fostering equitable outcomes.

Q: What are some key principles of ethical AI?

A: Key principles include transparency, accountability, fairness, and privacy, which guide the responsible development and deployment of AI technologies.

Q: How can organizations implement ethical AI practices?

A: Organizations can implement ethical AI practices by establishing clear guidelines, conducting regular audits, and fostering a culture of ethical awareness among employees.

Current Ethical Guidelines

In the rapidly evolving landscape of artificial intelligence, the establishment of ethical guidelines is more crucial than ever. These guidelines serve as a roadmap for developers and organizations, ensuring that AI technologies are created and deployed with a sense of responsibility. However, the current frameworks in place often face scrutiny regarding their effectiveness and comprehensiveness. It's essential to explore these ethical guidelines to understand their strengths and weaknesses in addressing the challenges posed by AI.

One of the most recognized frameworks is the IEEE Ethically Aligned Design, which emphasizes the importance of aligning AI systems with human values. This guideline encourages developers to consider the broader implications of their technologies, promoting a design philosophy that prioritizes human well-being. Yet, despite its comprehensive nature, critics argue that it lacks enforceability and practical application in real-world scenarios.

Another significant initiative is the EU's Ethics Guidelines for Trustworthy AI, which outlines key requirements for AI systems, such as accountability, transparency, and fairness. These guidelines aim to ensure that AI technologies are not only effective but also trustworthy. However, the challenge lies in the implementation of these principles. Many organizations struggle to translate these ethical ideals into actionable practices, leading to a gap between theory and reality.

To illustrate the current state of ethical guidelines, let's take a look at a table summarizing some of the prominent frameworks:

| Framework | Key Focus Areas | Strengths | Weaknesses |
|---|---|---|---|
| IEEE Ethically Aligned Design | Human values, ethical design | Comprehensive approach | Lacks enforceability |
| EU Ethics Guidelines for Trustworthy AI | Accountability, transparency, fairness | Clear principles | Implementation challenges |
| OECD Principles on AI | Inclusive growth, human-centered values | International consensus | General recommendations |
| Partnership on AI | Collaboration, best practices | Industry-wide engagement | Varied commitment levels |

As we can see, while there are various ethical guidelines in place, they often share common challenges. The lack of enforceability and the difficulty in implementing these guidelines in practice create a significant hurdle. Moreover, many organizations may not fully understand the implications of these guidelines, leading to a lack of adherence. This raises the question: are we doing enough to ensure that these ethical frameworks translate into meaningful action?

In conclusion, while the current ethical guidelines provide a foundation for responsible AI development, there remains a pressing need for more robust mechanisms to enforce these principles. The conversation around AI ethics is ongoing, and as technology continues to advance, so too must our commitment to ensuring that it serves humanity positively. The journey towards ethical AI is far from complete, and it's a collective responsibility to ensure that we navigate this path wisely.

Global Perspectives on AI Ethics

When we dive into the realm of AI ethics, it's like peering into a kaleidoscope of ideas and values shaped by diverse cultures and societies. Each country offers its own unique lens through which to view the ethical implications of artificial intelligence. For instance, in the United States, the focus often leans towards innovation and economic growth, sometimes at the expense of comprehensive ethical oversight. The Silicon Valley mantra "move fast and break things" captures this mindset, which can lead to AI technologies being deployed hastily, without full consideration of the potential consequences.

On the other hand, countries like Germany emphasize a more cautious approach, prioritizing privacy and data protection. The German approach is heavily influenced by historical contexts, particularly the need for strong safeguards against authoritarianism. This has resulted in strict regulations that govern how AI can be developed and deployed, ensuring that human rights are at the forefront of technological advancement.

Meanwhile, in China, the narrative is quite different. The Chinese government promotes AI development as a means to enhance national power and social stability. The ethical considerations here often revolve around the collective good, with less emphasis on individual privacy. This raises questions about the balance between societal benefits and individual rights, highlighting a significant divergence in global perspectives.

To illustrate these differences, let's take a closer look at some of the key elements that shape AI ethics across different regions:

| Country | Focus Areas | Ethical Concerns |
|---|---|---|
| United States | Innovation, Economic Growth | Privacy, Accountability |
| Germany | Privacy, Data Protection | Human Rights, Regulatory Compliance |
| China | National Power, Social Stability | Collective Good vs. Individual Rights |

In addition to these national approaches, supranational bodies like the European Union are working toward a common framework for AI ethics that can transcend borders. The EU's General Data Protection Regulation (GDPR) is a data protection law rather than an AI-specific one, but it already sets a baseline for how AI systems may process personal data, emphasizing transparency and user consent. However, the challenge lies in reconciling these varied perspectives into a coherent global strategy.

As we navigate these complex waters, it becomes increasingly clear that the conversation around AI ethics is not just a technical dialogue but a deeply human one. It invites us to reflect on our values, our priorities, and how we envision the future of technology in our lives. The question remains: can we find common ground amidst our differences, or will our divergent paths lead to a fragmented landscape of AI ethics that ultimately undermines the very goals we seek to achieve?

In conclusion, understanding these global perspectives on AI ethics is crucial for fostering a more inclusive and responsible approach to technology. By learning from each other, we can work towards a future where AI serves humanity as a whole, rather than a select few. As we continue to explore this dynamic field, let’s keep the dialogue open and collaborative, ensuring that the voices of diverse cultures and communities are heard and respected.

- What are the main ethical concerns surrounding AI? The primary concerns include privacy, bias, accountability, and the potential for misuse of AI technologies.

- How do different countries approach AI ethics? Countries like the US focus on innovation, Germany emphasizes privacy, while China prioritizes national power and social stability.

- Why is international collaboration important in AI ethics? It helps to create a unified framework that can address global challenges and ensure that AI benefits everyone, not just a select few.

Case Studies of Ethical AI Implementation

When we think about ethical AI implementation, real-world examples can illuminate the path forward. One notable case is IBM Watson, which has been used in healthcare to assist doctors in diagnosing diseases. By analyzing vast amounts of medical data, Watson provides recommendations intended to improve patient outcomes. What sets this case apart is IBM's stated commitment to transparency: the company has emphasized making the system's reasoning explainable, so that medical professionals can understand how its recommendations are reached. This transparency builds trust among healthcare providers and empowers patients to become active participants in their own care.

Another compelling example comes from Google's AI for Social Good initiative. This program focuses on leveraging AI to tackle pressing social issues, such as environmental challenges and disaster response. For instance, Google has developed AI models that forecast floods, giving communities more time to prepare and respond. The ethical implications here are profound; by prioritizing social good, Google showcases how technology can be harnessed to create a positive societal impact. Yet it also faces the challenge of ensuring that its AI tools do not inadvertently perpetuate biases, which requires ongoing scrutiny and adjustment.

In the realm of finance, Mastercard has implemented ethical AI practices to combat fraud. Using machine learning algorithms, they analyze transaction patterns to detect anomalies that may indicate fraudulent activity. What’s noteworthy is their approach to inclusivity; they have developed systems that consider the unique transaction behaviors of different demographics. This not only enhances security but also ensures that no group is unfairly targeted or misrepresented. By incorporating diverse data sets, Mastercard is taking steps to mitigate bias and promote fairness in their AI systems.
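The article does not describe how Mastercard's system works, so the sketch below is only a generic illustration of judging a transaction against a cardholder's own behavior rather than against one global rule. It assumes a hypothetical list of past transaction amounts per cardholder and flags a new amount whose z-score against that history exceeds an arbitrary threshold of 3; the function name, threshold, and data are all invented for illustration.

```python
from statistics import mean, stdev


def is_anomalous(history, amount, threshold=3.0):
    """Flag `amount` if it lies more than `threshold` standard deviations
    from this cardholder's own past amounts (hypothetical rule)."""
    if len(history) < 2:
        return False  # too little history to judge; defer to other checks
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return amount != mu  # every past amount identical; any change stands out
    return abs(amount - mu) / sigma > threshold


# Hypothetical histories for two cardholders with different spending habits
frequent_small = [12.0, 15.5, 9.8, 14.2, 11.0, 13.7]
occasional_large = [420.0, 380.0, 455.0, 400.0, 390.0]

print(is_anomalous(frequent_small, 400.0))    # True
print(is_anomalous(occasional_large, 400.0))  # False
```

A single global cutoff on amount would flag the second cardholder's ordinary purchases while treating the same purchase on the first card no differently; per-customer baselines are one simple way to avoid penalizing people whose normal spending differs from the majority. Production fraud systems use far richer features and models than this.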

However, the journey toward ethical AI is fraught with challenges. For example, Amazon's facial recognition service, Rekognition, raises significant ethical questions. While the technology can enhance security, it has been criticized for its potential to misidentify individuals, particularly people of color. This has sparked debates about privacy and civil liberties, highlighting the importance of rigorous ethical standards. The backlash against such technologies emphasizes the need for companies to engage with communities and stakeholders to address concerns proactively.
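Criticism of facial recognition accuracy typically rests on disaggregated evaluation: measuring error rates separately for each demographic group instead of reporting one overall figure. The sketch below assumes hypothetical test trials of the form `(group, is_same_person, system_said_match)` and computes the false match rate (the share of different-person pairs the system wrongly declares a match) per group and overall. The groups and numbers are invented to show how a modest overall rate can hide a much higher rate for one group.

```python
from collections import defaultdict


def false_match_rates(trials):
    """Per-group and overall false match rate.

    trials: iterable of (group, is_same_person, system_said_match).
    Only different-person ("impostor") pairs can produce a false match.
    """
    impostor_pairs = defaultdict(int)
    false_matches = defaultdict(int)
    for group, is_same_person, system_said_match in trials:
        if is_same_person:
            continue  # genuine pairs matter for false non-matches, not counted here
        impostor_pairs[group] += 1
        if system_said_match:
            false_matches[group] += 1
    per_group = {g: false_matches[g] / impostor_pairs[g] for g in impostor_pairs}
    overall = sum(false_matches.values()) / sum(impostor_pairs.values())
    return per_group, overall


# Hypothetical evaluation: 1,000 impostor pairs per group
trials = (
    [("group_a", False, True)] * 2 + [("group_a", False, False)] * 998
    + [("group_b", False, True)] * 10 + [("group_b", False, False)] * 990
)
per_group, overall = false_match_rates(trials)
print(per_group)  # {'group_a': 0.002, 'group_b': 0.01}
print(overall)    # 0.006
```

Here the overall rate looks low, yet one group is misidentified five times as often as the other, which is exactly the kind of gap an aggregate accuracy number can conceal.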

As we analyze these case studies, it becomes evident that ethical AI implementation is not just about adhering to guidelines; it’s about fostering a culture of responsibility and accountability. Companies must not only innovate but also consider the ethical implications of their technologies on society. This requires collaboration across various sectors, including government, academia, and civil society. By sharing knowledge and resources, stakeholders can create a more equitable and ethical landscape for AI development.

- What is ethical AI? Ethical AI refers to the development and deployment of artificial intelligence technologies that prioritize fairness, accountability, transparency, and respect for human rights.

- Why are case studies important in understanding ethical AI? Case studies provide tangible examples of how ethical principles can be applied in real-world situations, showcasing both successes and challenges.

- How can organizations ensure they are implementing ethical AI? Organizations can adopt ethical guidelines, engage with diverse stakeholders, and conduct regular audits of their AI systems to ensure fairness and transparency.

- What role does public perception play in AI ethics? Public perception can significantly influence policy decisions and corporate practices, making it essential for organizations to address ethical concerns transparently.

Challenges in Adopting Ethical Guidelines

Adopting ethical guidelines in the realm of artificial intelligence (AI) is a noble endeavor, yet it is fraught with numerous challenges. Organizations often find themselves at a crossroads, where the desire to innovate clashes with the need for ethical responsibility. One of the most significant hurdles is the resistance to change. Many companies have established practices that prioritize efficiency and profit over ethical considerations. Shifting this mindset requires not only a change in policy but also a cultural transformation within the organization.

Furthermore, there is a pervasive lack of awareness regarding what ethical AI truly entails. Many stakeholders, from developers to executives, may not fully understand the implications of their technologies on society. This gap in understanding can lead to the implementation of AI systems that inadvertently perpetuate biases or infringe on privacy rights. To combat this, comprehensive training programs must be developed to educate all levels of an organization about the ethical dimensions of AI.

In addition to these issues, resource constraints pose a significant barrier. Smaller organizations, in particular, may struggle to allocate the necessary funds and personnel to establish and maintain ethical guidelines. This can create a disparity between larger corporations, which often have dedicated teams focused on ethics, and smaller entities that lack such resources. As a result, the ethical landscape of AI can become uneven, with some companies leading the charge while others lag behind.

Moreover, the rapid pace of AI development can make it difficult for ethical guidelines to keep up. As new technologies emerge, existing frameworks may become outdated, leaving organizations unsure of how to proceed. This creates a vicious cycle where the absence of clear, relevant guidelines leads to hesitancy in adoption, further delaying the progress toward ethical AI.

In summary, while the adoption of ethical guidelines for AI is crucial, it is not without its challenges. Organizations must navigate four main obstacles:

- Resistance to change

- Lack of awareness

- Resource constraints

- Rapid technological advancements

Q: What are the main challenges in adopting ethical AI guidelines?

A: The main challenges include resistance to change, lack of awareness among stakeholders, resource constraints, and the rapid pace of technological advancements that can outstrip existing ethical frameworks.

Q: Why is it important to have ethical guidelines for AI?

A: Ethical guidelines are crucial to ensure that AI technologies are developed and used responsibly, minimizing the risk of harm and promoting fairness, accountability, and transparency in AI systems.

Q: How can organizations overcome resistance to change regarding ethical AI?

A: Organizations can overcome resistance by fostering a culture of ethics, providing training and education on ethical AI, and involving all stakeholders in the process of developing and implementing ethical guidelines.

Public Perception of AI Ethics

The public's perception of AI ethics is a complex tapestry woven from various threads of experience, knowledge, and concern. As artificial intelligence becomes increasingly embedded in our daily lives, people are rightfully questioning the implications of these technologies. Have you ever wondered how much of your personal data is being used to train AI algorithms? Or how decisions made by AI systems could impact your life? These questions are not just academic; they resonate deeply with individuals who feel the effects of technology on their privacy and autonomy.

Many individuals express a sense of unease regarding the ethical dimensions of AI. According to recent surveys, a significant portion of the population is concerned about issues like bias in AI algorithms, which can lead to unfair treatment of certain groups. For instance, if an AI system is trained on biased data, it may perpetuate existing inequalities, leading to outcomes that disadvantage marginalized communities. This fear is not unfounded; there have been numerous instances where AI systems have demonstrated bias, raising alarms about their deployment in critical areas such as hiring, law enforcement, and healthcare.

Moreover, the issue of accountability looms large in the public's mind. When an AI system makes a mistake—like misidentifying a person in a facial recognition system—who is held responsible? Is it the developers, the companies, or the technology itself? These questions highlight a significant gap in understanding and trust between the public and AI developers. Many individuals feel that there is a lack of transparency surrounding how AI systems operate and make decisions, which only fuels their skepticism.

Interestingly, the level of awareness about AI ethics varies widely among different demographics. Younger generations, who are more tech-savvy and engaged with digital platforms, tend to be more vocal about their concerns. They often advocate for stronger regulations and ethical guidelines to ensure that AI technologies are developed and used responsibly. Older generations, on the other hand, may be less familiar with how AI works, which can lead to a more passive acceptance of its integration into society. This generational divide underscores the need for comprehensive education on AI and its ethical considerations.

To address these concerns, it is essential for organizations and policymakers to engage with the public actively. This could involve initiatives such as community forums, educational campaigns, and transparent reporting on AI practices. By fostering an open dialogue, we can bridge the gap between technology developers and the public, creating a more informed society that can advocate for ethical AI practices. After all, the future of AI should not just be in the hands of a few; it should reflect the values and concerns of the many.

As we navigate this evolving landscape, it is crucial to recognize that public perception can significantly influence policy decisions and corporate strategies. Companies that prioritize ethical considerations in their AI development are likely to gain public trust and loyalty. Conversely, those that neglect these issues may face backlash and reputational damage. In this way, the conversation around AI ethics is not just about technology; it is about building a future that is equitable, transparent, and beneficial for all.

- What are the main concerns regarding AI ethics? The main concerns include bias in algorithms, accountability for AI decisions, and the transparency of AI systems.

- How can the public influence AI ethics? The public can influence AI ethics by advocating for stronger regulations, participating in discussions, and demanding transparency from companies.

- Why is public perception important for AI ethics? Public perception is crucial because it can shape policy decisions and corporate strategies, ensuring that AI technologies are developed responsibly.

Regulatory Approaches to AI Ethics

The intersection of artificial intelligence (AI) and ethics has prompted a growing need for regulatory frameworks that can guide the development and deployment of these powerful technologies. As AI continues to permeate various sectors, from healthcare to finance, the question arises: how can we ensure that these innovations are not only effective but also ethically sound? The answer lies in establishing a robust regulatory landscape that balances innovation with protection.

Governments around the world are beginning to recognize the importance of regulating AI ethics. Various countries are drafting legislation aimed at addressing ethical concerns related to AI, such as bias, transparency, and accountability. For instance, the European Union's AI Act takes a risk-based approach: AI systems classified as high-risk must meet stringent requirements, such as risk management, technical documentation, and human oversight, so that they do not harm individuals or society at large. These requirements are designed to foster trust in AI by obliging companies to assess the risks of their systems and to be transparent about how those systems work.

However, regulatory approaches to AI ethics are not without challenges. One significant hurdle is the rapid pace of technological advancement. By the time a regulatory framework is established, the technology may have already evolved, leaving regulations outdated and ineffective. This creates a pressing need for adaptive regulations that can evolve alongside AI technologies. Furthermore, there is a risk that overly stringent regulations could stifle innovation, making it essential to find a balance that encourages development while safeguarding ethical standards.

Moreover, the global nature of AI technology complicates regulatory efforts. Different countries have varying cultural values and ethical standards, leading to a patchwork of regulations that can create confusion for multinational companies. For example, while the EU focuses on privacy and data protection, other regions may prioritize economic growth and innovation. This divergence can hinder the establishment of universal ethical standards for AI, making collaborative international efforts crucial.

To address these challenges, many experts advocate for a multi-stakeholder approach to AI regulation. This involves collaboration among governments, industry leaders, academia, and civil society to create comprehensive guidelines that reflect diverse perspectives. Such collaboration can help ensure that regulatory frameworks are not only effective but also inclusive, taking into account the needs and concerns of various stakeholders.

In addition to governmental regulations, self-regulation within the tech industry is gaining traction. Many companies are recognizing the importance of ethical AI and are proactively developing internal guidelines and ethical review boards. This self-regulatory approach can complement governmental efforts by fostering a culture of accountability and responsibility within organizations. However, it is crucial to ensure that these self-regulatory measures are transparent and subject to external scrutiny to maintain public trust.

Ultimately, the goal of regulatory approaches to AI ethics should be to create an environment where innovation can thrive while ensuring that ethical considerations are at the forefront of AI development. By fostering collaboration and adaptability in regulatory measures, we can navigate the complexities of AI technology and build a future that benefits everyone.

- What are the main ethical concerns regarding AI? The primary concerns include bias, privacy, accountability, and transparency in AI systems.

- How can regulations help improve AI ethics? Regulations can set standards for ethical practices, promote transparency, and ensure accountability in AI development.

- Are there any existing AI regulations? Yes. The European Union's AI Act, for example, sets binding requirements for high-risk AI applications, and other jurisdictions are drafting their own rules.

- What role does self-regulation play in AI ethics? Self-regulation allows companies to develop their own ethical guidelines, which can complement governmental regulations and promote a culture of responsibility.

Future Trends in AI Ethics

The landscape of artificial intelligence is evolving at an unprecedented pace, and with it, the ethical considerations surrounding its development and deployment are becoming increasingly complex. As we look to the future, several trends are emerging that will shape the ethical framework within which AI operates. One of the most significant trends is the growing emphasis on transparency. Developers and organizations are recognizing that for AI systems to be trusted, they must be able to explain their decision-making processes. This means moving towards more interpretable AI models, where users can understand how and why a decision was made. Imagine a world where AI isn't just a black box but a transparent system that communicates its reasoning clearly—this is the future we are striving for.

Another important trend is the rise of collaborative governance in AI ethics. Stakeholders from various sectors, including governments, tech companies, and civil society, are beginning to work together to establish comprehensive ethical guidelines. This collaboration is essential because it brings diverse perspectives to the table, ensuring that the ethical standards developed are inclusive and representative of the broader society. For instance, initiatives like the Partnership on AI are paving the way for collective action, fostering discussions that lead to actionable guidelines and policies.

Furthermore, as AI systems become more integrated into our daily lives, there is an increasing focus on accountability. Who is responsible when an AI system makes a mistake? This question is at the forefront of discussions about AI ethics. As we advance, we can expect to see new regulations and frameworks that clarify accountability in AI applications. This might include defining liability in cases of harm caused by AI, ensuring that there are clear channels for redress when things go wrong.

Finally, the concept of ethical AI design is gaining traction. This involves embedding ethical considerations into the design phase of AI development, rather than addressing them as an afterthought. By integrating ethics from the outset, developers can create systems that align more closely with societal values and norms. This proactive approach can help mitigate issues like bias and discrimination, which have plagued many AI systems in the past.

In summary, the future of AI ethics is likely to be characterized by a focus on transparency, collaborative governance, accountability, and ethical design. As these trends unfold, they hold the promise of creating a more responsible and trustworthy AI landscape that benefits everyone. However, it’s essential for all stakeholders to remain vigilant and engaged, ensuring that ethical considerations keep pace with technological advancements.

- What is the importance of transparency in AI? Transparency helps users understand AI decision-making, fostering trust and accountability.

- How can collaboration improve AI ethics? Collaborative governance ensures diverse perspectives are considered, leading to more inclusive ethical standards.

- What does accountability mean in the context of AI? Accountability refers to defining who is responsible when AI systems cause harm or make mistakes.

- Why is ethical design crucial for AI? Ethical design integrates values and norms into the development process, reducing the risk of bias and discrimination.

Collaborative Efforts for Ethical AI

In the rapidly evolving landscape of artificial intelligence, the need for collaboration among various stakeholders has never been more critical. As we navigate the complex ethical challenges posed by AI technologies, it becomes increasingly clear that no single entity can tackle these issues alone. Governments, businesses, academia, and civil society must come together to forge a path towards ethical AI that benefits everyone. Imagine trying to build a bridge with only one set of tools; it simply wouldn't work. Similarly, a collective effort is essential for constructing a robust framework to govern AI responsibly.

One of the primary reasons for this collaborative approach is the diversity of perspectives that different stakeholders bring to the table. For instance, tech companies may focus on innovation and market competitiveness, while governments might emphasize regulation and public safety. Meanwhile, academic institutions can contribute research and theoretical frameworks, and civil society organizations can offer insights into public concerns and ethical implications. By pooling these resources and viewpoints, we can create a more comprehensive understanding of the ethical landscape surrounding AI.

Moreover, collaborative efforts can lead to shared ethical standards that gain broad international acceptance. This is particularly important in a globalized world where AI technologies cross borders and cultures. For instance, the European Union has been proactive in developing ethical guidelines for AI, which can serve as a model for other regions. Through dialogue and partnership, countries can harmonize their approaches to AI ethics and reduce the risk of regulatory fragmentation that could stifle innovation.

To illustrate the impact of collaborative efforts, consider the following table that highlights successful initiatives aimed at promoting ethical AI:

| Initiative | Description | Stakeholders Involved |
|---|---|---|
| Partnership on AI | A consortium of tech companies, academia, and civil society focused on advancing the understanding and adoption of AI in a responsible manner. | Google, Facebook, Microsoft, universities, NGOs |
| AI Ethics Guidelines by the EU | A comprehensive set of guidelines aimed at ensuring AI development aligns with European values and fundamental rights. | European Commission, member states, industry experts |
| Global Partnership on AI | An initiative aimed at promoting the responsible use of AI globally, with a focus on inclusive development. | Governments, international organizations, private sector |

However, while these initiatives are promising, challenges remain. Many organizations face barriers such as resource constraints, a lack of awareness about ethical AI, and resistance to change. Overcoming these obstacles requires not just commitment but also a willingness to engage in open dialogue and share resources. For instance, workshops and training sessions can be organized to educate stakeholders about the importance of ethical AI practices.

In conclusion, the journey towards ethical AI is not a solitary one. It demands a concerted effort from all sectors of society. By fostering collaboration, we can not only address the ethical dilemmas posed by AI but also ensure that these technologies are developed and deployed in ways that enhance human welfare and societal good. The road ahead may be filled with challenges, but together, we can navigate it more effectively.

- What is ethical AI? Ethical AI refers to the development and implementation of artificial intelligence technologies in a manner that respects human rights, promotes fairness, and avoids harm.

- Why is collaboration important in AI ethics? Collaboration brings together diverse perspectives and expertise, which is crucial for creating comprehensive ethical standards and addressing the multifaceted challenges posed by AI.

- What are some examples of collaborative efforts in AI ethics? Initiatives like the Partnership on AI and the Global Partnership on AI exemplify how different stakeholders can work together to promote responsible AI use.

- What challenges do organizations face in adopting ethical AI? Common challenges include resource limitations, lack of awareness, and resistance to change within the organization.

Frequently Asked Questions

- What is the significance of ethical AI?

Ethical AI is crucial because it ensures that artificial intelligence technologies are developed and used in ways that are beneficial to society. It helps to prevent harm, promotes fairness, and fosters trust among users. Without ethical considerations, AI can lead to unintended consequences, such as discrimination or privacy violations.

- What are the current ethical guidelines for AI development?

There are several existing ethical frameworks guiding AI development, such as the EU's Ethics Guidelines for Trustworthy AI and the IEEE's Ethically Aligned Design. While these guidelines promote transparency, accountability, and fairness, they often face challenges in implementation and may lack enforcement mechanisms, leaving room for improvement.

- How do different cultures approach AI ethics?

AI ethics varies significantly across cultures. For instance, Western nations often emphasize individual rights and privacy, while some Eastern cultures may focus more on collective welfare and social harmony. This diversity shapes international standards and practices, highlighting the need for a global dialogue on ethical AI.

- Can you provide examples of ethical AI in action?

Absolutely! One notable example is the use of AI in healthcare, where algorithms are designed to assist doctors in diagnosing diseases while ensuring patient data privacy. Another example is AI-driven hiring tools that actively mitigate bias, promoting diversity in recruitment processes. These cases illustrate how ethical AI can lead to positive societal impacts.

- What challenges do organizations face in adopting ethical AI guidelines?

Organizations often encounter several obstacles when trying to implement ethical AI guidelines. These include resistance to change from employees, a lack of awareness about ethical issues, and limited resources to invest in training and compliance. Overcoming these challenges requires commitment and a cultural shift within the organization.

- How does the public perceive AI ethics?

The general public has growing concerns about AI ethics, particularly regarding issues like privacy, bias, and accountability. These concerns can significantly influence policy-making and corporate practices, as companies must address public fears to maintain trust and credibility in their AI systems.

- What role does government regulation play in AI ethics?

Government regulation is pivotal in shaping ethical AI practices. It can provide a framework for accountability and set standards that promote responsible innovation. However, regulators must strike a balance between fostering innovation and protecting citizens from potential risks associated with AI technologies.

- What are the future trends in AI ethics?

Key trends include a push for more transparent and interpretable AI systems, collaborative governance among governments, industry, and civil society, clearer rules on accountability and liability, and ethical considerations built into the design process from the outset. As AI continues to integrate into various aspects of life, these considerations will play an increasingly vital role in development and deployment.

- How can stakeholders collaborate for ethical AI?

Collaboration among stakeholders—governments, businesses, and civil society—is essential for fostering ethical AI practices. By sharing knowledge, resources, and best practices, these groups can create a comprehensive approach to address ethical challenges and ensure that AI technologies are developed responsibly and equitably.