Balancing Technology and Morality: The Case of AI Ethics

In our rapidly evolving digital landscape, the emergence of artificial intelligence (AI) has ignited a profound dialogue about the ethical implications of technology. Imagine a world where machines not only assist us but also make decisions that impact our lives. This scenario raises critical questions: Who is responsible when AI makes a mistake? How do we ensure that these intelligent systems act in ways that align with our values? As we delve into the world of AI ethics, it becomes clear that we must strike a delicate balance between technological innovation and moral responsibility.

AI has the potential to revolutionize industries, enhance productivity, and improve our quality of life. However, with great power comes great responsibility. The ethical considerations surrounding AI are not just academic; they are fundamental to ensuring that technology serves humanity rather than undermines it. By understanding and addressing the ethical dilemmas posed by AI, we can harness its capabilities while safeguarding our societal values.

At the heart of AI ethics lies the imperative to respect human rights and promote societal well-being. As we integrate AI into our daily lives, we must ask ourselves: Are we prioritizing fairness, accountability, and transparency in our technological advancements? The answers to these questions will shape the future of AI and its role in society. Just as a compass guides a ship through treacherous waters, ethical principles can steer AI development toward a more just and equitable future.

As we navigate this complex landscape, it’s crucial to engage in conversations about the responsibilities of developers, organizations, and governments. The stakes are high, and the consequences of neglecting ethical considerations can be dire. From biased algorithms that perpetuate discrimination to opaque decision-making processes that erode trust, the challenges we face are multifaceted. Thus, it is imperative to foster a culture of ethical awareness and proactive engagement in AI development.

Ultimately, the case for AI ethics is not just about preventing harm; it’s about creating a future where technology enhances our lives while upholding our values. As we continue to explore the intersection of technology and morality, let us commit to ensuring that AI serves as a force for good, promoting fairness, accountability, and transparency in all its applications.

Understanding AI ethics is crucial as it guides the development and implementation of technology in a way that respects human rights and promotes societal well-being. In a world where AI systems can influence everything from hiring decisions to criminal justice, the need for ethical frameworks has never been more pressing. We must recognize that technology does not exist in a vacuum; it is deeply intertwined with societal norms and values.

Consider the implications of deploying AI in sensitive areas such as healthcare or law enforcement. A misstep could lead to unjust outcomes, reinforcing existing inequalities. Therefore, it is essential to establish ethical guidelines that prioritize the welfare of individuals and communities. By doing so, we can create AI systems that not only perform efficiently but also uphold the dignity and rights of all people.

Fundamental ethical principles such as fairness, accountability, transparency, and privacy should govern AI systems to ensure they operate justly. Each of these principles serves as a pillar for building trust in AI technologies, enabling us to navigate the complex moral landscape they inhabit.

Fairness in AI involves eliminating biases in algorithms to promote equal treatment across different demographics, ensuring that technology benefits all individuals without discrimination. In a world where AI systems are increasingly used to make critical decisions, from loan approvals to job selections, ensuring fairness is paramount.

Data bias can lead to unfair AI outcomes; thus, it is essential to identify and mitigate biases in training datasets used for machine learning models. If the data fed into these systems reflects historical prejudices, the AI is likely to perpetuate them. This is akin to trying to bake a cake with spoiled ingredients: no matter how skilled the baker, the end result will be flawed.

Designing algorithms that prioritize fairness requires a collaborative approach, involving diverse stakeholders to ensure a wide range of perspectives is considered. Engaging with communities affected by AI decisions is crucial for creating systems that truly reflect our societal values.

Establishing accountability in AI systems is vital for ensuring that developers and organizations are held responsible for the outcomes of their technologies. Just as we hold individuals accountable for their actions, we must extend this principle to the creators of AI systems. Clear lines of accountability can help mitigate the risks associated with AI deployment and foster a culture of responsibility.

Regulations are necessary to enforce ethical standards in AI development, providing guidelines that help organizations navigate the complex moral landscape. Without these regulations, the rapid pace of technological advancement may outstrip our ability to manage its consequences effectively.

Creating global standards for AI ethics fosters international collaboration and consistency, ensuring that ethical considerations are prioritized across borders. In an interconnected world, a unified approach to AI ethics can help prevent a race to the bottom where countries compromise on ethical standards for competitive advantage.

Implementing effective regulations poses challenges, including balancing innovation with ethical oversight and addressing the fast-paced nature of technological advancements. Striking this balance is akin to walking a tightrope; too much regulation can stifle innovation, while too little can lead to societal harm. Therefore, it is essential to engage in ongoing dialogue among stakeholders to refine and adapt regulations as technology evolves.

- What is AI ethics? AI ethics refers to the moral principles guiding the development and use of artificial intelligence technologies.

- Why is fairness important in AI? Fairness ensures that AI systems do not perpetuate existing biases and that they provide equal treatment to all individuals.

- How can we ensure accountability in AI? Establishing clear lines of responsibility for AI developers and organizations is crucial for accountability.

- What role do regulations play in AI ethics? Regulations help enforce ethical standards and provide guidelines for responsible AI development.

The Importance of AI Ethics

Understanding AI ethics is crucial in today’s rapidly evolving technological landscape. As artificial intelligence becomes increasingly integrated into our daily lives, the way we develop and implement these technologies must align with ethical considerations. Why is this so important? Well, think about it: AI systems have the potential to influence everything from healthcare decisions to hiring practices. If we don't prioritize ethics, we risk creating a world where technology exacerbates inequalities rather than alleviating them.

At its core, AI ethics serves as a guiding framework that ensures technology respects human rights and promotes societal well-being. By embedding ethical principles into AI development, we can ensure that these systems are designed with the intent to benefit humanity rather than harm it. This is not merely a theoretical concern; the real-world implications of unethical AI can lead to significant harm, including privacy violations, biased decision-making, and even physical harm in cases where AI controls critical infrastructure.

Moreover, the importance of AI ethics extends beyond individual rights. It also encompasses the broader societal impact. For instance, consider how AI can be used in law enforcement or social services. If these systems are not held to ethical standards, they could perpetuate systemic biases, leading to unfair treatment of marginalized communities. This is why a strong ethical foundation is not just a nice-to-have; it’s an absolute necessity.

Incorporating ethics into AI development requires a multi-faceted approach. It involves not only the developers and engineers who create these systems but also stakeholders from various sectors, including government, academia, and civil society. By fostering a collaborative environment, we can ensure that diverse perspectives are considered, leading to more equitable outcomes. The challenge lies in creating a culture that values ethical considerations as much as technical prowess.

To illustrate the significance of AI ethics, consider the following key points:

- Human-Centric Design: AI systems should prioritize the needs and rights of individuals, ensuring that technology serves humanity.

- Long-Term Impact: Ethical considerations should account for the long-term consequences of AI deployment, not just immediate benefits.

- Public Trust: Establishing ethical standards helps build public trust in AI technologies, which is crucial for their widespread adoption.

In conclusion, the importance of AI ethics cannot be overstated. It is essential for guiding the responsible development and implementation of artificial intelligence technologies. By prioritizing ethics, we can create a future where technology enhances human well-being, promotes fairness, and safeguards our fundamental rights.

Key Ethical Principles in AI

As we navigate the rapidly evolving landscape of artificial intelligence, understanding the key ethical principles that govern its development is more important than ever. These principles serve as a compass, guiding developers, organizations, and policymakers in creating AI systems that not only advance technology but also uphold the values that define our society. Among these principles, fairness, accountability, transparency, and privacy stand out as fundamental pillars that ensure AI operates justly and ethically.

Fairness is perhaps the most discussed ethical principle in AI. It emphasizes the necessity of eliminating biases that can creep into algorithms, which can lead to discriminatory outcomes. Imagine a world where an AI system decides who gets a loan based on biased historical data; this could perpetuate inequality and injustice. To combat this, developers must actively work to identify and mitigate biases in the data used to train their models. This is not just a technical challenge but a moral imperative.

Next, we have accountability. In a world where AI systems make decisions that can significantly impact people's lives, it is crucial to establish clear lines of responsibility. Who is to blame if an AI system makes a mistake? Is it the developer, the organization, or the AI itself? Without accountability, there can be no trust in AI technologies. Developers and organizations must be held responsible for the outcomes of their systems, ensuring that there are mechanisms in place for redress when things go wrong.

Transparency is another critical ethical principle. It refers to the need for AI systems to be understandable and explainable. Users have a right to know how decisions are made, especially when those decisions affect their lives. For instance, if an AI system rejects someone's job application, that individual deserves to understand the reasoning behind that decision. By fostering transparency, we empower users and build trust in AI technologies. This can be achieved through clear documentation, user-friendly interfaces, and open communication about how algorithms function.
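To make this concrete, here is a minimal Python sketch of one simple form of explanation. It assumes a purely additive (linear) scoring model, where each feature's contribution is just its weight times its value, so the contributions can be reported alongside the decision. The feature names, weights, bias, and threshold are hypothetical and chosen only for illustration; real screening models are rarely this simple, and more complex models need dedicated explanation techniques.

```python
def decide_and_explain(weights, bias, applicant, threshold):
    """Score an applicant with a linear model and explain the result.

    Returns the decision, the score, and per-feature contributions sorted
    by absolute impact (largest first). Only valid for additive models.
    """
    # Each feature contributes weight * value to the final score
    contributions = {name: weights[name] * applicant[name] for name in weights}
    score = bias + sum(contributions.values())
    decision = "advance" if score >= threshold else "reject"
    # Rank factors by how strongly they moved the score, in either direction
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return decision, score, ranked


# Hypothetical model and applicant, for illustration only
weights = {"years_experience": 0.5, "skills_match": 2.0, "employment_gap_years": -0.75}
applicant = {"years_experience": 4, "skills_match": 0.5, "employment_gap_years": 2}

decision, score, ranked = decide_and_explain(weights, bias=-1.0, applicant=applicant, threshold=1.0)
print(f"Decision: {decision} (score {score:.2f}, threshold 1.00)")
for name, contribution in ranked:
    print(f"  {name}: {contribution:+.2f}")
```

With these numbers the applicant is rejected (score 0.50 against a threshold of 1.00), and the explanation shows that the employment-gap penalty of -1.50 is what pushed the score below the line. Surfacing a factor like this also lets a reviewer ask whether it is a fair criterion in the first place.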

Finally, we must consider privacy. In an age where data is the new gold, protecting individuals' privacy is paramount. AI systems often rely on vast amounts of personal data to function effectively, but this raises questions about consent and data security. Organizations must implement robust data protection measures and ensure that users are informed about how their data is being used. This not only protects individuals but also enhances public trust in AI technologies.
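Two common data protection measures are data minimization (keeping only the fields a task actually needs) and pseudonymization (replacing direct identifiers such as email addresses with tokens before the data reaches a model or analyst). The Python sketch below combines both, using a keyed hash (HMAC-SHA256 from the standard library `hmac` module). The field names and the allowed-field policy are hypothetical, and key management is deliberately simplified. Under laws such as the GDPR, pseudonymized data can still count as personal data, so this reduces risk rather than removing it.

```python
import hashlib
import hmac
import secrets


def pseudonymize(identifier: str, key: bytes) -> str:
    """Replace a direct identifier with a keyed hash (HMAC-SHA256).

    The same identifier and key always yield the same token, so records can
    still be linked, but without the key nobody can recompute tokens from
    guessed identifiers.
    """
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize(record: dict, allowed_fields: set) -> dict:
    """Keep only the fields a given task actually needs (data minimization)."""
    return {k: v for k, v in record.items() if k in allowed_fields}


# In practice the key would live in a secrets manager, stored apart from the data
key = secrets.token_bytes(32)

raw = {"email": "jane@example.com", "full_name": "Jane Doe", "age_band": "30-39", "postcode": "12345"}
# Hypothetical policy: the model only needs an age band and a linkable ID
prepared = minimize(raw, {"email", "age_band"})
prepared["user_id"] = pseudonymize(prepared.pop("email"), key)
print(prepared)
```

The name and postcode never leave the source system, and the email address is replaced by a 64-character token that stays stable across records as long as the same key is used.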

In summary, the key ethical principles of fairness, accountability, transparency, and privacy are essential for guiding the development of AI technologies. These principles should not be viewed as mere guidelines but as foundational elements that shape the future of AI. By adhering to these ethical standards, we can harness the power of AI while safeguarding human rights and promoting societal well-being.

- What is AI ethics? AI ethics refers to the moral principles that guide the development and implementation of artificial intelligence technologies.

- Why is fairness important in AI? Fairness is crucial to ensure that AI systems do not perpetuate existing biases and discrimination, promoting equal treatment for all individuals.

- How can accountability be established in AI? Accountability can be established through clear regulations, documentation, and mechanisms that hold developers and organizations responsible for their AI systems.

- What role does transparency play in AI? Transparency helps users understand how AI systems make decisions, fostering trust and enabling informed choices.

- Why is privacy a concern for AI? Privacy is a concern because AI systems often require large amounts of personal data, raising issues related to consent and data security.

Fairness in AI

When we talk about fairness in AI, we're diving into a deep and complex ocean of ethical responsibility. Imagine a world where technology is not just a tool but a companion that treats everyone equally, regardless of their background. This is the essence of fairness in artificial intelligence. It's about eliminating biases that can creep into algorithms, often unnoticed, and ensuring that all individuals are treated with respect and equality. Think of it like a referee in a sports game: if the referee is biased, the game becomes unfair. Similarly, if AI systems are biased, the outcomes can lead to significant societal issues.

One of the most pressing challenges in achieving fairness is addressing bias in data. Data is the lifeblood of AI; it feeds the algorithms and guides their decisions. However, if the data itself is flawed or biased, the AI will inevitably produce skewed results. For instance, if a hiring algorithm is trained on historical data that reflects past discrimination, it may perpetuate those biases, leading to unfair hiring practices. To combat this, organizations must take proactive steps to identify and mitigate biases in their training datasets. This involves not just cleaning the data but also ensuring a diverse representation of demographics within it.

Moreover, achieving fairness is not just a technical challenge; it requires an equitable algorithm design process. This means involving a diverse group of stakeholders in the development of AI systems. Picture a potluck dinner where everyone brings their unique dish to the table. The more diverse the contributions, the richer the meal. Similarly, when designing algorithms, including voices from various backgrounds—whether they be different genders, ethnicities, or socio-economic statuses—ensures a broader perspective and helps in creating a more balanced and fair AI system. Collaboration is key; when multiple viewpoints are considered, the likelihood of overlooking biases decreases significantly.

In summary, fairness in AI is not merely an idealistic goal but a necessary foundation for building technology that serves everyone. It demands rigorous attention to data quality, a commitment to inclusive design processes, and a continuous effort to challenge and rectify biases as they arise. As we move forward in this exciting yet challenging field, let’s remember that the real power of AI lies in its potential to uplift and empower all individuals, not just a select few.

- What is fairness in AI? Fairness in AI refers to the principle of ensuring that AI systems operate without bias, providing equal treatment and opportunities to all individuals regardless of their background.

- How can bias in data affect AI outcomes? If the data used to train AI models is biased, the resulting AI decisions can perpetuate those biases, leading to unfair treatment in areas like hiring, lending, and law enforcement.

- Why is diverse stakeholder involvement important in AI design? Involving diverse stakeholders helps to ensure that multiple perspectives are considered, which can lead to more equitable and fair AI systems.

Addressing Bias in Data

In the realm of artificial intelligence, addressing bias in data is not just a technical challenge; it's a moral imperative. Imagine a world where the decisions made by AI systems could unfairly disadvantage entire groups of people simply because the data they were trained on reflected historical inequalities. This scenario highlights the urgent need to scrutinize the data that feeds our algorithms. After all, as the saying goes, "garbage in, garbage out." If the input data is flawed or biased, the output will inevitably reflect those same imperfections.

To effectively tackle bias in data, we must first understand its origins. Bias can creep in at various stages of data collection and processing. For instance, if the data collected is not representative of the entire population, certain demographics may be underrepresented. This can lead to skewed results that do not accurately reflect reality. Furthermore, biases can also be introduced through the subjective choices made by data collectors or through the historical context of the data itself. Recognizing these sources of bias is the first step towards mitigation.

One effective strategy for addressing bias is to conduct thorough audits of the datasets used in AI training. This involves analyzing the data for imbalances and ensuring that it reflects a diverse range of perspectives. For example, if an AI system is being developed for hiring practices, the training data should include a balanced representation of candidates across various demographics, including age, gender, ethnicity, and socio-economic status. By doing so, we can help ensure that the AI system does not inadvertently favor one group over another.
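A first-pass audit can be as simple as comparing how often each demographic group appears in the training data with its share of the population the system will serve. The Python sketch below assumes records are dictionaries with a demographic field; the `gender` field, the reference shares, and the 0.8 tolerance are illustrative assumptions, not recommended values. A representation check alone will not catch biased labels (for example, historical hiring outcomes), so it complements rather than replaces the other steps described here.

```python
from collections import Counter


def representation_report(records, attribute, reference_shares, tolerance=0.8):
    """Compare each group's share of the data with a reference population share.

    A group is flagged when its observed share is below `tolerance` times its
    reference share. Groups in the reference but missing from the data get a
    count of 0, so they are flagged rather than silently ignored.
    """
    counts = Counter(r.get(attribute, "unknown") for r in records)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no records to audit")
    report = {}
    for group in sorted(set(counts) | set(reference_shares)):
        observed = counts.get(group, 0) / total
        expected = reference_shares.get(group)  # None if no reference figure exists
        report[group] = {
            "observed": round(observed, 3),
            "expected": expected,
            "flagged": expected is not None and observed < tolerance * expected,
        }
    return report


# Hypothetical training set for a hiring model
candidates = [{"gender": "female"}] * 15 + [{"gender": "male"}] * 80 + [{"gender": "nonbinary"}] * 5
# Hypothetical reference shares, e.g. from the applicant pool the model will screen
reference = {"female": 0.48, "male": 0.48, "nonbinary": 0.04}
report = representation_report(candidates, "gender", reference)
for group, stats in report.items():
    print(group, stats)
```

With these hypothetical numbers, female candidates (15% of the data against a 48% reference) are flagged. Where the reference shares come from matters as much as the code: they should describe the population the system will actually be used on.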

Moreover, engaging a diverse group of stakeholders in the data collection and algorithm design process is crucial. This collaborative approach not only enriches the development process but also helps to identify potential biases that may not be immediately apparent to a homogeneous group. When people from different backgrounds come together, they can challenge assumptions and broaden the understanding of what fairness looks like in AI.

Finally, it's essential to implement continuous monitoring and feedback loops once the AI systems are deployed. This means regularly assessing the performance of AI models to ensure they are not perpetuating biases over time. If biases are detected, it is vital to take corrective actions swiftly, whether that means retraining the model with more representative data or adjusting the algorithms to account for identified disparities.
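One way to make such monitoring routine is to recompute a simple fairness metric on each batch of recent decisions. The Python sketch below compares positive-decision rates across groups and flags any group whose rate falls below 80% of the highest group's rate, a threshold borrowed from the "four-fifths" rule of thumb in US employment-selection guidance. The group labels, the batch, and the choice of metric are assumptions for illustration; selection-rate parity is only one of several fairness definitions, and different definitions can conflict.

```python
from collections import defaultdict


def selection_rates(groups, decisions):
    """Return the fraction of positive decisions for each group."""
    if len(groups) != len(decisions):
        raise ValueError("groups and decisions must have the same length")
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, decision in zip(groups, decisions):
        totals[group] += 1
        positives[group] += 1 if decision else 0
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_check(groups, decisions, threshold=0.8):
    """Flag groups whose selection rate is below `threshold` x the highest rate."""
    rates = selection_rates(groups, decisions)
    if not rates:
        return {}
    best = max(rates.values())
    report = {}
    for group, rate in rates.items():
        ratio = rate / best if best > 0 else 1.0  # no positives at all: nothing to compare
        report[group] = {"rate": rate, "ratio": ratio, "flagged": ratio < threshold}
    return report


# Hypothetical batch: one week of screening decisions (1 = advanced, 0 = rejected)
groups = ["A"] * 10 + ["B"] * 10
decisions = [1] * 6 + [0] * 4 + [1] * 3 + [0] * 7
for group, stats in disparate_impact_check(groups, decisions).items():
    print(group, stats)
```

Here group B's rate (30%) is half of group A's (60%), so B is flagged. A flag is a prompt for human investigation, for example into whether the incoming data has drifted, not proof of discrimination on its own; small batches in particular produce noisy rates.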

In summary, addressing bias in data is a multifaceted endeavor that requires vigilance, collaboration, and a commitment to ethical standards. By actively engaging with the data we use and the systems we create, we can foster a more equitable technological landscape that benefits everyone.

- What is data bias? Data bias refers to systematic errors in data that can lead to unfair outcomes in AI systems, often resulting from unrepresentative samples or subjective data collection methods.

- How can we identify bias in data? Bias can be identified through data audits, statistical analysis, and by evaluating the representation of different demographics within the dataset.

- Why is it important to address bias in AI? Addressing bias is crucial to ensure that AI systems operate fairly and do not reinforce existing inequalities in society.

- What role do diverse teams play in mitigating bias? Diverse teams bring a variety of perspectives that can help identify and address potential biases in data and algorithms, leading to more equitable AI systems.

Equitable Algorithm Design

When we talk about equitable algorithm design, we’re diving into a world where technology meets fairness. Think of algorithms as chefs in a kitchen, crafting the perfect dish for everyone, regardless of their background. Just as a chef needs to understand the diverse tastes of their diners, developers must consider the varied perspectives of users when creating algorithms. This means not only recognizing but actively incorporating the voices of different demographics to ensure that technology serves all, not just a select few.

To achieve this, a collaborative approach is essential. Developers should engage with stakeholders from various fields, including ethicists, sociologists, and community representatives. This collaboration is akin to assembling a diverse team of chefs, each bringing their unique flavor to the table. By pooling knowledge and experiences, we can create algorithms that are more inclusive and reflective of the society they are meant to serve. It's about breaking down silos and fostering an environment where everyone’s input is valued.

Moreover, we need to consider the data that feeds these algorithms. If the ingredients are flawed, the final dish will be, too. This means rigorously examining training datasets for biases that could skew outcomes. For instance, if an algorithm is trained primarily on data from one demographic, it may fail to accurately serve others. Therefore, it’s vital to ensure that datasets are representative and diverse. Think of it as ensuring a recipe has all the necessary spices to create a balanced flavor profile.

In addition, developers should implement continuous monitoring of algorithms post-deployment. Just like a chef tastes their dish throughout the cooking process, developers must regularly assess the performance of their algorithms to identify and rectify any biases that may emerge over time. This ongoing evaluation will help maintain fairness and accountability, ensuring that the technology evolves alongside societal changes.

In conclusion, equitable algorithm design is not just a technical challenge; it’s a moral imperative. By fostering collaboration, ensuring diverse data representation, and committing to ongoing evaluation, we can create algorithms that are truly equitable. In this way, technology can serve as a powerful tool for social good, promoting fairness and equality in a rapidly changing world.

- What is equitable algorithm design? Equitable algorithm design refers to creating algorithms that are fair and inclusive, taking into account the diverse backgrounds and needs of all users.

- Why is collaboration important in algorithm design? Collaboration ensures a variety of perspectives are considered, leading to more inclusive and effective algorithms.

- How can biases in data be addressed? By rigorously examining and diversifying training datasets, developers can identify and mitigate biases that may affect algorithm outcomes.

- What role does continuous monitoring play in algorithm design? Continuous monitoring helps identify and correct biases that may arise after deployment, ensuring the algorithm remains fair and effective over time.

Accountability in AI Systems

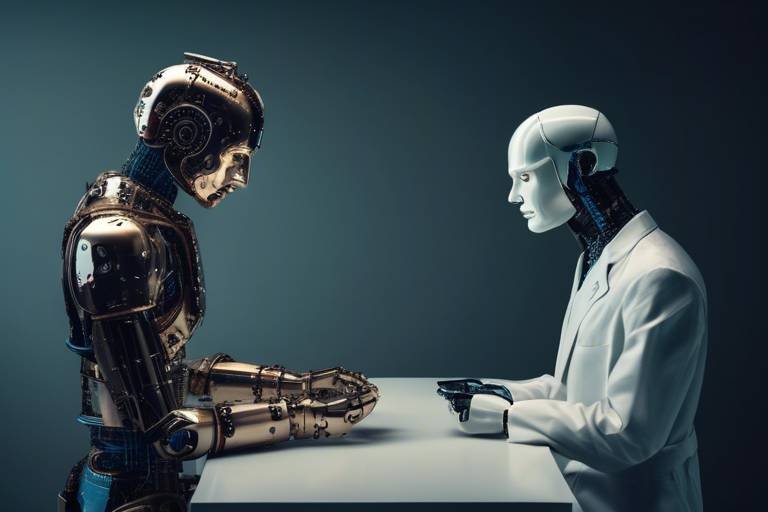

Establishing accountability in AI systems is not just a luxury; it’s a necessity. As artificial intelligence continues to embed itself in our daily lives—from self-driving cars to recommendation algorithms—it's crucial that developers and organizations are held responsible for the outcomes of their technologies. Imagine a world where a malfunctioning AI system causes harm, yet no one is held accountable. This scenario raises a multitude of ethical and legal questions that we must address.

Accountability in AI is about more than just assigning blame; it’s about creating a culture of responsibility. This involves several key components:

- Clear Ownership: Organizations must define who is responsible for the AI systems they create. Is it the developers, the company, or the end-users? Clear ownership helps in tracing accountability.

- Transparent Processes: Transparency in how AI systems operate is essential. When users understand how decisions are made, they can better trust and hold systems accountable.

- Regulatory Compliance: Adhering to laws and regulations supports ethical practices and also protects organizations from potential liabilities.

To illustrate the importance of accountability, consider the use of AI in hiring processes. If an AI system inadvertently discriminates against a qualified candidate due to biased training data, who should be held responsible? The developers who created the algorithm? The organization that deployed it? Or the AI itself? This complexity highlights the need for robust accountability frameworks that can address such dilemmas.

Moreover, implementing accountability mechanisms can foster trust between AI systems and their users. When people know that there are checks and balances in place, they are more likely to embrace AI technologies. This could involve regular audits of AI systems, where independent third parties assess the performance and ethical implications of these technologies. Such audits can help identify potential biases and ensure that the AI operates within ethical boundaries.

In conclusion, accountability in AI systems is not merely about avoiding blame; it is about fostering a responsible innovation environment. As we continue to push the boundaries of what AI can achieve, we must also push for frameworks that ensure these technologies are developed and deployed ethically. After all, the future of AI is not just about technological advancement; it’s about how we navigate the moral landscape that comes with it.

- What is accountability in AI systems? Accountability in AI systems refers to the responsibility of developers and organizations for the outcomes of their technologies, ensuring ethical practices and transparency.

- Why is accountability important in AI? Accountability is crucial to build trust, ensure ethical usage, and prevent harm caused by AI technologies.

- How can organizations ensure accountability in their AI systems? Organizations can ensure accountability by establishing clear ownership, maintaining transparency, and adhering to regulatory compliance.

The Role of Regulations in AI Ethics

As we dive deeper into the world of artificial intelligence, the need for robust regulations becomes increasingly clear. Imagine a ship sailing without a compass; it’s bound to drift off course. Similarly, without regulations, AI technologies can veer into ethical grey areas that may result in harmful consequences. Regulations serve as our compass, guiding the development of AI in a manner that respects human rights and societal norms. They provide a framework within which innovators can operate, ensuring that technological advancements do not come at the expense of ethical considerations.

Moreover, regulations play a pivotal role in addressing public concerns about AI. As AI systems become more integrated into our daily lives, from self-driving cars to healthcare diagnostics, the potential for misuse or unintended consequences grows. Regulatory frameworks can help mitigate these risks by establishing clear guidelines for ethical AI development. For instance, regulations can mandate that AI systems undergo rigorous testing to ensure they are safe and effective before being deployed in real-world scenarios.

However, crafting effective regulations is no easy task. The landscape of AI is evolving at breakneck speed, making it challenging for lawmakers to keep up. This is where the concept of adaptive regulations comes into play. Adaptive regulations are flexible and can evolve alongside technological advancements, allowing for a more responsive approach to governance. This adaptability can help balance the need for innovation with the imperative of ethical oversight.

Another important aspect of AI regulations is the promotion of global standards. In a world where technology knows no borders, it's essential to establish international guidelines that ensure ethical practices across different jurisdictions. This not only fosters collaboration among nations but also helps prevent a race to the bottom, where countries may lower their ethical standards to attract tech companies. By creating a unified approach to AI ethics, we can ensure that all individuals, regardless of where they live, are protected from potential abuses of AI technologies.

Despite the clear benefits of regulations, there are challenges to implementation. For example, striking the right balance between fostering innovation and enforcing ethical standards can be tricky. If regulations are too stringent, they may stifle creativity and slow down technological progress. Conversely, if they are too lenient, the risks associated with AI may go unchecked. This delicate balancing act requires ongoing dialogue between developers, ethicists, and policymakers to create a regulatory environment that encourages responsible innovation.

In conclusion, the role of regulations in AI ethics is paramount. They not only provide necessary guidelines to ensure the ethical development of AI technologies but also help build public trust in these systems. As we continue to navigate this complex landscape, it is crucial that all stakeholders work together to create regulations that are both effective and adaptable, ensuring that the benefits of AI can be enjoyed by all, without compromising our moral compass.

- What is the primary purpose of AI regulations? The main purpose of AI regulations is to ensure that AI technologies are developed and deployed ethically, with respect for human rights and societal well-being.

- How can regulations adapt to rapidly changing AI technologies? Regulations can incorporate adaptive frameworks that allow for updates and changes in response to new technological advancements and challenges.

- Why is international collaboration important in AI ethics? International collaboration helps establish consistent ethical standards across different countries, preventing a race to the bottom in ethical practices.

Global Standards for AI Ethics

As the world increasingly embraces artificial intelligence, the need for global standards in AI ethics becomes more pressing. These standards are essential to ensure that AI technologies are developed and utilized in a manner that is not only effective but also ethical and responsible. Imagine a world where every AI system operates under a unified set of ethical guidelines—this would not only enhance trust but also foster innovation across borders.

Creating such standards requires collaboration among nations, organizations, and communities. It’s akin to assembling a jigsaw puzzle where each piece represents different cultural values, legal frameworks, and technological capabilities. When pieced together, these standards can help shape a cohesive approach to AI ethics. However, the path to achieving this is fraught with challenges. For instance, different countries may have varying definitions of what constitutes ethical behavior in AI, influenced by their unique social norms and legal systems.

To tackle these differences, international bodies like the United Nations and the IEEE have begun to draft guidelines aimed at fostering a common understanding of AI ethics. These guidelines typically emphasize key areas such as:

- Human Rights: Ensuring that AI respects and promotes fundamental human rights.

- Accountability: Establishing clear lines of responsibility for AI outcomes.

- Transparency: Promoting openness in AI decision-making processes.

- Privacy: Safeguarding personal data against misuse.

Moreover, global standards encourage organizations to adopt best practices, which can improve public trust in AI technologies. Companies that visibly adhere to recognized ethical standards can strengthen both their reputation and their customers' loyalty. Just as brands that prioritize sustainability often see increased consumer support, companies that commit to ethical AI practices may benefit in the same way.

However, implementing these global standards is not without its hurdles. One major challenge is the dynamic nature of technology itself. AI evolves rapidly, and the standards must be flexible enough to adapt to new developments. This requires ongoing dialogue between stakeholders, including technologists, ethicists, policymakers, and the public. Think of it as a dance where everyone must stay in sync to avoid stepping on each other's toes.

In conclusion, while the journey towards establishing global standards for AI ethics is complex, it is a necessary endeavor. By fostering international collaboration and understanding, we can create a future where AI serves as a force for good, enhancing lives while respecting the ethical boundaries that define our humanity.

Q: Why are global standards for AI ethics important?

A: Global standards are crucial to ensure that AI technologies are developed and used ethically, promoting trust and accountability across different regions.

Q: What challenges exist in creating these standards?

A: Challenges include differing cultural values, legal frameworks, and the rapidly changing nature of technology itself.

Q: Who is responsible for establishing these global standards?

A: International organizations, governments, and industry stakeholders all play a role in drafting and implementing AI ethics standards.

Q: How can organizations ensure they comply with these standards?

A: Organizations can adopt best practices, engage with ethical guidelines, and participate in ongoing training and discussions about AI ethics.

Challenges in Implementing Regulations

Implementing regulations for artificial intelligence (AI) is no walk in the park. It's akin to trying to catch smoke with your bare hands. As technology evolves at breakneck speed, regulators often find themselves playing catch-up with innovations that seem to sprout overnight. One of the primary challenges is striking the right balance between fostering innovation and ensuring ethical oversight: too much regulation can stifle creativity, while too little can allow serious harm to go unchecked.

Moreover, the rapid development of AI technologies means that regulations can quickly become outdated. For instance, a regulation that governs facial recognition technology today may not adequately address the nuances of tomorrow's AI advancements. This creates a constant need for adaptation and flexibility in regulatory frameworks, which can be a daunting task for policymakers.

Another significant hurdle is the global nature of AI. Technology knows no borders, and a regulation in one country may have little effect if other nations do not adopt similar standards. This disparity can lead to a "race to the bottom," where companies might relocate to jurisdictions with lax regulations, undermining ethical practices. To combat this, international collaboration is essential, yet it often proves difficult due to differing cultural values, regulatory philosophies, and governmental structures.

Furthermore, there is the issue of transparency. Many AI systems operate as "black boxes," making it challenging to understand how decisions are made. This opacity complicates the task of holding developers accountable for their creations. If a self-driving car makes a mistake, who do you blame? The manufacturer, the software engineer, or the data scientist? This ambiguity can lead to significant legal and ethical dilemmas.

Lastly, we must consider the public perception of AI. Many people harbor fears about the implications of AI, from job displacement to privacy concerns. These fears can lead to public backlash against regulations perceived as too lenient, or conversely, against innovations deemed too risky. Striking a balance between public safety and technological advancement is a tightrope walk that regulators must navigate with care.

In summary, the challenges in implementing AI regulations are multifaceted and complex. They require a nuanced understanding of technology, ethics, and societal needs. As we move forward, it’s crucial to foster an environment where innovation and ethics can coexist harmoniously, ensuring that AI serves as a tool for enhancing human life rather than a source of anxiety.

- What are the main challenges in regulating AI?

The main challenges include keeping pace with rapid technological advancements, ensuring global consistency, maintaining transparency, and balancing public perception with innovation.

- Why is global collaboration important in AI regulation?

Global collaboration is vital to prevent a "race to the bottom" in ethical standards and to ensure that regulations are effective across borders.

- How can transparency be improved in AI systems?

Transparency can be improved through better documentation of algorithms, clearer communication about how AI systems make decisions, and involving diverse stakeholders in the design process.

- What role do ethics play in AI development?

Ethics guide the development of AI technologies to ensure they respect human rights, promote fairness, and enhance societal well-being.

Frequently Asked Questions

- What is AI ethics, and why is it important?

AI ethics refers to the moral principles guiding the development and use of artificial intelligence. It's essential because it ensures that technology respects human rights and promotes the well-being of society. Without these ethical guidelines, we risk creating systems that could harm individuals or communities.

- How can fairness be ensured in AI systems?

To ensure fairness in AI systems, developers must identify and mitigate biases in algorithms and in the data used to train them. This involves using diverse, representative training datasets and considering a wide range of perspectives during the design process. By actively working to prevent discrimination, we can create technology that treats all groups fairly.

- What role does accountability play in AI?

Accountability in AI is crucial because it holds developers and organizations responsible for the outcomes of their technologies. When there's a clear line of accountability, it encourages ethical practices and fosters trust among users, ensuring that AI systems are developed with care and consideration.

- Are there regulations for AI ethics?

Yes. A growing number of governments are introducing regulations, such as the European Union's AI Act, to enforce ethical standards in the development of AI technologies. These regulations provide guidelines that help organizations navigate the moral landscape and ensure that ethical considerations are prioritized throughout the design and implementation process.

- What are the challenges in implementing AI regulations?

Implementing AI regulations comes with various challenges, such as balancing the need for innovation with ethical oversight. Additionally, the rapid pace of technological advancements makes it difficult to keep regulations up-to-date, leading to potential gaps in ethical governance.

- How can global standards for AI ethics be established?

Global standards for AI ethics can be established through international collaboration among governments, organizations, and experts. By working together to develop consistent guidelines, we can ensure that ethical considerations are prioritized across different countries and cultures.